In my CS program, my professor has claimed that NAND gates are the most basic gate to engineer, and so every other gate and higher-level circuits found in CPUs are made from NAND gates.

“Yeah? Well, you know, that's just like, uh, your opinion, man.” - The Dude

Your professor is … not correct, on several levels.

Let's unpack this.

NAND gates are the most basic gate to engineer

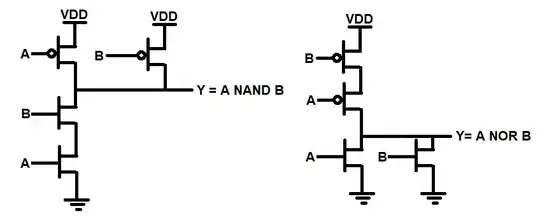

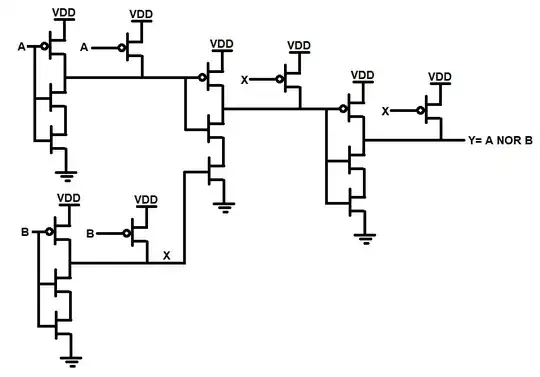

No, they're not. That would be the NOT gate, which needs only one transistor with a pull-up (two in CMOS). NAND and NOR are comparably complex: each needs at least two transistors with a pull-up, and in CMOS each takes four.

so every other gate and higher-level circuits found in CPUs are made from NAND gates

The identical claim can be made for NOR, as it is just as complex as NAND and can implement the same palette of functions through application of DeMorgan's Theorem.

So the prof's claims are true neither in theory nor in practice, as we'll see below.

The prof may be quite surprised to learn that a very important early computer was built from a single gate type (and is quite possibly the only one ever constructed that way): the Apollo Guidance Computer (AGC). This machine's logic was built entirely from just one IC: a dual 3-input (positive-logic) NOR. Not a NAND in sight, nor (pun intended) in flight.

More here: http://www.righto.com/2019/09/a-computer-built-from-nor-gates-inside.html

And their rationale for choosing that IC: https://www.ibiblio.org/apollo/hrst/archive/1716.pdf.

In the most general sense, if you have just two things, inversion and a 2-input AND or OR function, you have a functionally complete set and can make logic of any complexity. DeMorgan’s Theorem tells us so: with inversion available, AND can be turned into OR and vice versa. NOR and NAND by themselves satisfy this, as do AND+NOT and OR+NOT. Choose one, or a combination; they all work.
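To make the point above concrete, here is a minimal Python sketch that checks all four bases by brute force over the truth tables. The names (`BASES`, `basis_is_complete`) are made up for illustration; the only thing each basis is allowed to use is its own primitive(s).

```python
from itertools import product

# Primitive gates on 0/1 integers.
def nand(a, b):
    return 1 - (a & b)

def nor(a, b):
    return 1 - (a | b)

def inv(a):
    return 1 - a

# Each basis maps to (NOT, AND, OR), built only from that basis.
BASES = {
    "NAND only": (
        lambda a: nand(a, a),
        lambda a, b: nand(nand(a, b), nand(a, b)),
        lambda a, b: nand(nand(a, a), nand(b, b)),  # DeMorgan
    ),
    "NOR only": (
        lambda a: nor(a, a),
        lambda a, b: nor(nor(a, a), nor(b, b)),     # DeMorgan
        lambda a, b: nor(nor(a, b), nor(a, b)),
    ),
    "AND + NOT": (
        inv,
        lambda a, b: a & b,
        lambda a, b: inv(inv(a) & inv(b)),          # DeMorgan
    ),
    "OR + NOT": (
        inv,
        lambda a, b: inv(inv(a) | inv(b)),          # DeMorgan
        lambda a, b: a | b,
    ),
}

def basis_is_complete(not_f, and_f, or_f):
    """True if the three derived gates match NOT, AND and OR on every input."""
    return all(
        not_f(a) == 1 - a and and_f(a, b) == (a & b) and or_f(a, b) == (a | b)
        for a, b in product((0, 1), repeat=2)
    )

for name, gates in BASES.items():
    print(name, basis_is_complete(*gates))
```

Each line should report `True`. Once NOT, AND and OR are in hand, any truth table can be written as a sum of products, which is why any one of these bases is enough.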

Do people still make things out of a single type of gate, be it NAND or NOR? Not so much. Gate arrays or sea-of-gates are extreme examples of this idea, and yes, these can be based exclusively on a single inverting logic type like NAND or NOR. Gate arrays have limits, inefficiencies and costs, and as a consequence aren’t so popular anymore. They've been largely supplanted by microcontrollers, CPLDs, FPGAs and of course ASICs.

For the hierarchy of chip structures (that is, levels of abstraction), we have, as you stated, transistors and interconnect wires as the lowest level. What's the abstraction level above that, then? We’ll get to that, I promise.

In the salad days of chip design (think of Jack Kilby, Robert Noyce), the answer was… not much. Transistors and interconnect were laid out polygon-by-polygon and layer-by-layer, by hand (first in rubylith, then later by physical CAD software), to capture the design and create a mask set. This practice originated in bipolar and persisted well into the development of MOS technology.

With the publication of their 1978 paper (and later their book based on it), Carver Mead and Lynn Conway changed all that with their structured methodology based on MOS. Among other ideas, they introduced the concept of standard cells, a set of pre-defined functional primitives that can be re-used from chip to chip, and just as importantly, from process to process. Soon, this set of functions came to be offered as standard cell libraries by the process vendors, characterized on their target process for guaranteed performance.

Because the fab vendor guarantees the cells' performance, using the standard cell library flow provides a ‘clean handoff’ from the designer to the foundry that was all but impossible to achieve using the polygons-in-rubylith methods of the 1960s and 70s. (Tip o’ the hat to those early pioneers that did it; the effort needed to make even the simpler chips of the time was huge.)

This philosophy of design and re-use, enabled by Mead and Conway's structured design and the standard cell libraries to support it, is what kicked chip design into high gear in the 1980s.

Mead and Conway’s book Introduction to VLSI Systems is still available, and although it focuses on NMOS it is still relevant today.

Back to the present, and your question. With contemporary CMOS design, besides the base and complex gates, you see more use of transmission gates to construct logic. It could be said for CMOS that gate primitives, transmission gates and sequential storage are the basic logic-level pieces above individual transistors and wires. Gate primitives will include NAND, NOR and NOT, as well as efficient transistor-level implementations of more-complex elements like XOR/XNOR, AOI (AND-OR-INVERT), multiplexers and arithmetic. Transmission gates, buffers, latches and flops round out the rest of the low-level library.

The AOI / OAI gates deserve special mention. These structures are very efficient in CMOS: the smallest (three-input) versions need only 6 transistors. They are very handy for rendering sum-of-product or product-of-sum logic.
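As a rough illustration of why AOI is attractive, here is a small Python sketch of the smallest AOI gate (often called AOI21) next to the same function assembled from separate gates. The transistor counts assume plain static CMOS with one NMOS and one PMOS per input, which holds for simple single-stage gates like these; the helper names are hypothetical.

```python
from itertools import product

def aoi21(a, b, c):
    """AND-OR-INVERT: NOT((a AND b) OR c), a single CMOS stage."""
    return 1 - ((a & b) | c)

def static_cmos_transistors(num_inputs):
    # Assumption: fully complementary static CMOS gate in which each input
    # drives exactly one NMOS and one PMOS transistor.
    return 2 * num_inputs

# One AOI21 gate: 3 inputs.
AOI21_TRANSISTORS = static_cmos_transistors(3)

# Same function from separate gates: AND2 (NAND2 + inverter) feeding NOR2.
DISCRETE_TRANSISTORS = (
    static_cmos_transistors(2)    # NAND2
    + static_cmos_transistors(1)  # inverter, turning NAND2 into AND2
    + static_cmos_transistors(2)  # NOR2
)

def discrete_version(a, b, c):
    and_ab = 1 - (1 - (a & b))    # NAND2 followed by an inverter
    return 1 - (and_ab | c)       # NOR2

# The two implementations agree on every input combination.
assert all(
    aoi21(a, b, c) == discrete_version(a, b, c)
    for a, b, c in product((0, 1), repeat=3)
)

print(AOI21_TRANSISTORS, DISCRETE_TRANSISTORS)
```

Under these assumptions the single AOI21 comes out at 6 transistors against 10 for the discrete version, and it does the job in one gate delay rather than three.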

Bigger ‘regular’ functional units like RAMs and ROMs are also offered, being designed from the transistor level and characterized as blocks. These are usually handled as parameterized macros that are auto-generated based on user inputs (depth, width, number of ports, etc.).

More about standard cell libraries here: https://www.eng.biu.ac.il/temanad/files/2017/02/Lecture-4-Standard-Cell-Libraries.pdf

Do people still 'push polygons'? Sure. Critical-path blocks benefit from transistor-level hand-tweaked design to achieve higher performance, greater density or lower power. Mixed-signal blocks may also be done at least partially by hand. You can think of these as 'custom' cells that a designer may re-use from project to project, but nevertheless will also be characterized for a specific process as add-ons to the standard library.

Aside from those hand-made edge cases, chips are built using synthesis based on the standard cell library, with the designer’s main work being architecture, logic design and synthesis, as well as partitioning and placement - all to achieve the target performance using the least possible die area and at acceptable power.

In short, and at long last, in modern chip design the standard cell library is the next level of abstraction above transistors and wires that most designers interact with to create chips.

What's the next layer above the standard cell library to 'program' the CPU? Depends on the CPU. There might be PLAs (Programmable Logic Arrays), microcode ROMs or some other mapping structures that would be mask-programmed by rearranging only one or two contact layers. Such blocks allow making changes / fixing bugs without doing an entire base-layer tapeout. They can shorten schedule and reduce prototyping cost, at the expense of increased area compared to synthesized logic.
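To give a feel for how a PLA is 'programmed', here is a toy Python model, not how any particular CPU does it. The fixed structure is an AND plane feeding an OR plane; the two tables stand in for the contact pattern, so changing the function means editing the tables and nothing else. The names (`pla_eval`, `AND_PLANE`, `OR_PLANE`) and the full-adder example are made up for illustration.

```python
from itertools import product

def pla_eval(and_plane, or_plane, inputs):
    """Evaluate a PLA: product terms in the AND plane, sums in the OR plane."""
    # A product term is true when every listed input has the required value.
    terms = [
        all(inputs[i] == want for i, want in term.items())
        for term in and_plane
    ]
    # Each output ORs together the product terms it is connected to.
    return [int(any(terms[t] for t in connected)) for connected in or_plane]

# 'Programming' for a full adder with inputs (a, b, cin) at indices 0, 1, 2.
AND_PLANE = [
    {0: 1, 1: 1},        # term 0: a AND b
    {0: 1, 2: 1},        # term 1: a AND cin
    {1: 1, 2: 1},        # term 2: b AND cin
    {0: 1, 1: 0, 2: 0},  # term 3: a AND NOT b AND NOT cin
    {0: 0, 1: 1, 2: 0},  # term 4: NOT a AND b AND NOT cin
    {0: 0, 1: 0, 2: 1},  # term 5: NOT a AND NOT b AND cin
    {0: 1, 1: 1, 2: 1},  # term 6: a AND b AND cin
]
OR_PLANE = [
    [3, 4, 5, 6],        # output 0: sum
    [0, 1, 2],           # output 1: carry
]

for a, b, cin in product((0, 1), repeat=3):
    total, carry = pla_eval(AND_PLANE, OR_PLANE, (a, b, cin))
    assert total == (a + b + cin) % 2
    assert carry == (a + b + cin) // 2
```

Fixing a logic bug here means changing a table entry, which is loosely analogous to revising one or two contact layers instead of redoing the base layers.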