While creating a 250 GiB backup partition for my data, I noticed many discrepancies between the partition size and free space reported by Nautilus, gparted, df, tune2fs, etc.

At first I thought it was a GiB / GB confusion. It was not.

Then I thought it could be ext4's reserved blocks. It was not.

I'm completely puzzled. Here are the steps I followed, with screenshots:

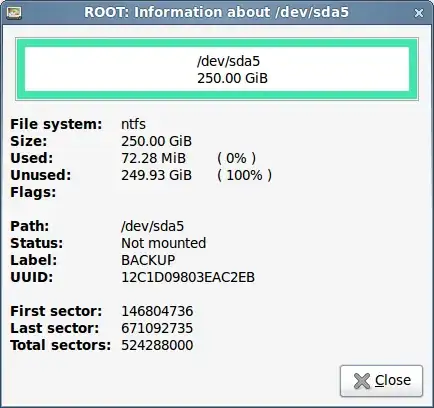

- First, NTFS. 524288000 sectors x 512 bytes/sector = 268435456000 bytes = 268.4 GB = 250 GiB.

Nautilus says "Total Capacity: 250.0 GB" (even though it's actually GiB, not GB). Apart from that minor mislabeling, so far, so good.
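To rule out the GB / GiB confusion, this is a minimal sketch of the conversion I did by hand. The function name `size_report` is just something I made up for this post; the sector count and the 512-byte sector size come from the screenshot above.

```shell
# Convert "count x unit size" into bytes, decimal GB (10^9) and binary GiB (2^30).
# size_report is a hypothetical helper name, not part of any tool.
size_report() {
    local count=$1 unit=$2
    local bytes=$(( count * unit ))   # integer math done by the shell
    local gb gib
    gb=$(LC_ALL=C awk -v b="$bytes" 'BEGIN { printf "%.1f", b / 1000000000 }')
    gib=$(LC_ALL=C awk -v b="$bytes" 'BEGIN { printf "%.1f", b / 1073741824 }')
    echo "$bytes bytes = $gb GB = $gib GiB"
}

size_report 524288000 512
# prints: 268435456000 bytes = 268.4 GB = 250.0 GiB
```

So the partition really is 250 GiB, and Nautilus is only using the wrong unit label.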

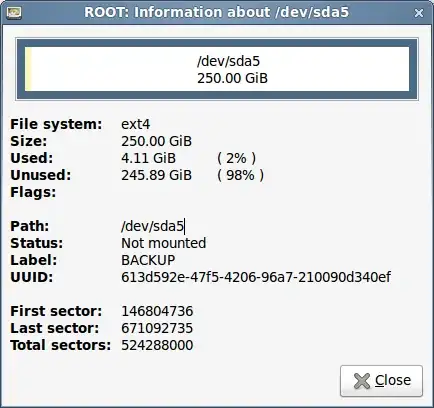

- Now, the same partition, formatted as ext4 with gparted:

First, Last and Total sectors are the same. It IS the same 250GiB partition. Used size is 4.11GiB (reserved blocks maybe?)

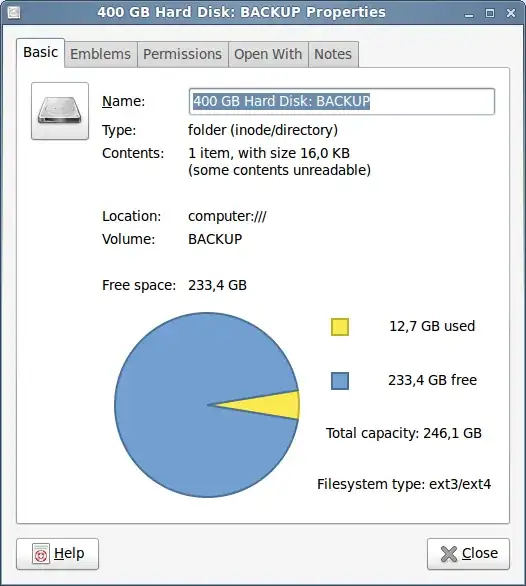

Nope. Looks like reserved blocks are 12.7 GiB (~5%, ouch!). But why is Total Capacity now only 246.1 GiB? That difference (sort of) matches the 4.11 GiB reported by gparted. But if it's not from reserved blocks, what is it? And why didn't gparted report that 12.7 GiB as used space?

    $ df -h /dev/sda5
    Filesystem  Size  Used Avail Use% Mounted on
    /dev/sda5   247G  188M  234G   1% /media/BACKUP

df matches Nautilus in reported free space. But only 188M used? Shouldn't it be ~12 GB? And total capacity is still wrong. So I ran tune2fs to find some clues (irrelevant output is omitted).

    $ sudo tune2fs -l /dev/sda5
    tune2fs 1.41.12 (17-May-2010)
    Filesystem volume name:   BACKUP
    Filesystem UUID:          613d592e-47f5-4206-96a7-210090d340ef
    Filesystem features:      has_journal ext_attr resize_inode dir_index filetype extent flex_bg sparse_super large_file huge_file uninit_bg dir_nlink extra_isize
    Filesystem flags:         signed_directory_hash
    Filesystem state:         clean
    Filesystem OS type:       Linux
    Block count:              65536000
    Reserved block count:     3276800
    Free blocks:              64459851
    First block:              0
    Block size:               4096

65536000 total blocks * 4096 bytes/block = 268435456000 bytes = 268.4 GB = 250 GiB. It matches gparted.

3276800 reserved blocks = 13421772800 bytes = 13.4 GB = 12.5 GiB. It (again, sort of) matches Nautilus.

64459851 free blocks = 264027549696 bytes = 264.0 GB = 245.9 GiB. Why? Shouldn't it be either 250 - 12.5 = 237.5 (or 250 - (12.5 + 4.11) = ~233)?
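To make sure I didn't slip on the arithmetic, here is the same calculation as a small sketch using the block counts from the tune2fs output above. The helper name `gib` is made up for this post. The last line, total minus free blocks, is a figure I had not worked out by hand; it happens to land on the 4.11 GiB that gparted shows as used, though I am only guessing that the two are related.

```shell
# Values copied from the tune2fs -l output in this post.
block_size=4096
total=65536000
reserved=3276800
free=64459851

# gib is a hypothetical helper: block count -> GiB with two decimals.
gib() {
    LC_ALL=C awk -v n="$1" -v s="$block_size" \
        'BEGIN { printf "%.2f", n * s / 1073741824 }'
}

overhead=$(( total - free ))   # blocks that are not counted as free

echo "total:    $total blocks = $(gib "$total") GiB"        # 250.00 GiB
echo "reserved: $reserved blocks = $(gib "$reserved") GiB"  # 12.50 GiB
echo "free:     $free blocks = $(gib "$free") GiB"          # 245.89 GiB
echo "not free: $overhead blocks = $(gib "$overhead") GiB"  # 4.11 GiB
```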

Removing reserved blocks:

    $ sudo tune2fs -m 0 /dev/sda5
    tune2fs 1.41.12 (17-May-2010)
    Setting reserved blocks percentage to 0% (0 blocks)

    $ sudo tune2fs -l /dev/sda5
    tune2fs 1.41.12 (17-May-2010)
    Filesystem volume name:   BACKUP
    Filesystem UUID:          613d592e-47f5-4206-96a7-210090d340ef
    Filesystem features:      has_journal ext_attr resize_inode dir_index filetype extent flex_bg sparse_super large_file huge_file uninit_bg dir_nlink extra_isize
    Filesystem flags:         signed_directory_hash
    Filesystem state:         clean
    Filesystem OS type:       Linux
    Block count:              65536000
    Reserved block count:     0
    Free blocks:              64459851
    Block size:               4096

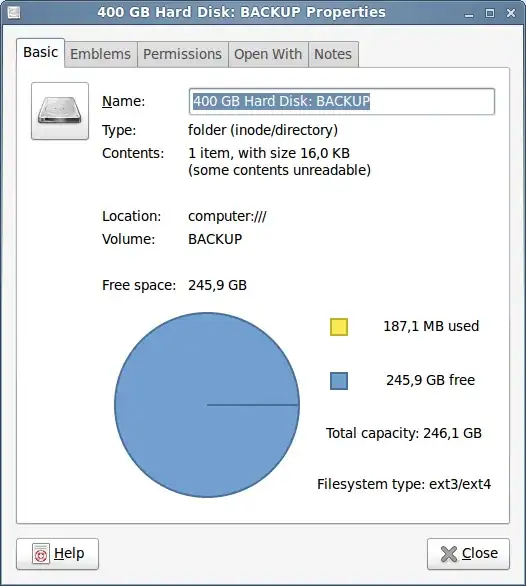

As expected, same block count, 0 reserved blocks, but... the same free blocks? Didn't I just free 12.5 GiB?

    $ df -h /dev/sda5
    Filesystem  Size  Used Avail Use% Mounted on
    /dev/sda5   247G  188M  246G   1% /media/BACKUP

Looks like I did. Available space went up from 233 to 245.9 GiB. gparted didn't care at all, showing exactly the same info (so there's no point posting an identical screenshot).
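One way I can reproduce those two "available" figures is to assume that available space is simply free blocks minus reserved blocks. That is only my assumption about how df and Nautilus work, not something I have confirmed. A quick sketch, with `avail_gib` as a made-up helper name:

```shell
# Values copied from the tune2fs -l output in this post.
block_size=4096
free=64459851

# avail_gib is a hypothetical helper: (free - reserved) blocks -> GiB.
# $1 = reserved block count
avail_gib() {
    LC_ALL=C awk -v f="$free" -v r="$1" -v s="$block_size" \
        'BEGIN { printf "%.1f", (f - r) * s / 1073741824 }'
}

echo "with 5% reserved: $(avail_gib 3276800) GiB available"   # 233.4
echo "with 0% reserved: $(avail_gib 0) GiB available"         # 245.9
```

Under that assumption the numbers line up with the 233 and 245.9 GiB above, and with df's 234G and 246G if `df -h` rounds up. It would also mean the free block count never had to change when I ran `tune2fs -m 0`.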

What a huge mess!

I tried to document it as best as I could... So, please can someone give me any clue on what's going on here?

- What are those mysterious 4.11 GiB that went missing when reformatting from NTFS to ext4?

- Why are there so many discrepancies between gparted, Nautilus, tune2fs and df?

- What is wrong with my math? (See the questions scattered throughout this post.)
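In case it helps anyone compare the tools on the same footing, the raw counters of a mounted filesystem can be dumped in blocks rather than rounded units. This sketch assumes GNU coreutils `stat`; the wrapper name `fs_counters` is made up for this post, and I am assuming (not certain) that these are the same counters df works from.

```shell
# fs_counters is a hypothetical wrapper around GNU stat's filesystem mode.
# %S = fundamental block size, %b = total data blocks,
# %f = free blocks, %a = free blocks available to non-superuser
fs_counters() {
    local path=${1:-/}
    stat -f --format='block_size=%S total=%b free=%f avail=%a' "$path"
}

fs_counters /
```

On my machine I would run it as `fs_counters /media/BACKUP` and compare `free` and `avail` before and after changing the reserved percentage.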

Any help is appreciated. Until I can figure out what is going on, I am seriously considering giving up on ext4 in favor of NTFS for everything but my / partition.

Thanks!