What do I want to do?

I want to measure the gravitational acceleration $g$.

To do so I measure the fall time of an object from a height $h$, which I have measured previously up to an uncertainty $\sigma_h$.

I repeat this $n$ times and get $n$ different fall times $T_1, \dots, T_n$.

For the sake of simplicity, assume that these fall times have been measured without any systematic measurement error, so that the only estimate for their uncertainty is their standard deviation.

What is my problem?

I can now come up with two different methods for combining my measurements to find a value for $g$. Obviously theory predicts $g=\frac{2h}{T^2}$.

Method 1

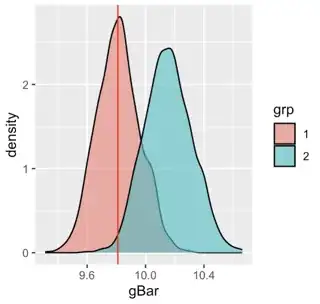

I first average my different time measurements $$\bar{T} = \frac{1}{n} \sum_i T_i,$$ calculate my best estimate for the uncertainty on the mean $$\sigma_{\bar{T}} = \sqrt{\frac{1}{n(n-1)}\sum_i (T_i-\bar{T})^2},$$ plug the nominal values for $\bar{T}$ and $h$ into the above formula for $g$ to calculate my nominal value for $g$, and then do a Gaussian error propagation in both $\bar{T}$ and $h$ to arrive at my result with uncertainty.
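A minimal Python sketch of this procedure, assuming the uncertainties in $h$ and $\bar{T}$ are uncorrelated so that first-order Gaussian propagation gives $(\sigma_g/g)^2 = (\sigma_h/h)^2 + (2\sigma_{\bar{T}}/\bar{T})^2$. The function name `method_1` and its arguments are illustrative only:

```python
import math

def method_1(times, h, sigma_h):
    """Average the fall times first, then compute g and propagate errors.

    Assumes h and the mean fall time are uncorrelated (illustrative sketch).
    Returns (g, sigma_g).
    """
    n = len(times)
    # Mean fall time and standard error of the mean
    t_mean = sum(times) / n
    sigma_t_mean = math.sqrt(
        sum((t - t_mean) ** 2 for t in times) / (n * (n - 1))
    )
    # Nominal value from g = 2h / T^2
    g = 2 * h / t_mean ** 2
    # First-order Gaussian propagation in relative form:
    # (sigma_g / g)^2 = (sigma_h / h)^2 + (2 * sigma_T / T)^2
    sigma_g = g * math.sqrt(
        (sigma_h / h) ** 2 + (2 * sigma_t_mean / t_mean) ** 2
    )
    return g, sigma_g
```

Here the uncertainty in $h$ and the statistical uncertainty in $\bar{T}$ end up combined in a single $\sigma_g$.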

Method 2

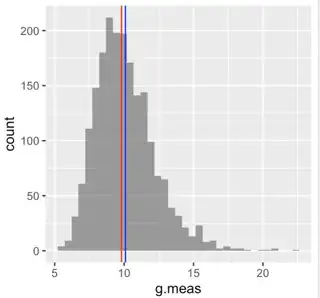

I calculate $g_i$ for each of my measurements $T_i$. I then average the $g_i$. I find my statistical uncertainty in $\bar{g}$ by calculating $$\sigma_{\bar{g}}^{\text{stat}} = \sqrt{\frac{1}{n(n-1)}\sum_i (g_i-\bar{g})^2}.$$ I would then calculate my 'systematic' or correlated uncertainty caused by the uncertainty in $h$ by doing a Gaussian error propagation (or by parameter shifting?) but this time only in $h$.
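A minimal Python sketch of this procedure, as I understand it. Because $h$ enters every $g_i$ as the same multiplicative factor, the propagation in $h$ alone reduces to $\sigma_{\bar{g}}^{\text{sys}} = \bar{g}\,\sigma_h/h$. The function name `method_2`, its arguments, and the label `sigma_sys` are illustrative only:

```python
import math

def method_2(times, h, sigma_h):
    """Compute g_i for each fall time, then average the g_i.

    Returns (g_mean, sigma_stat, sigma_sys), keeping the statistical
    scatter of the g_i separate from the uncertainty due to h.
    """
    n = len(times)
    # One value of g per measured fall time, all using the same nominal h
    g_values = [2 * h / t ** 2 for t in times]
    g_mean = sum(g_values) / n
    # Standard error of the mean of the g_i (h held fixed)
    sigma_stat = math.sqrt(
        sum((g - g_mean) ** 2 for g in g_values) / (n * (n - 1))
    )
    # Propagation in h only: g_mean is proportional to h,
    # so the relative uncertainty carries over unchanged
    sigma_sys = g_mean * sigma_h / h
    return g_mean, sigma_stat, sigma_sys
```

Note that the nominal value differs from Method 1 in general, since the mean of $1/T_i^2$ is not equal to $1/\bar{T}^2$.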

Professor's opinion/Conclusion

My professor told me that method 2 is invalid because the $g_i$ wouldn't be independent of each other (I assume method 2 then breaks down because I can't use the simple formula for the error on the mean of the $g_i$ if they are dependent, correct?). This dependence stems from the inclusion of $h$ in the formula for the $g_i$. But wouldn't this affect all measurements in the same way, essentially only scaling all the $g_i$ by the same factor, and wouldn't it thus still be correct to average them?

If not, is there some other way to modify method 2, to make it correct? For example by using a different formula when computing the error on $\bar{g}$?

Also: Would both ways technically be unbiased estimators for $g$?

Related but sort of implies the opposite of what my professor said: When to average in the lab for indirect measurements?