In their treatise "Principles of Optics", Max Born and Emil Wolf state in the section on coherence length (page 317, para 1) that:

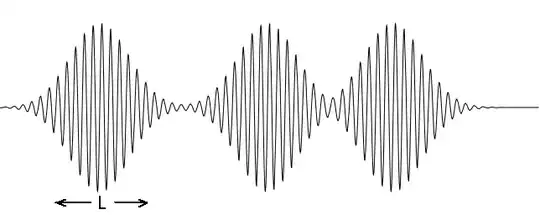

... as the difference of optical path is increased, the visibility of fringes decreases (in general not monotonically), and they eventually disappear. We can account for this disappearance of the fringes by supposing that the light of the spectral line is not strictly monochromatic, but is made of wavetrains of finite length ...

I really can't understand the meaning of the emphasized line. Does it refer to a light pulse of finite length, similar to a pulse travelling down a string (like the ones we see in video games!), or to the width of the spectral line? Please enlighten me!