Here is some classical background to this thought experiment. First consider a classical ruler (call it A) that measures a continuous classical variable. Any real ruler must be discretised. Compare ruler A with a second ruler (call it B) whose divisions are 100 times finer, take many random values that all read A = x, and plot their readings on B as a distribution. This distribution comes out as a (slightly smoothed) rectangle whose width is one interval of A. To represent such a measurement as a continuous variable, one would therefore naturally draw each measurement as a bar in a histogram. In this sense every ruler, however precise, carries this uncertainty. However, the uncertainty is artificial: classical 'information' about the measured value still exists, and the experimenter simply chooses to ignore it.
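The classical comparison can be sketched numerically. This is a minimal illustration under assumptions that are not in the text: the true value is drawn uniformly from [0, 10), both rulers read by rounding down, ruler A's marks coincide with every 100th mark of ruler B, and the helper name `fine_histogram_given_coarse` is hypothetical.

```python
import math
import random
from collections import Counter


def fine_histogram_given_coarse(a_reading: int, coarse: float = 1.0, ratio: int = 100,
                                n_samples: int = 100_000, seed: int = 0) -> Counter:
    """Histogram of ruler-B readings for true values that ruler A reads as `a_reading`.

    Illustrative assumptions: true values are uniform on [0, 10 * coarse),
    both rulers round down, and A's marks sit on every `ratio`-th mark of B.
    """
    rng = random.Random(seed)
    fine = coarse / ratio
    hist = Counter()
    for _ in range(n_samples):
        x = rng.uniform(0.0, 10.0 * coarse)   # hypothetical true value
        b = math.floor(x / fine)              # reading on the fine ruler B
        a = b // ratio                        # ruler A: every ratio-th mark of B
        if a == a_reading:                    # keep only values that A reads as a_reading
            hist[b] += 1
    return hist


hist = fine_histogram_given_coarse(a_reading=3)
print(min(hist), max(hist), len(hist))  # → 300 399 100
```

The B readings fill one A interval (100 fine bins) with roughly equal counts, which is the rectangle described above. Because this idealised sketch has perfectly aligned rulers and no reading error, the rectangle comes out perfectly sharp; the slight smoothing would come from imperfections the sketch does not model.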

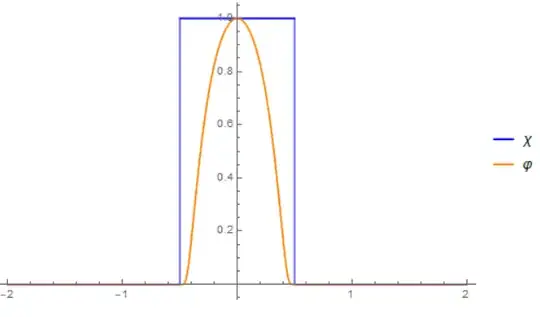

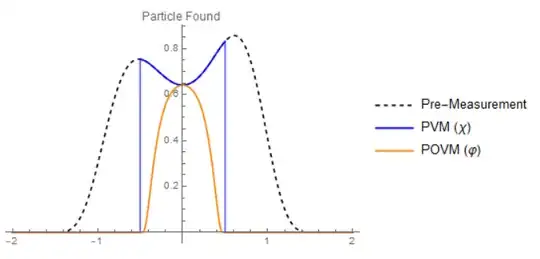

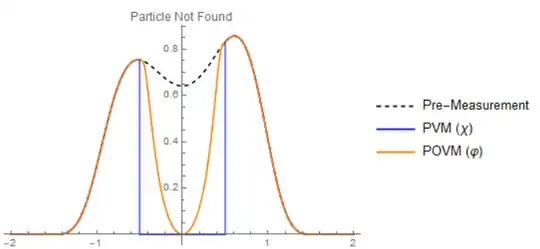

Now consider the same variable for a quantum wavefunction. The wavefunction is confined to a 1D line, and we ask how the graph of $P_i$ (the probability, on the y-axis) against $\lambda_i$ (the eigenvalue of state $i$, on the x-axis) changes. Introduce a quantum-mechanical ruler A. This ruler deliberately scrambles the classical information about the position (although it is hard to picture how it might do so), so that after the wavefunction collapses, the distribution in $\lambda_i$ is a Gaussian or otherwise random distribution (perhaps multiplied by the original distribution) around the actual reading $\lambda_a$, which is degenerate in $i$: many states $i$ give the same reading $\lambda_a$. This could be checked by applying a 'perfect' quantum ruler B immediately after A and recording the distribution in $\lambda_i$.
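One way to make ruler A concrete is to swap in the standard model of an unsharp (Gaussian) position measurement, which is described by Kraus operators rather than by the eigenprojectors of a single Hermitian operator. This is an assumption of the sketch, not the author's construction: the wavefunction lives on a discrete grid, the reading $\lambda_a$ is taken as given, the width `sigma` is a hypothetical parameter, and ruler B is modelled as an ideal position measurement on the grid, so it records $P_i = |\psi'_i|^2$.

```python
import cmath
import math


def normalise(psi):
    """Scale a list of complex amplitudes to unit norm."""
    norm = math.sqrt(sum(abs(a) ** 2 for a in psi))
    return [a / norm for a in psi]


def gaussian_collapse(psi, xs, reading, sigma):
    """State after an unsharp position reading (assumed Gaussian Kraus operator).

    The Kraus operator is diagonal in position with entries
    exp(-(x - reading)**2 / (4 * sigma**2)), so the new probabilities are
    |psi(x)|**2 * exp(-(x - reading)**2 / (2 * sigma**2)), renormalised.
    """
    return normalise([a * math.exp(-(x - reading) ** 2 / (4 * sigma ** 2))
                      for a, x in zip(psi, xs)])


def ideal_ruler_distribution(psi):
    """Probabilities P_i that an ideal ruler B reports grid point i."""
    return [abs(a) ** 2 for a in psi]


# Example: a broad wavepacket (|psi|^2 has standard deviation 3) with phase e^{2ix}.
xs = [-10 + 0.01 * i for i in range(2001)]
psi0 = normalise([cmath.exp(-x ** 2 / 36 + 2j * x) for x in xs])
post = gaussian_collapse(psi0, xs, reading=1.0, sigma=0.5)
P = ideal_ruler_distribution(post)
mean = sum(p * x for p, x in zip(P, xs))
print(round(mean, 3))  # → 0.973
```

Under these assumptions the distribution recorded by ruler B is the Gaussian weight multiplied by the original $|\psi|^2$ and renormalised, so it peaks near, but slightly short of, the reading $\lambda_a = 1$ (at $36/37$, with variance $9/37$).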

Now the question is: what is the Hermitian operator of quantum-mechanical ruler A? If it is simply the original operator discretised in steps of $d\lambda$, then the claim is that the wavefunction collapses onto a projection into the corresponding eigenspace; in particular, the new distribution is the normalised product of a uniform (rectangular) distribution of width $d\lambda$ with the original distribution. But isn't it more likely that the wavefunction collapses to something smoother?
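For comparison, the "original operator discretised by $d\lambda$" can be sketched on a grid. In this illustration (assumptions: position on a uniform grid, each interval $[k\,d\lambda, (k+1)\,d\lambda)$ assigned its midpoint as eigenvalue, and all helper names hypothetical), the operator is real and diagonal, hence Hermitian, and each eigenvalue is shared by every grid point in its interval. Collapse onto one eigenspace, via the projection postulate (Lüders rule), keeps the original amplitudes inside the interval and zeroes them outside.

```python
import math


def bin_index(x, dlam):
    """Index k of the ruler interval [k*dlam, (k+1)*dlam) containing x."""
    return math.floor(x / dlam)


def coarse_operator_diagonal(xs, dlam):
    """Diagonal of the discretised position operator on the grid xs.

    Every grid point is mapped to the midpoint of its interval, so the operator
    is real and diagonal (hence Hermitian) and its eigenvalues are degenerate.
    """
    return [(bin_index(x, dlam) + 0.5) * dlam for x in xs]


def projective_collapse(psi, xs, dlam, k):
    """Project psi onto the eigenspace of interval k and renormalise."""
    kept = [a if bin_index(x, dlam) == k else 0.0 for a, x in zip(psi, xs)]
    norm = math.sqrt(sum(abs(a) ** 2 for a in kept))
    return [a / norm for a in kept]


# Example: a broad real wavepacket measured by a ruler with dlam = 1.
xs = [-10 + 0.01 * i for i in range(2001)]
raw = [math.exp(-x ** 2 / 36) for x in xs]
n0 = math.sqrt(sum(a ** 2 for a in raw))
psi0 = [a / n0 for a in raw]

eigenvalues = coarse_operator_diagonal(xs, 1.0)
post = projective_collapse(psi0, xs, 1.0, k=1)
P = [abs(a) ** 2 for a in post]
support = [x for x, p in zip(xs, P) if p > 0]
print(len(set(eigenvalues)), min(support), max(support))
```

The sharp edges of the support at $k\,d\lambda$ and $(k+1)\,d\lambda$ are what make the collapsed distribution a rectangle multiplied by the original $|\psi|^2$: inside the interval, every probability is the original one scaled by the same constant.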

The crux of this thought experiment is that every quantum-mechanical ruler must, in some sense, scramble information about the observable. There is no such thing as a perfect ruler, so the corresponding Hermitian operators must be slightly perturbed. What these collapses look like, and how they occur, can only be answered by introducing better and better quantum-mechanical rulers. How sharp the collapse is, and what would constitute a perfect ruler, have not been answered, because what constitutes 'information' about an observable has never been settled. Why is a screen a position ruler, and not a momentum 'ruler'?