I read the wiki article about angular resolution, but I struggle to understand the role of image sensors in telescopes. Can a better image sensor help a telescope go beyond the diffraction limit? If not, how do I find the largest pixel size of an image sensor that will not prevent the telescope from operating at the diffraction limit?

1 Answer

The best possible resolution* which can be reached is given by the Rayleigh criterion

$$\theta = 1.22 \frac{\lambda}{D} \text{,}$$

where $\theta$ is the angular resolution, $\lambda$ the wavelength of the light used, and $D$ the diameter of the collecting lens or mirror.
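To get a feeling for the numbers, here is a minimal sketch that evaluates the Rayleigh criterion. The wavelength (550 nm) and aperture (20 cm) are assumed example values, and the function name is made up for illustration.

```python
import math

RAD_TO_ARCSEC = 180.0 / math.pi * 3600.0  # radians -> arcseconds


def rayleigh_limit_rad(wavelength_m, aperture_m):
    """Rayleigh angular resolution theta = 1.22 * lambda / D, in radians."""
    return 1.22 * wavelength_m / aperture_m


# Assumed example: green light (550 nm) through a 20 cm aperture.
theta = rayleigh_limit_rad(550e-9, 0.20)
print(f"theta = {theta:.3e} rad = {theta * RAD_TO_ARCSEC:.2f} arcsec")
```

For these assumed values the limit comes out a bit below one arcsecond. Doubling $D$ halves $\theta$.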

On the photodetector the image of the point spread function will have a diameter of

$$d = \frac{\lambda}{2 \, \text{NA}}$$

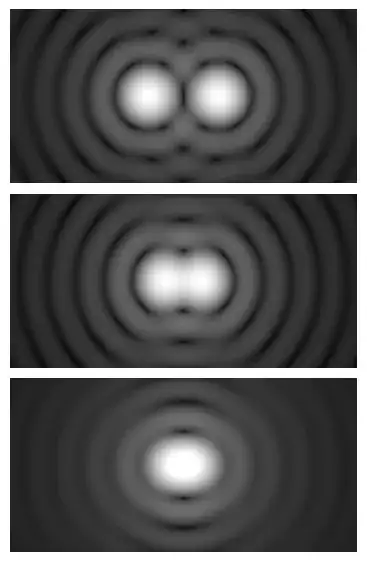

with $\text{NA}$ being the numerical aperture of the light cone hitting the detector. If there are no abberations the point spread function for a circular aperture looks like this:

The pixel size of the detector should be smaller than the central spot; otherwise you lose resolution.

Imagine pixels that are 5 times larger than the point spread function. You would see one pixel with some intensity on it, but you couldn't tell where on the pixel the light impinges.
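As a rough sketch of how one might turn this into a number for the largest usable pixel size: for a telescope with focal length $f$ and aperture $D$, the small-angle approximation gives $\text{NA} \approx D / (2f)$, so $d = \lambda / (2\,\text{NA}) \approx \lambda f / D$. The factor `pixels_per_spot=2.0` below is an assumption (the usual Nyquist-style rule of thumb of about two pixels across the spot), not something fixed by the formula above; the function names and the example telescope are likewise made up.

```python
def spot_diameter_m(wavelength_m, focal_length_m, aperture_m):
    """Spot diameter d = lambda / (2 NA), with the small-angle NA ~ D / (2 f)."""
    numerical_aperture = aperture_m / (2.0 * focal_length_m)  # assumption: f >> D
    return wavelength_m / (2.0 * numerical_aperture)


def max_pixel_pitch_m(wavelength_m, focal_length_m, aperture_m, pixels_per_spot=2.0):
    """Largest pixel pitch that still puts `pixels_per_spot` pixels across the spot.

    pixels_per_spot=2.0 is an assumed rule of thumb, not a hard limit.
    """
    return spot_diameter_m(wavelength_m, focal_length_m, aperture_m) / pixels_per_spot


# Assumed example: 550 nm light, f = 2 m, D = 0.2 m (an f/10 telescope).
d = spot_diameter_m(550e-9, 2.0, 0.20)
pitch = max_pixel_pitch_m(550e-9, 2.0, 0.20)
print(f"spot diameter   = {d * 1e6:.2f} um")
print(f"max pixel pitch = {pitch * 1e6:.2f} um")
```

Note that the spot size on the detector depends only on the wavelength and the focal ratio $f/D$, so a slower telescope (larger $f/D$) tolerates larger pixels.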

Very small pixels don't help you improve the resolution either. Imagine two point-like objects, each one resulting in a point spread function on the detector.

Once the pixels are smaller than the central spot, the minimum distance at which you can tell the two objects apart is set by the overlap of the two point spread functions and doesn't depend on how many pixels you use. For further information, see "Could Legolas actually see that far?" and the answers therein.
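The following toy calculation illustrates this point. It adds two equal, incoherent Airy patterns and compares the intensity midway between them with the brightest sample, for a coarse and a fine sampling grid. It is only a sketch under simplifying assumptions: a one-dimensional cut through the pattern, point sampling instead of integration over the pixel area, and no noise. The Bessel function is computed from its standard integral representation to keep the example free of dependencies; all function names are made up for illustration.

```python
import math


def bessel_j1(x, steps=200):
    """J1(x) via (1/pi) * integral_0^pi cos(t - x sin t) dt, midpoint rule."""
    h = math.pi / steps
    total = sum(math.cos((k + 0.5) * h - x * math.sin((k + 0.5) * h))
                for k in range(steps))
    return total * h / math.pi


def airy(x):
    """Normalized Airy intensity (2 J1(x) / x)^2, where x = pi * D * angle / lambda."""
    if abs(x) < 1e-9:
        return 1.0
    return (2.0 * bessel_j1(x) / x) ** 2


def midpoint_to_peak(separation, samples):
    """Midpoint intensity divided by the brightest sample for two equal sources.

    `separation` and the sample positions are angles in units of lambda / D.
    `samples` must be odd so that one sample sits exactly on the midpoint.
    """
    if samples % 2 == 0:
        raise ValueError("samples must be odd")
    half = 0.5 * separation
    positions = [-2.0 + 4.0 * i / (samples - 1) for i in range(samples)]
    profile = [airy(math.pi * (u - half)) + airy(math.pi * (u + half))
               for u in positions]
    return profile[samples // 2] / max(profile)


for samples in (101, 501):
    at_rayleigh = midpoint_to_peak(1.22, samples)  # sources 1.22 lambda/D apart
    too_close = midpoint_to_peak(0.61, samples)    # half that separation
    print(f"{samples:4d} samples: Rayleigh {at_rayleigh:.3f}, half of it {too_close:.3f}")
```

At the Rayleigh separation both grids show a dip to roughly three quarters of the peak between the two sources. At half that separation the ratio is 1, meaning there is a single merged peak, and the five-times finer grid does not bring the dip back.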

* Putting aside superresolution tricks, which usually have restrictions or requirements.
