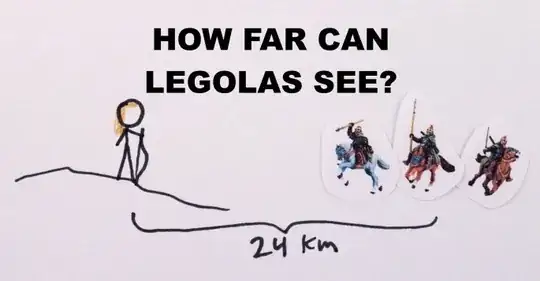

Take the following idealized situation:

- The person of interest is standing perfectly still and is of a fixed, homogeneous color.

- The background (grass) is of a fixed, homogeneous color, significantly different from the person's.

- Legolas knows the proportions of people, and the colors of the person of interest and of the background.

- Legolas knows the point spread function (PSF) of his optical system (including his photoreceptors).

- Legolas knows the exact position and orientation of his eyes.

- There is essentially zero noise in his photoreceptors, and he has access to the output of each one.

From this, Legolas can calculate the exact response across his retina for any position and (angular) size of the person of interest, including any diffraction effects. He can then compare each such template to the actual sensor data and pick the one that best matches. Note that this includes matching the manner in which the response rolls off, and any diffraction fringes, around the border of the imaged person (I'm assuming that the sensor cells in his eyes over-sample the PSF of the optical parts of his eyes).

(To put it more simply: given the PSF and a black rectangle on a white background, we can obviously compute the exact response of the optical system. I'm just saying that Legolas can do the same for his eyes and for any hypothetical size and color of a person.)
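A minimal 1-D sketch of this template matching, under assumptions that are not in the original: the PSF is taken to be a Gaussian of known width `sigma` (a stand-in for whatever PSF Legolas actually knows), the "person" is a uniform box on a dark background, the sensor data are noiseless, and the hypotheses form an exhaustive grid over left-edge position and width. "Best match" is taken as least squares, which is what a Bayesian or maximum-likelihood fit reduces to under Gaussian noise with a flat prior. The helper names `blurred_box` and `best_template` are hypothetical.

```python
import math
import numpy as np

erf = np.vectorize(math.erf)   # element-wise error function from the standard library

def blurred_box(y, x0, width, sigma):
    """Noiseless response at sensor positions y to a unit box on (x0, x0 + width),
    seen through a Gaussian PSF of standard deviation sigma (an assumed PSF)."""
    def cdf(u):  # cumulative integral of the Gaussian PSF
        return 0.5 * (1.0 + erf(u / (sigma * math.sqrt(2.0))))
    return cdf(y - x0) - cdf(y - x0 - width)

def best_template(samples, y, sigma, x0_grid, width_grid):
    """Exhaustive search: the (x0, width) hypothesis whose template has the smallest
    sum of squared residuals against the sensor samples."""
    best, best_err = None, np.inf
    for x0 in x0_grid:
        for width in width_grid:
            err = np.sum((blurred_box(y, x0, width, sigma) - samples) ** 2)
            if err < best_err:
                best, best_err = (x0, width), err
    return best

sigma = 1.0                                  # PSF width (arbitrary units)
y = np.arange(-10.0, 25.0, 0.5)              # sensor positions, sampled finer than the PSF
true_x0, true_width = 3.0, 10.03             # a 0.03 width offset, far below sigma
samples = blurred_box(y, true_x0, true_width, sigma)   # noiseless "sensor data"

x0_grid = np.round(np.arange(2.90, 3.105, 0.01), 2)     # hypotheses for the left edge
width_grid = np.round(np.arange(9.90, 10.105, 0.01), 2) # hypotheses for the width
x0_hat, width_hat = best_template(samples, y, sigma, x0_grid, width_grid)
print(f"recovered x0 = {x0_hat:.2f}, width = {width_hat:.2f}")
```

With noiseless data the best template lands on the true width of 10.03, even though the PSF is more than thirty times wider than the 0.03 difference being resolved. The achievable precision is then set by how finely the hypothesis grid is spaced; adding noise to `samples` is the quickest way to see it degrade.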

The main limitations on this are:

- How many different template hypotheses he considers.

- Any noise or turbulence that distorts his eyes' response away from the calculable ideal response (noise can be alleviated by longer integration time).

- His ability to control the position and orientation of his eyes. For scale, $2m$ at $24km$ subtends only $\approx 8\times10^{-5}$ radians, which maps to a displacement of $\approx 0.8\mu m$ for a spot on the surface of his eye (assuming a $1cm$ eyeball radius).
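The small-angle arithmetic in the last item can be checked directly, using only the figures quoted there ($2m$, $24km$, and the assumed $1cm$ eyeball radius):

```python
import math

height_m = 2.0           # size quoted in the text
distance_m = 24_000.0    # 24 km
eye_radius_m = 0.01      # assumed 1 cm eyeball radius, as in the text

angle_rad = height_m / distance_m          # small-angle approximation
displacement_m = eye_radius_m * angle_rad  # arc length swept on the eye's surface

print(f"angle: {angle_rad:.2e} rad ({math.degrees(angle_rad):.4f} deg)")
print(f"surface displacement: {displacement_m * 1e6:.2f} um")
```

This gives an angle of about $8.3\times10^{-5}$ rad (roughly $0.005°$) and a surface displacement of about $0.83\mu m$, consistent with the $\approx 0.8\mu m$ figure.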

Essentially, I'm sketching out a Bayesian type of super-resolution technique as alluded to on the Super-resolution Wikipedia page.

To avoid the problem of blending the person with his mount, let's assume that Legolas observed the riders while they were dismounted, taking a break maybe. He could tell that the leader is tall just by comparing the relative sizes of different people (assuming that they were milling around at separations much greater than his eyes' resolution).

The actual scene in the book has him discerning all this while the riders were mounted and moving. At this stage I just have to say "It's a book", but the idea that the diffraction limit is irrelevant when you know a lot about your optical system and what you are looking at is worth noting.

As an aside, human rod cells are $O(3-5\mu m)$ across; this imposes a low-pass filtering on top of any diffraction effects from the pupil.
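The low-pass effect of a finite photoreceptor can be sketched by convolving an optical PSF with a box the width of one cell. This is a minimal sketch with assumed numbers: the optical PSF is approximated by a Gaussian of hypothetical width `sigma_optics = 2.0` µm (a real pupil gives an Airy pattern), and the cell is modelled as a uniform 4 µm box, inside the range quoted above. Because variances add under convolution, the effective RMS width should come out close to $\sqrt{\sigma_\text{optics}^2 + a^2/12}$, where $a$ is the cell width.

```python
import numpy as np

step = 0.01                                   # grid spacing in micrometres
x = np.arange(-20.0, 20.0 + step / 2, step)   # odd-length grid centred on 0

sigma_optics = 2.0   # hypothetical optical PSF width (um); Gaussian stand-in for an Airy pattern
aperture = 4.0       # assumed cell width (um), inside the 3-5 um range quoted above

optical_psf = np.exp(-0.5 * (x / sigma_optics) ** 2)
optical_psf /= optical_psf.sum()
cell = (np.abs(x) <= aperture / 2).astype(float)   # a cell integrates light over its width
cell /= cell.sum()

# What a sensor output actually sees: optical blur followed by integration over the cell.
effective_psf = np.convolve(optical_psf, cell, mode="same")

def rms_width(kernel):
    """Standard deviation of a kernel sampled on the grid x (normalised here)."""
    kernel = kernel / kernel.sum()
    mean = np.sum(x * kernel)
    return np.sqrt(np.sum((x - mean) ** 2 * kernel))

predicted = np.sqrt(sigma_optics**2 + aperture**2 / 12)  # variances add under convolution
print(f"optics only:   {rms_width(optical_psf):.3f} um")
print(f"optics + cell: {rms_width(effective_psf):.3f} um (predicted {predicted:.3f} um)")
```

With these numbers the cell widens the RMS blur from about 2.0 µm to about 2.3 µm; a wider cell, or a narrower optical PSF, makes the cell's share of the total blur larger.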

A Toy Model Illustration of a Similar Problem

Let $B(x; x_0, dx) = 1$ for $x_0 < x < x_0+dx$ and zero otherwise. Convolve $B(x; x_0, dx_1)$ and $B(x; x_0, dx_2)$, with $dx_2>dx_1$, with some known PSF to produce $I_1(y)$ and $I_2(y)$; assume that the width of this PSF is much less than either $dx_1$ or $dx_2$ but wide compared to $dx_2-dx_1$. (In my conception of this model, $I(y)$ is the response of a single retinal cell as a function of the angular position of the eye, $y$.) In other words, take two images of different-sized blocks, aligned so that the left edges of the two blocks are at the same place. If you then ask where the right edges of the images cross a selected threshold value $T$, i.e. $I_1(y_1)=I_2(y_2)=T$, you'll find that $y_2-y_1=dx_2-dx_1$, independent of the width of the PSF (given that it is much narrower than either block). To see why: with the PSF normalised to unit area and $C(u)=\int_{-\infty}^{u}\mathrm{PSF}(u')\,du'$ its cumulative integral, the image is $I(y)=C(y-x_0)-C(y-x_0-dx)$. Near the right edge the first term is $\approx 1$, so $I(y)=T$ at $y=x_0+dx+C^{-1}(1-T)$, and the PSF-dependent offset $C^{-1}(1-T)$ cancels in $y_2-y_1$.

A reason you often want sharp edges is that, when noise is present, the values of $y_1, y_2$ vary by an amount inversely proportional to the slope of the image at the threshold; but in the absence of noise, the theoretical ability to measure size differences is independent of the optical resolution.
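A minimal numerical check of the toy model, assuming a Gaussian PSF (the argument holds for any normalised PSF; the Gaussian is just a convenient choice), blocks of width $dx_1 = 10$ and $dx_2 = 10.05$, and $T = 0.5$. The PSF widths tried are all much smaller than the blocks but much larger than $dx_2 - dx_1 = 0.05$. The helper names `box`, `blur`, and `falling_crossing` are hypothetical.

```python
import numpy as np

step = 0.002                        # sampling step shared by x and y
y = np.arange(-5.0, 20.0, step)     # position axis (eye angle in the toy model)
x0, dx1, dx2 = 0.0, 10.0, 10.05     # two blocks sharing the left edge x0
T = 0.5                             # threshold value

def box(x, start, width):
    """B(x; start, width): 1 for start < x < start + width, 0 otherwise."""
    return ((x > start) & (x < start + width)).astype(float)

def blur(signal, sigma):
    """Convolve with a normalised Gaussian PSF (stand-in for 'some known PSF')."""
    half = int(round(6 * sigma / step))
    offsets = np.arange(-half, half + 1) * step   # odd length keeps the kernel centred
    psf = np.exp(-0.5 * (offsets / sigma) ** 2)
    return np.convolve(signal, psf / psf.sum(), mode="same")

def falling_crossing(intensity, level):
    """y at which the right (falling) edge crosses `level`, by linear interpolation."""
    i = np.nonzero((intensity[:-1] >= level) & (intensity[1:] < level))[0][-1]
    f0, f1 = intensity[i], intensity[i + 1]
    return y[i] + (f0 - level) / (f0 - f1) * (y[i + 1] - y[i])

results = {}
for sigma in (0.2, 0.5, 1.0):       # all << dx1, dx2 and all >> dx2 - dx1
    i1 = blur(box(y, x0, dx1), sigma)
    i2 = blur(box(y, x0, dx2), sigma)
    y1, y2 = falling_crossing(i1, T), falling_crossing(i2, T)
    # Edge slope at the crossing: noise in I shifts the crossing by roughly noise / slope.
    slope = abs(np.gradient(i1, step)[np.argmin(np.abs(y - y1))])
    results[sigma] = (y2 - y1, slope)
    print(f"sigma={sigma}: y2 - y1 = {y2 - y1:.4f}, edge slope = {slope:.3f}")
```

The crossing difference should stay at about 0.05 for every PSF width, while the edge slope falls as $1/\sigma$ (for a Gaussian at $T = 0.5$ it is $1/(\sigma\sqrt{2\pi})$). That falling slope is why noise of a given size shifts the crossings further when the optics are blurrier.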

Note: in comparing this toy model to the Legolas problem, one can validly object that the PSF is not much smaller than the imaged heights of the people. But the model does serve to illustrate the general point.