I'm trying to compute (numerically) the matrices of some simple quantum optical operations, which in principle are unitary. However, in my case they are unitary in an infinite-dimensional space, so I have to truncate them. The result is not necessarily unitary anymore, but if all the entries are correct up to the size that I choose, I'm happy.

So I compute the generator, truncate it to the size of my liking, and then exponentiate it, right? Nope, it doesn't work that way: the entries can actually be very wrong. In some cases they are almost correct; in others they are completely off.

Example 1: the beam splitter $\exp[i\theta(a^\dagger b + ab^\dagger)]$.

- compute $a$ and $a^\dagger$ truncated to dimension (say) $m$;

- combine them with the Kronecker product to build the two-mode generator $a^\dagger b + ab^\dagger$;

- exponentiate.
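The three steps above can be sketched with NumPy/SciPy as follows. This is a minimal illustration, assuming `scipy.linalg.expm` for the matrix exponential; `annihilation` and `naive_beam_splitter` are illustrative names, and mode $a$ is taken as the left Kronecker factor, so $|n_a,n_b\rangle$ has index $n_a d + n_b$.

```python
import numpy as np
from scipy.linalg import expm

def annihilation(d):
    """Truncated annihilation operator on Fock states |0>, ..., |d-1>."""
    return np.diag(np.sqrt(np.arange(1, d)), k=1)

def naive_beam_splitter(theta, d):
    """exp[i*theta*(a^dag b + a b^dag)] with both modes truncated to d levels."""
    a = annihilation(d)
    I = np.eye(d)
    A = np.kron(a, I)                       # a acts on the first (left) mode
    B = np.kron(I, a)                       # b acts on the second mode
    G = A.conj().T @ B + A @ B.conj().T     # a^dag b + a b^dag, still Hermitian
    return expm(1j * theta * G)

U = naive_beam_splitter(theta=0.3, d=4)
print(U.shape)  # (16, 16)
```

Because the truncated $a^\dagger$ is exactly the conjugate transpose of the truncated $a$, the truncated generator is still Hermitian and `U` is unitary to machine precision; what goes wrong is that some of its entries differ from those of the true operator.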

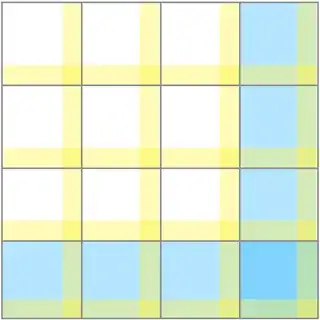

result: the entries are almost right, except for the last row and column of both spaces as in this figure (for $m=4$):

The only correct parts are the ones in the white spaces. In this case the solution is to truncate $a$ and $a^\dagger$ to size $m+1$ and then throw away the wrong rows/columns.
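The crop-after-padding workaround can be sketched like this, under the same NumPy/SciPy assumptions; `beam_splitter_cropped` and its `pad` parameter are hypothetical names, with `pad=1` corresponding to the $m+1$ recipe. Comparing against a much larger `pad` shows how much the kept block still moves.

```python
import numpy as np
from scipy.linalg import expm

def annihilation(d):
    """Truncated annihilation operator on Fock states |0>, ..., |d-1>."""
    return np.diag(np.sqrt(np.arange(1, d)), k=1)

def beam_splitter_cropped(theta, m, pad=1):
    """Truncate each mode to d = m + pad levels, exponentiate, keep n_a, n_b < m."""
    d = m + pad
    a = annihilation(d)
    I = np.eye(d)
    A, B = np.kron(a, I), np.kron(I, a)                  # mode a is the left factor
    U = expm(1j * theta * (A.conj().T @ B + A @ B.conj().T))
    keep = [na * d + nb for na in range(m) for nb in range(m)]   # index of |n_a, n_b>
    return U[np.ix_(keep, keep)]

U_recipe = beam_splitter_cropped(0.3, m=4)               # the m + 1 recipe
U_large = beam_splitter_cropped(0.3, m=4, pad=20)        # much larger truncation
print(np.abs(U_recipe - U_large).max())
```

For this particular generator the cropped block becomes exact (up to rounding) once `pad >= m - 1`, i.e. $d \ge 2m-1$: $a^\dagger b + ab^\dagger$ conserves $N = n_a + n_b$, the kept states have $N \le 2m-2$, and every state with $N \le d-1$ lies inside the truncated space. With `pad=1` the remaining error is small for small $\theta$ but not zero.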

Example 2: the single-mode squeezer $\exp[\frac{1}{2}(z^*a^2-z{a^\dagger}^2)]$

Here the result is a mess. As I increase the size of $a$, the entries of the final result (which correctly show a checkerboard pattern, since the generator only couples Fock states of equal parity) seem to converge to their correct values, but to get even the first (say) $4\times4$ block roughly right I have to truncate $a$ to $m\approx 50$ and then crop the exponentiated matrix to $4\times4$.
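One way to see this convergence is to compare against entries known in closed form. The sketch below assumes real $z = r$ and uses the standard squeezed-vacuum expansion $S(r)|0\rangle = (\cosh r)^{-1/2}\sum_n (-\tanh r)^n \frac{\sqrt{(2n)!}}{2^n n!}|2n\rangle$ (an outside result, not from the post), which gives $\langle 0|S|0\rangle = 1/\sqrt{\cosh r}$ and $\langle 2|S|0\rangle = -\tanh r/\sqrt{2\cosh r}$. The value $r = 0.5$ and the name `naive_squeezer` are illustrative; how large $M$ must be depends strongly on $|z|$, since the exact amplitudes decay roughly like $(\tanh r)^n$.

```python
import numpy as np
from scipy.linalg import expm

def annihilation(d):
    """Truncated annihilation operator on Fock states |0>, ..., |d-1>."""
    return np.diag(np.sqrt(np.arange(1, d)), k=1)

def naive_squeezer(z, M):
    """exp[(z* a^2 - z a^dag^2) / 2] with a truncated to M levels."""
    a = annihilation(M)
    ad = a.conj().T
    return expm(0.5 * (np.conj(z) * a @ a - z * ad @ ad))

r = 0.5                                                  # real squeezing z = r
exact_00 = 1 / np.sqrt(np.cosh(r))                       # <0|S|0>
exact_20 = -np.tanh(r) / np.sqrt(2 * np.cosh(r))         # <2|S|0>

for M in (6, 10, 20, 40, 80):
    S = naive_squeezer(r, M)[:4, :4]                     # crop to 4 x 4
    print(M, abs(S[0, 0] - exact_00), abs(S[2, 0] - exact_20))
```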

Am I doing this the wrong way? Eventually I would like to produce the matrices of rather non-linear operations, where $a$ and $a^\dagger$ are raised to large powers. How do I know if I'm doing it right?
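A generic, purely heuristic check is to keep doubling the truncation until the cropped block stops changing. The sketch below assumes a single-mode generator passed in as a function of the truncated $a$; `converged_block`, `tol`, and `M_max` are hypothetical names. One related fact helps with high powers: a normal-ordered product of truncated matrices, $(a^\dagger)^p a^q$, equals the truncation of the exact operator, whereas anti-normal-ordered products such as $a a^\dagger$ come out wrong in the last rows and columns.

```python
import numpy as np
from scipy.linalg import expm

def annihilation(d):
    """Truncated annihilation operator on Fock states |0>, ..., |d-1>."""
    return np.diag(np.sqrt(np.arange(1, d)), k=1)

def converged_block(generator, m, M0=None, tol=1e-10, M_max=1024):
    """Return the top-left m x m block of expm(generator(a)) once it stops changing.

    The truncation M is doubled until two successive blocks agree to within tol.
    Agreement suggests, but does not prove, that the block has converged.
    """
    M = M0 if M0 is not None else 2 * m
    previous = expm(generator(annihilation(M)))[:m, :m]
    while M < M_max:
        M *= 2
        current = expm(generator(annihilation(M)))[:m, :m]
        if np.abs(current - previous).max() < tol:
            return current, M
        previous = current
    raise RuntimeError(f"block not converged to {tol} by M = {M}")

# Example: single-mode squeezer with z = 0.5, generator (z* a^2 - z a^dag^2) / 2.
z = 0.5

def squeeze_generator(a):
    ad = a.conj().T
    return 0.5 * (np.conj(z) * a @ a - z * ad @ ad)

block, M_used = converged_block(squeeze_generator, m=4)
print(M_used)
print(np.round(block, 6))
```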

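UPDATE: In the first case (the beam splitter) the unitary is in $SU(2)$, which is compact and admits finite-dimensional irreps: here they are the subspaces of fixed total photon number $N = n_a + n_b$, each of dimension $N+1$. So I can exponentiate the generator within each irrep separately and assemble the truncated unitary from those blocks.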
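A sketch of this multiplet construction, again assuming NumPy/SciPy; `beam_splitter_exact` is an illustrative name, and the basis ordering $|n_a,n_b\rangle \mapsto n_a m + n_b$ is an assumption chosen to match `np.kron(a, I)`. Each fixed-$N$ block is tridiagonal in the basis $|k, N-k\rangle$, with $\langle k+1, N-k-1|\,a^\dagger b\,|k, N-k\rangle = \sqrt{k+1}\sqrt{N-k}$.

```python
import numpy as np
from scipy.linalg import expm

def beam_splitter_exact(theta, m):
    """Exact block of exp[i*theta*(a^dag b + a b^dag)] on states with n_a, n_b < m."""
    U = np.zeros((m * m, m * m), dtype=complex)
    for N in range(2 * m - 1):                   # largest N in the window is 2(m - 1)
        k = np.arange(N + 1)                     # basis |k, N - k>, k = 0..N
        off = np.sqrt(k[:-1] + 1) * np.sqrt(N - k[:-1])
        G = np.diag(off, 1) + np.diag(off, -1)   # generator restricted to fixed N
        block = expm(1j * theta * G)
        # Copy only entries whose states lie inside the window n_a, n_b < m.
        inside = [j for j in k if j < m and N - j < m]
        for i in inside:
            for j in inside:
                U[i * m + (N - i), j * m + (N - j)] = block[i, j]
    return U

U_exact = beam_splitter_exact(0.7, m=4)
print(U_exact.shape)  # (16, 16)
```

As a cross-check, cropping the naive Kronecker-product result with each mode truncated to $2m-1$ levels gives the same block, because every fixed-$N$ subspace with $N \le 2m-2$ then fits completely inside the truncated space.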

In the second case (the squeezer) the unitary is in $SU(1,1)$, which is non-compact, and in fact the Fock space splits into two infinite-dimensional irreps: one spanned by the even and one by the odd Fock states (the Casimir takes the same value, $-3/16$, on both, so it is parity rather than the Casimir that separates them). Likewise, for the two-mode squeezer the Casimir eigenspaces, labeled by $|n_a-n_b|$, are each infinite-dimensional (albeit countably so). So I can't use the multiplet method in this case.
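A quick numerical check of the single-mode statement, assuming the standard realization $K_+ = {a^\dagger}^2/2$, $K_- = a^2/2$, $K_0 = (a^\dagger a + 1/2)/2$, so that the squeezer is $\exp(z^*K_- - zK_+)$. The Casimir $C = K_0^2 - (K_+K_- + K_-K_+)/2$ comes out as the constant $-3/16$ on every Fock state, while $K_\pm$ only connect states of equal parity; the even and odd sectors are the two irreps, with lowest $K_0$ eigenvalue (Bargmann index) $k = 1/4$ and $k = 3/4$, and $k(k-1) = -3/16$ for both. Only rows and columns unaffected by truncation are checked.

```python
import numpy as np

def annihilation(d):
    """Truncated annihilation operator on Fock states |0>, ..., |d-1>."""
    return np.diag(np.sqrt(np.arange(1, d)), k=1)

M = 30                                    # truncation size
a = annihilation(M)
ad = a.conj().T
n = ad @ a                                # number operator (exact after truncation)
Kp = ad @ ad / 2                          # K_+ = a^dag^2 / 2
Km = a @ a / 2                            # K_- = a^2 / 2
K0 = (n + 0.5 * np.eye(M)) / 2            # K_0 = (a^dag a + 1/2) / 2
C = K0 @ K0 - (Kp @ Km + Km @ Kp) / 2     # SU(1,1) Casimir

# Km @ Kp (anti-normal ordered) is wrong in the last two rows/columns after
# truncation, so only the block below them is compared.
low = slice(0, M - 2)
print(np.allclose(C[low, low], -3 / 16 * np.eye(M - 2)))   # True
print(K0[0, 0], K0[1, 1])                 # lowest K_0 values in even / odd sectors

# K_+ never connects Fock states of different parity.
parity = np.arange(M) % 2
print(np.allclose(Kp[parity[:, None] != parity[None, :]], 0))  # True
```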