Newton's law of universal gravitation tells us that the potential energy of an object of mass $m$ in the gravitational field of a mass $M$ is $$U ~=~ -\frac{GMm}{r}.\tag{1}$$

The experimentally verified near-Earth gravitational potential energy, for an object at height $h$ above the surface, is $$U ~=~ mgh.\tag{2}$$

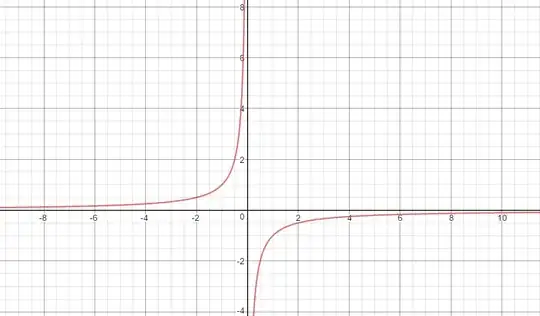

The near-Earth expression should be an approximation to the general potential energy when $r\approx r_{\text{Earth}}$, but the problem I'm having is that the two seem to scale differently with distance. $(1)$ scales as $\frac 1r$, so the greater the distance from the Earth, the less potential energy an object should have. $(2)$ scales linearly with height, so the greater the distance from the Earth, the more potential energy an object should have.

How is this reconcilable?
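
One way to probe this, offered only as a sketch, is to expand $(1)$ to first order about the Earth's surface. The assumptions here are not stated above: $r = R + h$ with $R = r_{\text{Earth}}$, $h \ll R$, and $g = GM/R^2$. Under those assumptions, $$U(R+h) ~=~ -\frac{GMm}{R}\left(1+\frac{h}{R}\right)^{-1} ~\approx~ -\frac{GMm}{R} + \frac{GMm}{R^2}\,h ~=~ -\frac{GMm}{R} + mgh.\tag{3}$$

If this expansion is the right way to compare them, $(2)$ would be $(1)$ measured relative to its value at the surface, with the constant $-\frac{GMm}{R}$ dropped. Note also that $-\frac{GMm}{r}$ becomes less negative as $r$ grows, so in this picture both expressions increase with height, and only the magnitude of $(1)$ decreases.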