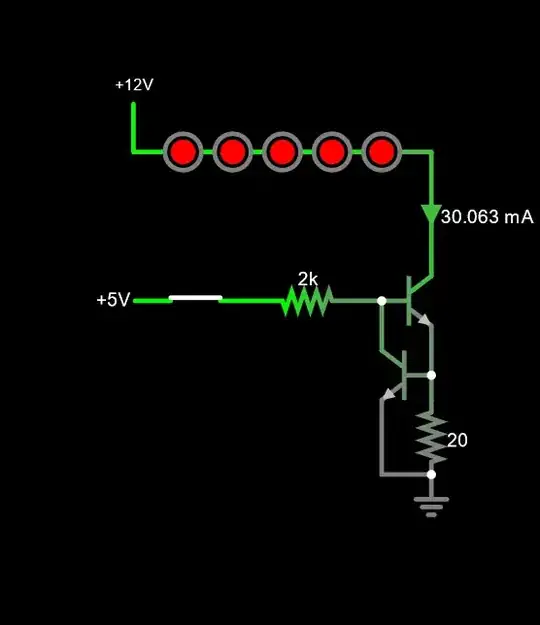

I'm facing the following problem: I need to drive 5 LEDs (2 V, 30 mA each) in series from a 12 V DC power supply, switched by a MOSFET (a 2N7000G in this case). To size the series resistor, I used this calculation:

12 V (power supply) − 5 × 2 V (series LEDs) = 2 V left for the resistor and MOSFET

From the datasheet, RDS(on) is 6 Ω, so the voltage drop across the MOSFET is 6 Ω × 0.03 A (30 mA) = 0.18 V.

2 V (across resistor and MOSFET) − 0.18 V (across MOSFET) = 1.82 V across the resistor

U = I × R → R = U / I → R = 1.82 V / 0.03 A ≈ 60.7 Ω, rounded to 60 Ω
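The same chain of steps can be written as a small script, which makes it easy to see where each volt goes and to keep the current in amps (30 mA = 0.03 A) throughout. This is only a sketch that mirrors the hand calculation above; it assumes every LED has a fixed 2 V forward drop and that the MOSFET behaves like a fixed 6 Ω resistor (the RDS(on) figure quoted above). The function name `series_resistor` is just an illustrative choice.

```python
# Sketch of the series-resistor calculation from the post.
# Assumptions: each LED has a constant 2 V forward drop, and the MOSFET
# is modelled as a fixed 6 ohm R_DS(on) when switched on.

SUPPLY_V = 12.0   # power supply voltage (V)
LED_VF = 2.0      # forward voltage per LED (V), assumed constant
LED_COUNT = 5     # number of LEDs in series
TARGET_I = 0.030  # desired LED current (A), i.e. 30 mA
RDS_ON = 6.0      # MOSFET on-resistance (ohm), from the datasheet


def series_resistor(supply_v, vf, count, current, rds_on):
    """Return (headroom, mosfet_drop, resistor_drop, resistor_ohms)."""
    headroom = supply_v - count * vf         # voltage left for R + MOSFET
    mosfet_drop = rds_on * current           # V = I * R_DS(on)
    resistor_drop = headroom - mosfet_drop   # what the resistor must drop
    resistor = resistor_drop / current       # Ohm's law: R = U / I
    return headroom, mosfet_drop, resistor_drop, resistor


headroom, v_fet, v_res, r = series_resistor(
    SUPPLY_V, LED_VF, LED_COUNT, TARGET_I, RDS_ON
)
print(f"headroom      = {headroom:.2f} V")
print(f"MOSFET drop   = {v_fet:.2f} V")
print(f"resistor drop = {v_res:.2f} V")
print(f"resistor      = {r:.1f} ohm")
```

Under these assumptions it prints a 2.00 V headroom, a 0.18 V MOSFET drop, a 1.82 V resistor drop and a resistor of about 60.7 Ω, matching the numbers above.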

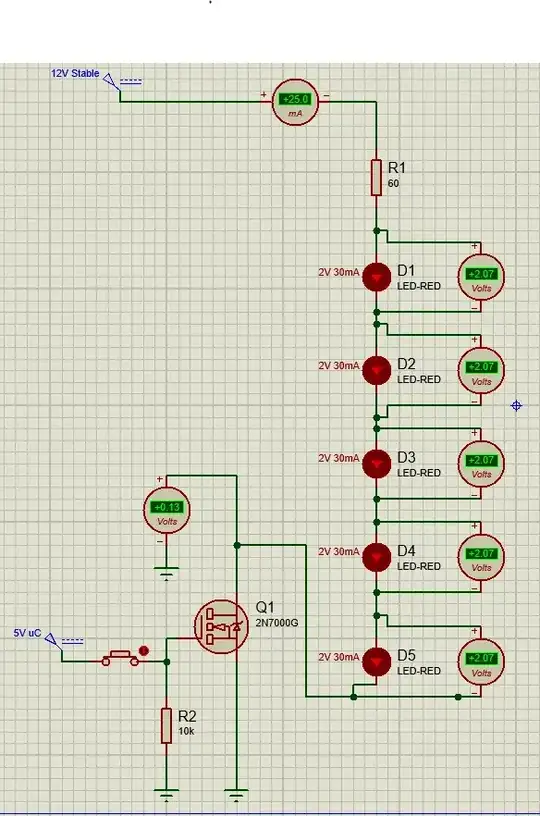

With a 60 Ω resistor in the circuit, the simulation shows only 25 mA through the LEDs, even though by my calculation 60 Ω should give 30 mA. The voltage across each LED also rises to 2.07 V (the simulated LEDs are set to 2 V and 30 mA; I double-checked all of them).
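One way to check whether the simulated numbers are self-consistent is to run the calculation in reverse: fix the resistor at 60 Ω and compute the loop current for a given LED forward voltage, I = (12 V − 5 × Vf) / (R + RDS(on)). The sketch below again assumes the MOSFET acts as a fixed 6 Ω resistor and each LED has a constant forward voltage; `led_current` is a hypothetical helper name, not part of any library.

```python
# Sketch: reverse the calculation to find the loop current (KVL) for a
# fixed series resistor. Assumes the MOSFET is a fixed 6 ohm R_DS(on)
# and every LED has the same constant forward voltage vf.

SUPPLY_V = 12.0   # power supply voltage (V)
LED_COUNT = 5     # LEDs in series
RDS_ON = 6.0      # MOSFET on-resistance (ohm)
R_SERIES = 60.0   # chosen series resistor (ohm)


def led_current(supply_v, vf, count, r_series, rds_on):
    """Series-string current: I = (Vs - n*Vf) / (R + R_DS(on))."""
    return (supply_v - count * vf) / (r_series + rds_on)


# Compare the datasheet-style 2.00 V with the 2.07 V seen in simulation.
for vf in (2.00, 2.07):
    i = led_current(SUPPLY_V, vf, LED_COUNT, R_SERIES, RDS_ON)
    print(f"Vf = {vf:.2f} V -> I = {i * 1000:.1f} mA")
```

With Vf = 2.00 V this gives about 30.3 mA, and with Vf = 2.07 V it gives 25.0 mA. Under these assumptions, the extra 0.07 V per LED (0.35 V across the whole string) is enough on its own to account for the 25 mA reported by the simulation.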

I suspect the extra 0.07 V per LED is what throws off my calculation, but why does this happen? What did I do wrong?