I'm reading about Invalid Action Masking in RL in order to use it in my PPO algorithm for a specific task. The problem is that in the explanations I have read (here, here and here), the invalid action is chosen based on the state of the environment. My question is: is it possible to determine the invalidity of an action based on the reward?

I want to make this clear with a simple example. Let's take the good old CartPole environment from OpenAI.

Let's increase the action space from two to three actions, so there are now three discrete actions: $a_{t} \in \{0, 1, 2\}$, where 0 means left, 1 means right and 2 means do nothing.

How do I determine which action, based on the state, should be masked out?
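For reference, the masking mechanism itself (independent of how the mask is decided) can be sketched as follows. This is a minimal pure-Python sketch, assuming the policy outputs one logit per action and that a boolean mask for the three CartPole actions has already been computed; the function names are hypothetical. Invalid actions get probability zero, and the log-probability that PPO uses in its ratio is taken from the masked distribution.

```python
import math
import random


def masked_action_probs(logits, mask):
    """Softmax over `logits` where actions with mask[i] == False get probability 0."""
    if len(logits) != len(mask):
        raise ValueError("logits and mask must have the same length")
    valid = [l for l, m in zip(logits, mask) if m]
    if not valid:
        raise ValueError("at least one action must be valid")
    top = max(valid)  # subtract the largest valid logit for numerical stability
    # Invalid actions behave as if their logit were -inf, i.e. weight 0.
    weights = [math.exp(l - top) if m else 0.0 for l, m in zip(logits, mask)]
    total = sum(weights)
    return [w / total for w in weights]


def sample_masked_action(logits, mask, rng=random):
    """Sample an action and return it with its log-probability under the masked policy."""
    probs = masked_action_probs(logits, mask)
    action = rng.choices(range(len(probs)), weights=probs, k=1)[0]
    return action, math.log(probs[action])


# Usage: actions 0 = left, 1 = right, 2 = do nothing; "do nothing" is masked out.
probs = masked_action_probs([0.5, 1.0, 2.0], [True, True, False])
print(probs[2])  # 0.0, even though action 2 has the largest logit
```

In a full PPO implementation, the same mask would have to be stored with each transition, so that the log-probabilities recomputed during the update come from the same masked distribution as during the rollout.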

#### UPDATE ####

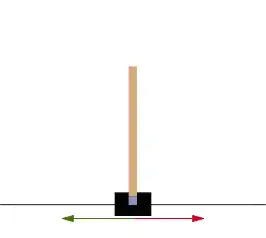

Thanks to @Neil-Slater for answering the question. Reading his answer, I realized that my question was misleading and didn't get to the point. The example I provided above is too simplified and doesn't reflect my actual question. So I will try again, this time using the picture I already posted in this question here a few days ago. In the picture, a crane moves from the start position to waypoint $a$ as its first move. Now, the agent could theoretically go directly to waypoint $d$, skipping all the waypoints in between. So I thought of forcing the agent to move vertically first by invalidating the actions along the $x$ and $y$ axes.
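The state-based masking idea for the crane could look roughly like the sketch below. It assumes a hypothetical action set of six unit moves (`+x`, `-x`, `+y`, `-y`, `+z`, `-z`), positions given as `(x, y, z)` tuples, and that waypoint $a$ lies directly above the start, so "move vertically first" means "only `z` moves are valid until the crane reaches the height of $a$". None of these names come from an existing library.

```python
# Hypothetical discrete action set: one unit step along one axis.
ACTIONS = ["+x", "-x", "+y", "-y", "+z", "-z"]


def crane_action_mask(position, waypoint_a, tol=1e-6):
    """Return one boolean per action in ACTIONS; True means the action is valid.

    Assumed rule: while the crane is not yet at the height of waypoint a,
    only vertical moves (+z, -z) are valid; after that, every move is valid.
    """
    z = position[2]
    z_a = waypoint_a[2]
    if abs(z - z_a) > tol:
        # Mask out all horizontal moves along the x and y axes.
        return [action.endswith("z") for action in ACTIONS]
    return [True] * len(ACTIONS)


# Usage: crane at the start (height 0), waypoint a at height 5.
print(crane_action_mask((0.0, 0.0, 0.0), (0.0, 0.0, 5.0)))
# [False, False, False, False, True, True]
```

Because the mask depends only on the current position, it is recomputed at every step, and once the crane reaches the height of $a$ all six moves become valid again. The returned list is the kind of boolean mask a masked-softmax policy head expects.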

Now, after reading the answer from @Neil-Slater, I can try to answer my question myself:

- The movement from start to $a$ does not require any invalidation of actions, because moving along the other axes is still permitted, just not wanted. So the actions should not be masked at all; the reward should be enough.

- The movement from start to $a$ needs to be forced by some kind of masking; otherwise the agent would not learn a suitable policy for the vertical movement.
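The first option (no masking, rely on the reward) could be sketched as a shaped reward. This is only an illustration, assuming hypothetical action labels (`+x`, `-x`, `+y`, `-y`, `+z`, `-z`), `(x, y, z)` positions, and an arbitrary penalty of `1.0`: horizontal moves stay valid, but they cost the agent reward while the crane is not yet at the height of waypoint $a$.

```python
# Hypothetical labels for the moves along the x and y axes.
HORIZONTAL_ACTIONS = {"+x", "-x", "+y", "-y"}


def shaped_reward(base_reward, position, action, waypoint_a, penalty=1.0, tol=1e-6):
    """Hypothetical reward shaping: horizontal moves remain valid, but they
    are penalised while the crane is not yet at the height of waypoint a."""
    off_target_height = abs(position[2] - waypoint_a[2]) > tol
    if off_target_height and action in HORIZONTAL_ACTIONS:
        return base_reward - penalty
    return base_reward


# Usage: a horizontal move at the start (height 0) is penalised.
print(shaped_reward(0.0, (0.0, 0.0, 0.0), "+x", (0.0, 0.0, 5.0)))  # -1.0
```

The design difference is that with a penalty the agent can still choose a horizontal move and has to learn to avoid it, whereas with a mask it can never select that move in the first place.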

I hope I have now expressed myself more clearly.