I have a question regarding the implementation of the REINFORCE algorithm.

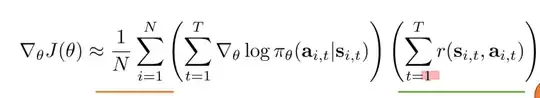

In the Berkeley course (see slide 9), the gradient is defined as

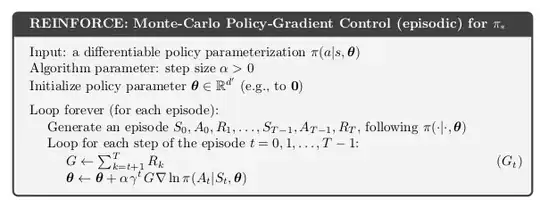

Note that the return is summed from step 1. In Sutton's book, however, the return is summed from t+1.

In fact, two popular implementations, PyTorch's official example (see line 71) and Tianshou's example (see line 226), are, if I understand correctly, computing

sum_t (∇log π(a_t | s_t) * R_t)

which seems to correspond to Sutton's definition rather than Berkeley's (which would become

(sum_t ∇log π(a_t | s_t)) * (sum_t r_t)

).
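To make the difference concrete, here is a minimal sketch of how I understand the two weightings. It only computes the scalar that multiplies ∇log π(a_t | s_t) at each step, not the gradient itself. The function names, the `gamma` parameter, and the example rewards are my own assumptions for illustration and are not taken from either implementation. I am also assuming that `rewards[t]` is the reward received after taking a_t, which is what Sutton's notation calls R_{t+1}.

```python
def rewards_to_go(rewards, gamma=1.0):
    """Return R_t = sum over k >= t of gamma^(k - t) * rewards[k], for every t."""
    returns = []
    running = 0.0
    # Walk backwards so each step reuses the return of the step after it.
    for r in reversed(rewards):
        running = r + gamma * running
        returns.append(running)
    returns.reverse()
    return returns


def per_step_weights(rewards, gamma=1.0, reward_to_go=True):
    """Weight that multiplies grad log pi(a_t | s_t) at each step t."""
    rtg = rewards_to_go(rewards, gamma)
    if reward_to_go:
        # Each step is weighted only by the rewards that follow it.
        return rtg
    # Full-trajectory form: every step gets the same total return.
    return [rtg[0]] * len(rewards)


if __name__ == "__main__":
    rewards = [1.0, 0.0, 2.0]  # made-up episode
    print(per_step_weights(rewards, reward_to_go=True))   # [3.0, 2.0, 2.0]
    print(per_step_weights(rewards, reward_to_go=False))  # [3.0, 3.0, 3.0]
```

With `reward_to_go=True` the weights differ per step, which matches sum_t (∇log π(a_t | s_t) * R_t). With `reward_to_go=False` every step shares the same weight, which matches (sum_t ∇log π(a_t | s_t)) * (sum_t r_t).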

I wonder which definition is the de facto standard in the community. Thank you.