I am trying to understand the neural network architecture used by Ho et al. in "Denoising Diffusion Probabilistic Models" (paper, source code). They include self-attention layers in the model, applying them to the feature maps output by previous convolutional layers (ResNet blocks). I understand self-attention in the context of sequential data, but here there is no sequence of vectors, just a single image to be processed by self-attention. I do not understand what the self-attention layer is doing to the feature maps.

Question: please could you explain the function of the self-attention layers in this CNN?

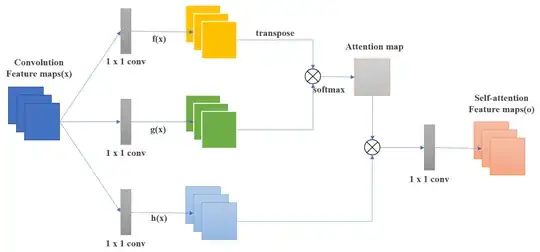

My guess is that the self-attention layer treats pixels like sequence elements, i.e. it uses keys and queries to find the pixels in the input feature map that are most relevant to the pixel at a given spatial location, then forms a relevance-weighted sum of these pixels (or rather of their corresponding values) as the output at that location. But this perspective doesn't seem to be consistent with the mechanics of the operation. With sequential data, the $Q$, $K$, $V$ matrices store the embeddings of the different sequence elements as different rows, and it seems that we are replacing these with $Q$, $K$, $V$ matrices derived from a single image. So when we calculate, e.g., $QK^\top$, we do not appear to be computing the dot-product similarity between the queries and keys of different elements (pixels), because a row of $Q$ or a row of $K$ does not seem to correspond to one pixel of the image (in contrast to sequential data, where each row corresponds to a specific sequence element).
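To make the guess concrete, here is a minimal NumPy sketch of the pixels-as-sequence-elements interpretation. It is only my assumption of how such a layer could work and is not taken from the DDPM source code: the function name `pixel_self_attention`, the projection matrices `Wq`, `Wk`, `Wv`, the single head, and the shapes are all made up for illustration. Under this reading the feature map is flattened so that each row of $Q$ and $K$ would correspond to one pixel, which is exactly the part I am unsure about.

```python
import numpy as np

def pixel_self_attention(x, Wq, Wk, Wv):
    """Single-head self-attention over the spatial positions of one feature map.

    x          : (H, W, C) feature map from the previous conv block
    Wq, Wk, Wv : (C, C) hypothetical projection matrices (illustrative only)
    """
    H, W, C = x.shape
    tokens = x.reshape(H * W, C)            # flatten: one row per pixel

    Q = tokens @ Wq                         # (H*W, C) queries, one per pixel
    K = tokens @ Wk                         # (H*W, C) keys, one per pixel
    V = tokens @ Wv                         # (H*W, C) values, one per pixel

    scores = Q @ K.T / np.sqrt(C)           # (H*W, H*W) pixel-to-pixel similarity
    scores -= scores.max(axis=-1, keepdims=True)   # for numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True) # softmax over all pixels

    out = weights @ V                       # relevance-weighted sum of values
    return out.reshape(H, W, C), weights    # restore the spatial layout

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 4, 8))              # toy 4x4 feature map with 8 channels
Wq, Wk, Wv = (rng.normal(size=(8, 8)) for _ in range(3))
out, weights = pixel_self_attention(x, Wq, Wk, Wv)
print(out.shape, weights.shape)
```

In this sketch the output has the same shape as the input feature map, and row `i` of `weights` says how much every other pixel contributes to the output at pixel `i`.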

Note: from reading the paper/source code, I think that the self-attention operation is working according to the diagram below, but please correct me if this isn't the case.

(Figure reproduced from this paper; a similar set-up can be found in the self-attention GAN paper.)
