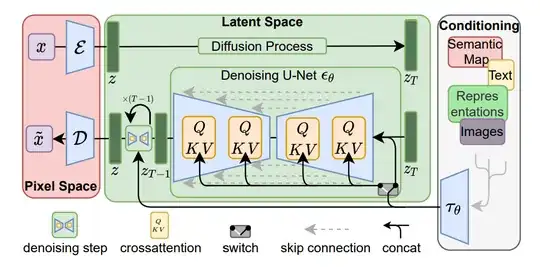

Consider the diagram above, taken from the paper "High-Resolution Image Synthesis with Latent Diffusion Models" by Robin Rombach et al.

I'm unsure what the component labeled "switch" in this diagram does. As I understand it, the conditioning information always reaches the denoising step, but it is routed either to the cross-attention module or to a concatenation with $z_{T}$, never to both at once. Is that correct? If so, why can't the conditioning information be passed to both components at the same time? Does the paper give a specific reason or mechanism for this design choice?
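To make the question concrete, here is how I picture the two routes. This is only a minimal NumPy sketch of my own understanding, not code from the paper: the function names, tensor shapes, and random projection matrices are all hypothetical, and the attention follows the standard scaled dot-product form with queries from the U-Net features and keys/values from the encoded conditioning.

```python
import numpy as np

rng = np.random.default_rng(0)

def concat_conditioning(z_t, cond_map):
    """Route 1 (assumed): stack a spatially aligned conditioning map
    onto the noisy latent along the channel axis."""
    # z_t: (C, H, W), cond_map: (C_cond, H, W)
    return np.concatenate([z_t, cond_map], axis=0)

def cross_attention(features, cond_tokens, w_q, w_k, w_v):
    """Route 2 (assumed): flattened U-Net features attend to
    conditioning tokens via scaled dot-product attention."""
    q = features @ w_q        # (N, d) queries from the U-Net features
    k = cond_tokens @ w_k     # (M, d) keys from the conditioning
    v = cond_tokens @ w_v     # (M, d) values from the conditioning
    scores = q @ k.T / np.sqrt(q.shape[-1])           # (N, M)
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)  # softmax over tokens
    return weights @ v        # (N, d)

# Hypothetical shapes, chosen only for illustration.
z_t = rng.normal(size=(4, 8, 8))        # noisy latent
cond_map = rng.normal(size=(3, 8, 8))   # e.g. a spatially aligned map
unet_input = concat_conditioning(z_t, cond_map)

features = rng.normal(size=(64, 16))    # 8*8 flattened positions, 16 channels
cond_tokens = rng.normal(size=(5, 32))  # e.g. 5 encoded tokens
w_q = rng.normal(size=(16, 16))
w_k = rng.normal(size=(32, 16))
w_v = rng.normal(size=(32, 16))
attended = cross_attention(features, cond_tokens, w_q, w_k, w_v)

print(unet_input.shape, attended.shape)
```

In this sketch the two routes are independent functions, and nothing in the code itself stops me from calling both in the same forward pass, which is exactly why the "switch" in the diagram confuses me.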