I am training a one-layer, unidirectional vanilla GRU on a next-item prediction task based on the last 10 interacted items. In my original experiment, where I trained on approx. 5.5M samples and validated on around 1M samples, I saw periodic fluctuations in my loss/accuracy curves. I have now run a few experiments with a far smaller dataset (train: 250K, val: 10K) and compared different learning rates. The effect is still there, and I would like to know what causes it, mainly to understand whether it is a problem or not.
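For concreteness, here is a minimal sketch of how (last 10 items, next item) training samples might be built from one user's chronological interaction history. The helper name `make_next_item_samples` is hypothetical, and it assumes histories with fewer than 11 items simply yield no samples instead of being padded; the actual preprocessing may differ.

```python
from typing import List, Tuple


def make_next_item_samples(history: List[int], window: int = 10) -> List[Tuple[List[int], int]]:
    """Hypothetical helper: turn one user's item history into (context, target) pairs."""
    samples = []
    for t in range(window, len(history)):
        # Input: the `window` items interacted with right before position t
        context = history[t - window:t]
        # Target: the item interacted with at position t
        samples.append((context, history[t]))
    return samples


# Usage with a toy history of 12 item IDs (100..111)
history = list(range(100, 112))
pairs = make_next_item_samples(history)
print(len(pairs))  # 2
```

A history of length n yields n - 10 samples, which is why the sample counts grow quickly with long histories.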

I evaluate after every 25% of an epoch, i.e. 4 times per epoch. I accumulate the loss and the hit ratio over the processed training batches; when it is time to evaluate, I compute the train metrics from these accumulated values and then compute the validation metrics on the entire validation set.
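The logging scheme described above can be sketched roughly as follows. All names are hypothetical, batches are represented as (mean loss, top-10 hits, batch size) tuples instead of real model output, and it assumes the accumulators are reset after every evaluation; the post does not say whether that is the case.

```python
from typing import List, Sequence, Tuple


def eval_points(num_batches: int, evals_per_epoch: int = 4) -> List[int]:
    """Zero-based batch indices after which an evaluation runs (25%, 50%, 75%, 100%).

    Assumes num_batches >= evals_per_epoch.
    """
    return [num_batches * k // evals_per_epoch - 1 for k in range(1, evals_per_epoch + 1)]


class RunningMetrics:
    """Sample-weighted averages of training loss and hit ratio since the last reset."""

    def __init__(self) -> None:
        self.reset()

    def reset(self) -> None:
        self.loss_sum = 0.0
        self.hits = 0
        self.samples = 0

    def update(self, mean_batch_loss: float, batch_hits: int, batch_size: int) -> None:
        # CrossEntropyLoss averages over the batch by default (reduction="mean"),
        # so re-weight by batch size to avoid over-weighting a smaller last batch.
        self.loss_sum += mean_batch_loss * batch_size
        self.hits += batch_hits
        self.samples += batch_size

    def compute(self) -> Tuple[float, float]:
        return self.loss_sum / self.samples, self.hits / self.samples


def train_metrics_per_epoch(
    batches: Sequence[Tuple[float, int, int]], evals_per_epoch: int = 4
) -> List[Tuple[float, float]]:
    """Return the (train loss, train HR@10) pairs logged at each evaluation point."""
    points = set(eval_points(len(batches), evals_per_epoch))
    metrics = RunningMetrics()
    logs = []
    for i, (loss, hits, size) in enumerate(batches):
        metrics.update(loss, hits, size)
        if i in points:
            logs.append(metrics.compute())
            # Assumption: accumulators are cleared after each evaluation, so each
            # logged value covers only the batches since the previous evaluation.
            metrics.reset()
            # (validation on the full validation set would run here)
    return logs
```

Weighting by batch size instead of dividing by the number of batches only changes the result when batch sizes differ, e.g. when the final batch of an epoch is smaller.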

I am using Adam as the optimizer.

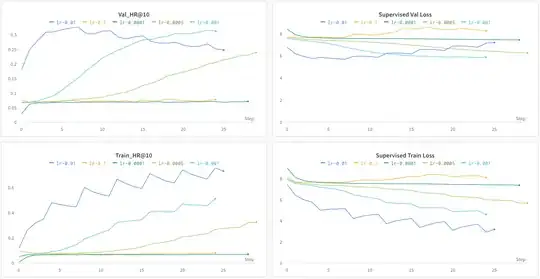

The plots show HR@10 (= accuracy of the top-10 predictions) and CrossEntropyLoss for train and val. One step corresponds to 25% of an epoch, so step 0 = 25% of the first epoch done, step 4 = 25% of the second epoch done. You can see that the phenomenon gets more extreme for higher learning rates. The spike in training HR/loss always happens at the first evaluation of an epoch (at 25%). It is paired with a drop in validation accuracy, which then improves over the next three steps (50%, 75%, 100% of the epoch) and falls again when the next spike in training accuracy occurs.
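As a reference for the metric in the plots, HR@10 can be computed as the fraction of samples whose true next item is among the 10 highest-scoring items. A minimal stdlib sketch, assuming one list of per-item scores per sample; `hit_ratio_at_k` is a hypothetical name:

```python
import heapq
from typing import Sequence


def hit_ratio_at_k(scores: Sequence[Sequence[float]], targets: Sequence[int], k: int = 10) -> float:
    """Fraction of samples whose target item index is among the k highest scores."""
    hits = 0
    for row, target in zip(scores, targets):
        # Indices of the k largest scores in this row
        top_k = heapq.nlargest(k, range(len(row)), key=row.__getitem__)
        hits += target in top_k
    return hits / len(targets)


# Two samples over 12 items; item i has score i, so the top 10 are items 2..11
scores = [list(range(12)), list(range(12))]
print(hit_ratio_at_k(scores, targets=[11, 0]))  # 0.5
```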

Things I have already ruled out:

- the data is fed in random order, so it is not the same order every epoch

- the gradient accidentally not being reset before calling `backward()`

- a wrong calculation of loss/accuracy due to dividing by the wrong number of batches
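One way to double-check the first point is to compare the sample order across epochs. A minimal stdlib sketch, assuming a single seeded RNG that draws a fresh permutation per epoch (`epoch_orders` is a hypothetical name; in PyTorch, a `DataLoader` with `shuffle=True` reshuffles at the start of every epoch):

```python
import random
from typing import List


def epoch_orders(num_samples: int, num_epochs: int, seed: int = 0) -> List[List[int]]:
    """Return one shuffled sample order per epoch, drawn from a single seeded RNG."""
    rng = random.Random(seed)
    orders = []
    for _ in range(num_epochs):
        order = list(range(num_samples))
        rng.shuffle(order)  # new permutation each epoch from the same RNG stream
        orders.append(order)
    return orders


orders = epoch_orders(1000, num_epochs=3)
# Consecutive epochs should see the samples in different orders
print(all(a != b for a, b in zip(orders, orders[1:])))
```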

I would appreciate any help understanding what is happening with the model. It still seems to work, but I don't understand what causes this behavior.