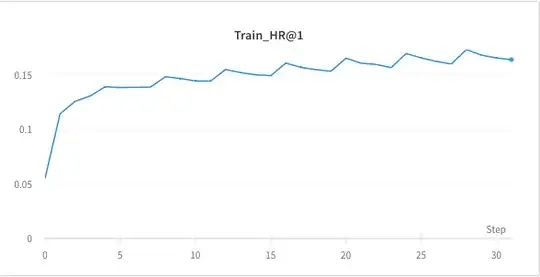

I am training a neural network (specifically a GRU-based architecture, but I don't think that is very relevant to the question). My loss curves, especially the training loss but also the validation loss, show periodic fluctuations, and I am trying to understand why (see picture). I evaluate the model 4 times per epoch (at 25%, 50%, 75%, and 100%) to monitor training progress. The dataset consists of roughly 5.5M sample sequences (the task is item recommendation). As you can see from the picture, each fluctuation has a period of exactly 4 evaluation steps (= one epoch). The metric in the picture is the Hit-Ratio for top-1 predictions, i.e. plain accuracy on the training set.
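To make the evaluation schedule concrete, here is a minimal sketch of how the four evaluation points per epoch can be derived from the number of batches. The helper name `eval_steps` and the batch size of 512 are assumptions for illustration only, not values from my actual setup.

```python
import math


def eval_steps(num_batches: int, evals_per_epoch: int = 4) -> list[int]:
    """Return the 1-based batch indices after which to evaluate within one epoch.

    With evals_per_epoch=4 these correspond to 25%, 50%, 75% and 100% of the epoch.
    """
    return [
        math.ceil(num_batches * k / evals_per_epoch)
        for k in range(1, evals_per_epoch + 1)
    ]


# Hypothetical numbers: ~5.5M sequences and an assumed batch size of 512.
num_samples = 5_500_000
batch_size = 512
num_batches = math.ceil(num_samples / batch_size)

steps = eval_steps(num_batches)
print(steps)
```

The last evaluation point always coincides with the end of the epoch, so a pattern that repeats every 4 evaluations lines up exactly with epoch boundaries.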

The only explanation I could think of for these periodic fluctuations is that the data is fed to the model in the same order each epoch, leading to similar learning signals at similar points in the epoch. However, I load the training data using PyTorch's DataLoader with `shuffle=True`, so this does not seem to be the reason.
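One way to double-check that the sample order really changes between epochs is a small test like the one below. This is a minimal sketch on a toy `TensorDataset` of indices, not my actual pipeline; the helper `epoch_order` and the dataset size are made up for illustration.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Toy dataset whose "samples" are just their own indices 0..999,
# so the order in which they are served is directly visible.
dataset = TensorDataset(torch.arange(1000))
loader = DataLoader(dataset, batch_size=100, shuffle=True)


def epoch_order(loader: DataLoader) -> list[int]:
    """Iterate over the loader once and return the sample indices in served order."""
    return torch.cat([batch[0] for batch in loader]).tolist()


first_epoch = epoch_order(loader)
second_epoch = epoch_order(loader)

# With shuffle=True, each pass over the loader should draw a new permutation.
print("same order in both epochs:", first_epoch == second_epoch)
print("first 10 indices, epoch 1:", first_epoch[:10])
print("first 10 indices, epoch 2:", second_epoch[:10])
```

If the two orders turned out to be identical on the real loader, identical ordering would be back on the table as an explanation; if they differ, it can be ruled out.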

Can you think of any other reasons for this behavior?