I have a question about section 3 of the paper *Salient Region Detection and Segmentation*, specifically about the convolution-like operation it performs. I previously asked a few questions about this paper and received an answer here. In that answer, its author (JVGD) mentions the following:

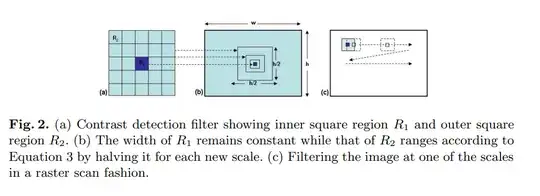

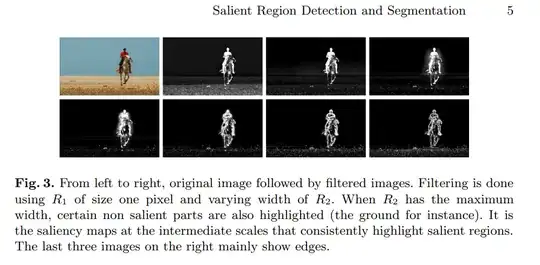

> So for each pixel in the image, you overlay $R_{1}$ on top of it and then $R_{2}$ on top of $R_{1}$, compute the distance $D$ between those two regions to get the saliency value of that pixel, and then slide the $R_{1}$ and $R_{2}$ regions across the image in a sliding-window manner (which is basically telling you to implement it with a convolution operation).
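To make sure I am reading the quoted procedure correctly, here is a minimal sketch of it under my own assumptions (none of these details are taken from the paper): $R_{1}$ is the single pixel itself, $R_{2}$ is a square window of odd side `w` centred on that pixel, and $D$ is the Euclidean distance between the mean colour vectors of the two regions. The window is only evaluated where $R_{2}$ fits entirely inside the image, which is the "no padding" reading my question is about. The function names are hypothetical.

```python
import numpy as np


def saliency_at(img: np.ndarray, i: int, j: int, w: int) -> float:
    """Saliency of pixel (i, j) as D(mean(R1), mean(R2)).

    Hypothetical assumptions, for illustration only:
      - img has shape (H, W, C), e.g. C = 3 colour channels
      - R1 is the single pixel (i, j)
      - R2 is the w x w window centred on (i, j), with w odd
      - D is the Euclidean distance between mean colour vectors
    """
    assert w % 2 == 1, "w must be odd so the window has a centre pixel"
    r = w // 2
    r1_mean = img[i, j].astype(float)                    # R1: one pixel
    r2 = img[i - r:i + r + 1, j - r:j + r + 1]           # R2: w x w window
    r2_mean = r2.reshape(-1, img.shape[2]).mean(axis=0)  # mean colour of R2
    return float(np.linalg.norm(r1_mean - r2_mean))


def saliency_map_valid(img: np.ndarray, w: int) -> np.ndarray:
    """Slide R1/R2 only over centres where R2 lies fully inside the image."""
    h, wd = img.shape[:2]
    r = w // 2
    out = np.empty((h - 2 * r, wd - 2 * r))
    for i in range(r, h - r):
        for j in range(r, wd - r):
            out[i - r, j - r] = saliency_at(img, i, j, w)
    return out


img = np.random.default_rng(0).random((10, 12, 3))
print(saliency_map_valid(img, 5).shape)   # (6, 8): smaller than (10, 12)
```

With this reading, a 10 x 12 image and a 5 x 5 $R_{2}$ only produce a 6 x 8 map, which is what prompted the question.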

Regarding the above, I have the following question: if the region $R_{2}$ moves in a sliding-window manner, won't the saliency map (mentioned in section 3.1) be smaller than the original image, just as the output of a convolution is smaller than its input? If so, wouldn't it be impossible to add the saliency maps at different scales, since each would have a different size?
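Here is a small, self-contained sketch of the problem as I understand it, again assuming (my assumption, not the paper's) that $R_{1}$ is the centre pixel, $R_{2}$ is an odd-sized square window, $D$ is the Euclidean distance between mean colours, and windows are only evaluated where they fit without padding. The three window sizes are arbitrary and the function name is hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def saliency_map_valid(img: np.ndarray, w: int) -> np.ndarray:
    """Unpadded saliency map for one R2 size w (odd); R1 = centre pixel."""
    r = w // 2
    # windows has shape (H - w + 1, W - w + 1, C, w, w)
    windows = sliding_window_view(img, (w, w), axis=(0, 1))
    r2_mean = windows.mean(axis=(-2, -1))                 # (H-w+1, W-w+1, C)
    centre = img[r:img.shape[0] - r, r:img.shape[1] - r]  # matching R1 pixels
    return np.linalg.norm(centre - r2_mean, axis=-1)      # D per position


img = np.random.default_rng(0).random((32, 32, 3))
maps = {w: saliency_map_valid(img, w) for w in (5, 9, 15)}
for w, m in maps.items():
    print(w, m.shape)   # 5 (28, 28), then 9 (24, 24), then 15 (18, 18)

try:
    _ = maps[5] + maps[9]   # element-wise sum across two scales
except ValueError as err:   # NumPy cannot broadcast (28, 28) with (24, 24)
    print("cannot add:", err)
```

Each scale produces a map of a different shape, so a plain element-wise sum over scales fails.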

The following edit re-explains the question in more detail:

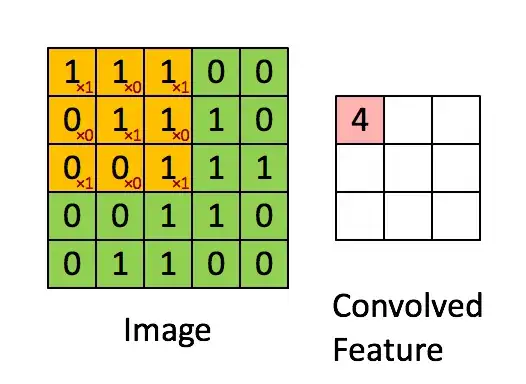

In the animation above, a filter slides across an image. At each position of the filter, a calculation is performed between the filter and the image pixels beneath it, and the result becomes one pixel of the output image (denoted by "convolved feature"). The output is smaller than the input because the filter fits in only 9 positions. From what I understand of the salient region operation, a similar process is followed: a filter slides across the image, a calculation is performed at each position, and each result becomes one pixel of the output image (the saliency map). So won't the saliency map be smaller than the original image?

Furthermore, the output size depends on the filter size. For the 5 x 5 input in the animation (stride 1, no padding), a 3 x 3 filter gives a 3 x 3 output, but a 5 x 3 filter would give only a 1 x 3 output; in general, an $H \times W$ image and an $h \times w$ filter give an $(H - h + 1) \times (W - w + 1)$ output. Since the saliency maps at different scales would therefore have different sizes, they could not be added element-wise. I am clearly missing or misunderstanding something here, and any clarification would be much appreciated.
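The size arithmetic above can be checked directly. This sketch assumes a 5 x 5 input (the size implied by 9 positions of a 3 x 3 filter at stride 1) and counts filter positions with NumPy's `sliding_window_view`; `valid_output_shape` is a hypothetical helper that just encodes the formula.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def valid_output_shape(image_shape, filter_shape):
    """Unpadded, stride-1 output size: (H - h + 1, W - w + 1)."""
    (H, W), (h, w) = image_shape, filter_shape
    return (H - h + 1, W - w + 1)


image = np.arange(25).reshape(5, 5)   # assumed 5 x 5 input
for filt in [(3, 3), (5, 3)]:
    # one window per filter position, so the leading dims are the output size
    n_positions = sliding_window_view(image, filt).shape[:2]
    print(filt, valid_output_shape(image.shape, filt), n_positions)
# (3, 3) (3, 3) (3, 3)
# (5, 3) (1, 3) (1, 3)
```

The number of positions depends only on the image and filter shapes, not on what is computed at each position, so the same shrinkage would apply to any sliding-window saliency computation done without padding.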

P.S. The paper gives no indication of padding or any similar operation, so I don't want to assume anything that could make my calculations wrong.
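Purely for comparison, and not as a claim about the paper's method, this sketch shows what symmetric zero padding (a hypothetical choice) would do in the 5 x 5 example: padding each side by half the filter size restores the input size for odd filter dimensions. It also illustrates why I am wary of assuming it, since the padded zeros would enter the border windows and change the values computed there.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def padded_window_count(image: np.ndarray, filt: tuple) -> tuple:
    """Number of filter positions after symmetric zero padding (hypothetical).

    Pads (h - 1) // 2 rows and (w - 1) // 2 columns on each side, which for
    odd filter sizes makes the output the same size as the input.
    """
    h, w = filt
    pad = (((h - 1) // 2,) * 2, ((w - 1) // 2,) * 2)
    padded = np.pad(image, pad)   # default mode="constant" pads with zeros
    return sliding_window_view(padded, filt).shape[:2]


image = np.zeros((5, 5))
print(padded_window_count(image, (3, 3)))   # (5, 5)
print(padded_window_count(image, (5, 3)))   # (5, 5)
```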