I'm following this toric code tutorial, where they constructed the $X$-logicals matrix using the Künneth theorem.

I'm confused about why they specifically used $X$-logicals when only $Z$ errors were allowed to occur.

I used the following code to create the $Z$-logicals matrix manually.

    from scipy.sparse import dok_matrix


    def toric_code_z_logicals(L):
        n_qubits = 2 * L * L  # Total number of qubits in the toric code lattice
        matrix = dok_matrix((2, n_qubits), dtype=int)  # Two logical Z operators

        # Logical Z Operator 1: Mark every L-th qubit starting from the first qubit
        logical1_indices = [i * L for i in range(L)]

        # Logical Z Operator 2: Mark all qubits in the last row of the lattice
        logical2_start = n_qubits - L  # Starting index of the last row
        logical2_indices = [logical2_start + i for i in range(L)]

        # Fill in the matrix for the two logical Z operators
        for idx in logical1_indices:
            matrix[0, idx] = 1
        for idx in logical2_indices:
            matrix[1, idx] = 1

        return matrix.todense()
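One way to sanity-check a hand-built logicals matrix like this is to confirm that each row commutes with every $X$ stabilizer and is not itself a product of $Z$ stabilizers. The sketch below is only illustrative: it assumes the stabilizer matrices come from a hypergraph product of two length-$L$ ring codes, with `hx = [h ⊗ I | I ⊗ hᵀ]` and `hz = [I ⊗ h | hᵀ ⊗ I]`, which may not match the tutorial's qubit ordering. The helpers `ring_code`, `toric_hx`, `toric_hz`, `gf2_rank` and `check_z_logicals` are hypothetical names introduced here.

```python
import numpy as np
from scipy.sparse import dok_matrix


def ring_code(L):
    # Assumed convention: cyclic repetition code, row i checks bits i and i+1 (mod L).
    h = np.zeros((L, L), dtype=int)
    for i in range(L):
        h[i, i] = 1
        h[i, (i + 1) % L] = 1
    return h


def toric_hx(L):
    # Assumed X-stabilizer matrix: [h (x) I | I (x) h^T].
    h, eye = ring_code(L), np.eye(L, dtype=int)
    return np.hstack([np.kron(h, eye), np.kron(eye, h.T)]) % 2


def toric_hz(L):
    # Assumed Z-stabilizer matrix: [I (x) h | h^T (x) I].
    h, eye = ring_code(L), np.eye(L, dtype=int)
    return np.hstack([np.kron(eye, h), np.kron(h.T, eye)]) % 2


def toric_code_z_logicals(L):
    # Same construction as in the question, returned as a plain ndarray.
    n_qubits = 2 * L * L
    matrix = dok_matrix((2, n_qubits), dtype=int)
    for i in range(L):
        matrix[0, i * L] = 1             # every L-th qubit from qubit 0
        matrix[1, n_qubits - L + i] = 1  # the last L qubits
    return np.asarray(matrix.todense())


def gf2_rank(mat):
    # Rank over GF(2) by Gaussian elimination with XOR row operations.
    m = np.array(mat, dtype=np.uint8) % 2
    rows, cols = m.shape
    rank = 0
    for col in range(cols):
        pivot = next((r for r in range(rank, rows) if m[r, col]), None)
        if pivot is None:
            continue
        m[[rank, pivot]] = m[[pivot, rank]]
        for r in range(rows):
            if r != rank and m[r, col]:
                m[r] ^= m[rank]
        rank += 1
        if rank == rows:
            break
    return rank


def check_z_logicals(L):
    hx, hz, lz = toric_hx(L), toric_hz(L), toric_code_z_logicals(L)
    # Commutation with all X stabilizers: hx @ lz^T must vanish mod 2.
    commutes = not np.any((hx @ lz.T) % 2)
    # Number of independent rows that lz adds on top of the Z stabilizers.
    extra_rank = gf2_rank(np.vstack([hz, lz])) - gf2_rank(hz)
    return commutes, extra_rank
```

Calling `check_z_logicals(L)` returns a pair: whether the rows commute with the assumed `hx`, and how many independent rows they add beyond the row space of the assumed `hz`. Two independent, commuting rows would indicate a valid pair of $Z$ logicals under these assumptions.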

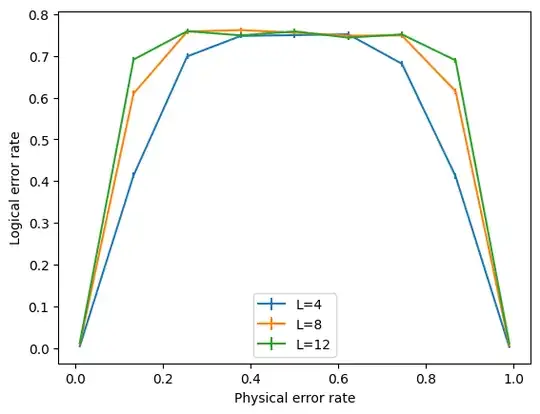

When I used the $Z$-logicals instead and plotted the logical error rate against the physical error rate, for physical error rates up to 1, I got the graph above, which looks like a bell curve.