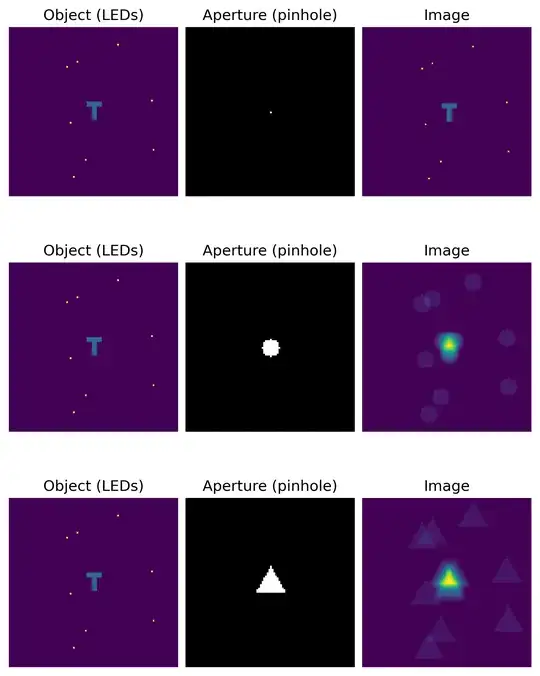

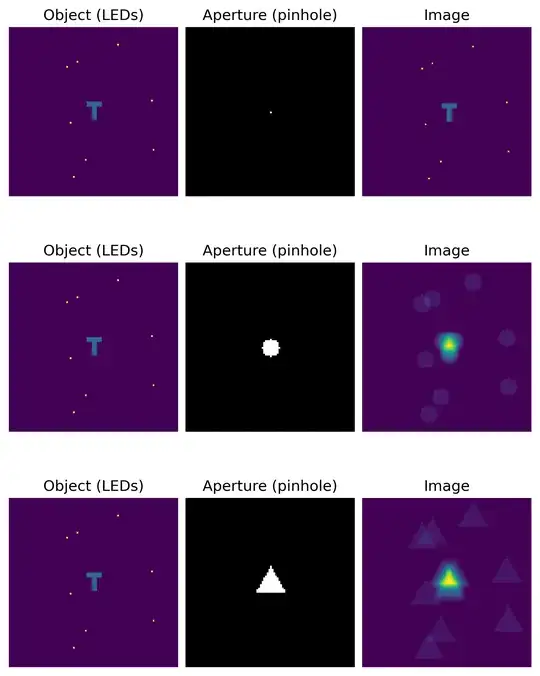

It still works with big holes; the image quality just degrades quickly. Under some simplifying assumptions (ray optics and incoherent light, if you are interested), the image formed by a pinhole camera can be calculated as the convolution of the object with the pinhole. This might sound abstract, but it just means that the image is a smeared-out version of the object, and the amount of smearing is set by the size of the aperture. The image below shows what that looks like. The 'object' is a bunch of point sources (small LEDs) with the letter T in the center. In the third row you can recognize the shape of the aperture in the image: a convolution replaces every point source with a copy of the aperture.
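To make the "copy of the aperture" idea concrete, here is a minimal sketch on a toy grid. The 7x7 scene and the L-shaped aperture are made up for illustration (they are not part of the simulation in this post); the only library call used is `scipy.signal.convolve2d`.

```python
import numpy as np
from scipy import signal

# Hypothetical toy scene: a single point source in the middle of a 7x7 grid.
scene = np.zeros((7, 7), dtype=int)
scene[3, 3] = 1

# Hypothetical 3x3 L-shaped aperture, asymmetric so its orientation is visible.
aperture = np.array([[1, 0, 0],
                     [1, 0, 0],
                     [1, 1, 1]])

# Under the ray-optics / incoherent-light assumptions, the image is the
# scene convolved with the aperture.
image = signal.convolve2d(scene, aperture, mode="same")

# The point source has been replaced by a copy of the aperture,
# centred on the position of the source.
print(image[2:5, 2:5])
```

With several point sources, each one gets its own copy of the aperture, and overlapping copies simply add up.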

Here is the code if you want to play around:

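# %%
import numpy as np
import matplotlib.pyplot as plt
import scipy.signal  # import the submodule explicitly; a bare `import scipy` may not expose scipy.signal on older versions

# %%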

n = 100
n_LEDs = 8
# Pixel coordinates, with the origin at the centre of the grid
x = np.arange(-n // 2, n // 2)
y = np.arange(-n // 2, n // 2)
X, Y = np.meshgrid(x, y)

# Start with the letter T
obj = ((np.abs(X) < 2) & (np.abs(Y) < 5)) | (
    (np.abs(Y + 5) < 2) & (np.abs(X) < 5)
)
obj = obj.astype(int)

# Add some LEDs (point sources)
for _ in range(n_LEDs):
    # Cast the bounds to int; randint is documented for integer bounds
    i, j = np.random.randint(int(n * 0.1), int(n * 0.9), size=2)
    obj[i, j] = 3

# Define the point spread functions (which happen to be the same as the
# apertures for incoherent light in the ray optics regime)
PSF_smallpinhole = (X == 0) & (Y == 0)
PSF_largepinhole = X**2 + Y**2 <= 5**2
PSF_triangle = (
    ((np.cos(np.pi / 6) * X + np.sin(np.pi / 6) * Y) >= -5)
    & ((-np.cos(np.pi / 6) * X + np.sin(np.pi / 6) * Y) >= -5)
    & (-Y >= -5)
)
PSF_list = [PSF_smallpinhole, PSF_largepinhole, PSF_triangle]

fig, axes = plt.subplots(ncols=3, nrows=3, figsize=(6, 8), dpi=300,
                         layout="constrained")
for PSF, axrow in zip(PSF_list, axes):
    # Create the image by convolving object and PSF
    image = scipy.signal.convolve(PSF, obj, mode="same")
    axrow[0].imshow(obj)
    axrow[0].set_title("Object (LEDs)")
    axrow[0].axis("off")
    axrow[1].imshow(PSF, cmap="gray")
    axrow[1].set_title("Aperture (pinhole)")
    axrow[1].axis("off")
    axrow[2].imshow(image)
    axrow[2].set_title("Image")
    axrow[2].axis("off")
plt.show()
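
If you want a number for how the smearing grows with the hole, here is a rough sketch that images a single point source through circular pinholes of a few radii and counts the lit pixels along the central row. The grid size, the radii, and the helper `blur_width` are my own choices for illustration. `method="direct"` is only there to avoid FFT round-off in the count.

```python
import numpy as np
from scipy import signal

# Small odd-sized grid so the origin sits exactly on the centre pixel
# (an assumption of this sketch, not the grid used in the main script).
n = 41
x = np.arange(-(n // 2), n // 2 + 1)
X, Y = np.meshgrid(x, x)

# Object: a single point source at the centre.
obj = ((X == 0) & (Y == 0)).astype(int)


def blur_width(radius):
    """Width in pixels of the blur spot along the central row (hypothetical helper)."""
    disc = (X**2 + Y**2 <= radius**2).astype(int)
    image = signal.convolve(obj, disc, mode="same", method="direct")
    return int(np.count_nonzero(image[n // 2]))


widths = {r: blur_width(r) for r in (0, 2, 5)}
print(widths)  # {0: 1, 2: 5, 5: 11}, i.e. 2 * radius + 1 pixels
```

So in this model the blur spot of a point source is exactly as wide as the hole.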