I concur: $d\eta(t)/dt$ is dependent on $\eta(t)$ anyway.

Referring to the fact that the additive function $\eta(t)$ must be zero at the end points $t_1$ and $t_2$ is superfluous.

[Later edit: contributor Amit checked against the audio record, and the remark about the end points is not present in the audio record. See the answer by Amit.]

I will first discuss why it is that the process of integration by parts allows the derivation to proceed to its intended goal.

After that I will discuss the reasoning presented by Feynman.

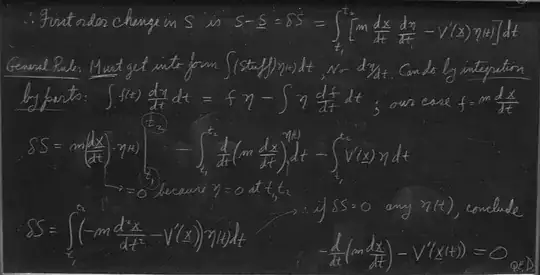

The variational equation:

$$ \delta S=\int_{t_1}^{t_2}\biggl[
m\,\frac{d\underline{x}}{dt}\, \frac{d\eta}{dt}-\eta V'(\underline{x})
\biggr]dt \tag{1} $$

In order to bring the variational equation to a form that can be solved, two elements must be dismissed: the variational addition $\eta$, and the integration.

Dismissal of the variational addition $\eta$ is expected, of course. Compare the procedure in differential calculus: add a small quantity $h$ to $x$, giving $x + h$, and then work towards a form where you can take the limit of $h$ going to zero.
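As a small worked instance of that procedure (the choice $f(x) = x^2$ is only an illustration, it is not taken from the lecture):

$$ \frac{(x+h)^2 - x^2}{h} = \frac{2xh + h^2}{h} = 2x + h \;\longrightarrow\; 2x \quad (h \to 0) $$

The added quantity $h$ no longer appears in the end result. That is the sense in which $\eta$ is to be dismissed from the variational equation.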

Processing the variational equation has a dual goal: to allow the variational addition $\eta$ to disappear, and to dismiss the integration.

At the end of the derivation the resulting equation is a differential equation. The reason for transforming to a differential equation: a differential equation can be solved.

In the form as stated above the integration cannot be dismissed, because the expression contains both the function $\eta(t)$ and its time derivative: $d\eta(t)/dt$.

In preparation I formulate a lemma:

As you sweep out the variation, the piece of information that you need is the derivative of the action at the point in variation space where the trial trajectory coincides with the true trajectory. Lemma: when two curves coincide they have the same derivative.

The product rule of differentiation:

$$ \frac{d}{dt}(\eta f)= \eta\,\frac{df}{dt} + f\,\frac{d\eta}{dt} \tag{2} $$

In the process of integration by parts the relation expressed by (2) is used to transfer differentiation with respect to time from $\eta(t)$ to $m\,\tfrac{d\underline{x}}{dt}$.

That is, the differentiation with respect to time is not eliminated: it is transferred from $\eta(t)$ to $m\,\tfrac{d\underline{x}}{dt}$.
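Written out as a sketch, with $f = m\,\tfrac{d\underline{x}}{dt}$ substituted into (2) and both sides integrated from $t_1$ to $t_2$:

$$ \int_{t_1}^{t_2} m\,\frac{d\underline{x}}{dt}\,\frac{d\eta}{dt}\,dt
= \biggl[m\,\frac{d\underline{x}}{dt}\,\eta\biggr]_{t_1}^{t_2}
- \int_{t_1}^{t_2}\frac{d}{dt}\biggl(m\,\frac{d\underline{x}}{dt}\biggr)\eta\,dt $$

The bracketed term is evaluated only at $t_1$ and $t_2$. The remaining integral is the one in which the differentiation has been moved onto $m\,\tfrac{d\underline{x}}{dt}$, and it comes with a minus sign.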

The statement below outlines the differentiation transfer:

$$ m\,\frac{d\underline{x}}{dt}\,\frac{d\eta}{dt}
\quad \Leftrightarrow \quad
\frac{d}{dt}\biggl(m\,\frac{d\underline{x}}{dt}\biggr)\eta(t) \tag{3} $$

That raises the question: how is it valid to transfer the differentiation? Well, what is being evaluated is the point in variation space where the trial trajectory coincides with the true trajectory. Same curve, same derivative.

The result:

$$
\delta S=\int_{t_1}^{t_2}\biggl[
-m\,\frac{d^2\underline{x}}{dt^2}-V'(\underline{x})
\biggr]\eta(t)\,dt \tag{4}
$$

In the above equation the terms have already been rearranged to have the core expression inside the square brackets, and all auxiliary elements outside it.

(4) is at the point where the integration can be dismissed.
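As a numerical sanity check, here is a minimal Python sketch that evaluates $\delta S$ in form (1) and in form (4) and compares the two. Everything specific in it is an assumption chosen for illustration, not something taken from the lecture: a harmonic potential $V(x) = \tfrac{1}{2}kx^2$ with $m = k = 1$, the path $x(t) = t^2$ (which need not be the true trajectory), and a variation $\eta(t)$ that is a half sine wave vanishing at $t_1$ and $t_2$.

```python
import math

def trapezoid(f, a, b, n=20000):
    """Composite trapezoid rule for the integral of f over [a, b]."""
    h = (b - a) / n
    total = 0.5 * (f(a) + f(b))
    for i in range(1, n):
        total += f(a + i * h)
    return total * h

# Illustrative assumptions (not from the lecture):
m, k = 1.0, 1.0      # mass and spring constant, V(x) = k x^2 / 2
t1, t2 = 0.0, 1.0    # end points of the integration

def x(t):
    return t * t     # some smooth path; it need not be the true one

def dx(t):
    return 2.0 * t   # first time derivative of x(t)

def ddx(t):
    return 2.0       # second time derivative of x(t)

def dV(pos):
    return k * pos   # V'(x) for the harmonic potential

def eta(t):
    # Variation chosen to vanish at t1 and t2
    return math.sin(math.pi * (t - t1) / (t2 - t1))

def deta(t):
    # Time derivative of eta(t)
    return math.pi / (t2 - t1) * math.cos(math.pi * (t - t1) / (t2 - t1))

# Form (1): contains both eta and d(eta)/dt
delta_S_1 = trapezoid(lambda t: m * dx(t) * deta(t) - eta(t) * dV(x(t)), t1, t2)

# Form (4): eta appears only as an overall factor
delta_S_4 = trapezoid(lambda t: (-m * ddx(t) - dV(x(t))) * eta(t), t1, t2)

print(delta_S_1, delta_S_4)
```

With these choices the two printed numbers should agree to within the accuracy of the trapezoid rule. Setting the expression inside the square brackets of (4) to zero gives $m\,\tfrac{d^2\underline{x}}{dt^2} = -V'(\underline{x})$.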

Feynman's presentation

Feynman offers the following consideration:

[...] there is a connection between η and its derivative; they are not absolutely independent, [...]

Feynman suggests that there's a problem there, but the very thing that makes it possible to deploy integration by parts is the fact that $d\eta(t)/dt$ and $\eta(t)$ are connected. The integration by parts capitalizes on the are-related-by-differentiation property. The $d\eta(t)/dt$-and-$\eta(t)$-are-connected circumstance isn't a problem, it's an asset.

General discussion

Differential equations form a special category of equation.

Let me contrast differential equations and the type of equation for finding the root(s) of a function. When you solve a root finding equation the solution is one or more numbers. When a differential equation is solved the solution is a function. That is: the solution space of differential equations is a space of functions.
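The contrast can be made concrete with a minimal Python sketch. Both examples are assumptions chosen for illustration: the root of $x^2 - 2$ found by bisection, and the equation $\ddot{x} = -x$ integrated with the velocity Verlet scheme. The function names are hypothetical.

```python
import math

def bisect_root(f, lo, hi, tol=1e-12):
    """Root finding: the answer is a single number."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if f(lo) * f(mid) <= 0.0:
            hi = mid          # sign change lies in [lo, mid]
        else:
            lo = mid          # sign change lies in [mid, hi]
    return 0.5 * (lo + hi)

def solve_oscillator(x0, v0, t_end, n=10000):
    """Differential equation x'' = -x: the answer is a whole function,
    represented here by samples (t, x) on a time grid (velocity Verlet)."""
    dt = t_end / n
    x, v = x0, v0
    samples = [(0.0, x)]
    for i in range(1, n + 1):
        a = -x                              # acceleration at the current position
        x = x + v * dt + 0.5 * a * dt * dt  # position update
        a_new = -x                          # acceleration at the new position
        v = v + 0.5 * (a + a_new) * dt      # velocity update
        samples.append((i * dt, x))
    return samples

root = bisect_root(lambda x: x * x - 2.0, 0.0, 2.0)   # one number
trajectory = solve_oscillator(1.0, 0.0, math.pi)      # a sampled function x(t)

print(root, len(trajectory))
```

The first call returns one value; the second returns a value of $x$ for every time on the grid, and each of those values has to be consistent with its neighbours through the differential relation.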

We have: for a differential equation the demand is that the stated differential relation must be satisfied for the whole domain concurrently. That is: the demand expressed by the differential equation is intrinsically a global demand.

Variational equations have that global property too. The solution to a variational equation is a function.

That shared property of being a global type of equation is what makes it possible to transform a variational equation into a differential equation.

About global and local

The following is quoted from the chapter that triggered the question:

Feynman Lectures, Volume II, chapter 19

Note:

I have applied the following change: where the original text said 'a minimum' I have substituted 'stationary', to align with the name 'Hamilton's stationary action'.

"Now I want to say some things on this subject which are similar to the discussions I gave about the principle of least time. There is quite a difference in the characteristic of a law which says a certain integral from one place to another is stationary—which tells something about the whole path—and of a law which says that as you go along, there is a force that makes it accelerate. The second way tells how you inch your way along the path, and the other is a grand statement about the whole path. In the case of light, we talked about the connection of these two. Now, I would like to explain why it is true that there are differential laws when there is a stationary action principle of this kind. The reason is the following: Consider the actual path in space and time. As before, let’s take only one dimension, so we can plot the graph of $x$ as a function of $t$. Along the true path, $S$ is stationary. Let’s suppose that we have the true path and that it goes through some point $a$ in space and time, and also through another nearby point $b$. Now if the entire integral from $t_1$ to $t_2$ is stationary, it is also necessary that the integral along the little section from $a$ to $b$ is also stationary. It can’t be that the part from $a$ to $b$ is a little bit more. Otherwise you could just fiddle with just that piece of the path and make the whole integral fit.

So every subsection of the path must also be stationary. And this is true no matter how short the subsection. Therefore, the principle that the whole path satisfies the stationary criterion can be stated also by saying that an infinitesimal section of path also has a curve such that it has stationary action."

To my knowledge Feynman is the only author to point out the above described property.

(Co-authors Edwin F. Taylor and Jozef Hanc mention it too, stating that they obtained the insight from Feynman's discussion.)

Crucial point:

The validity of the derivation is specifically set up to be independent of where the end points $t_1$ and $t_2$ are positioned.

$t_1$ and $t_2$ do not have to correspond to any particular point along the true trajectory of the physical system.

The whole point is: you can subdivide the trial trajectory any way you want; the validity of the derivation is independent of that.

This includes putting $t_1$ and $t_2$ arbitrarily close together, all the way down to infinitesimally close together; the validity of the derivation stands.

So you put $t_1$ and $t_2$ infinitesimally close together, with the demand that this infinitesimal criterion of stationary action must be satisfied for the whole domain concurrently.

The true trajectory satisfies the stationary action criterion on every infinitesimal subsection of the trajectory: that property propagates out to the trajectory as a whole.

Key:

The trajectory as a whole satisfies the stationary action criterion if and only if it is satisfied on each infinitesimal subsection of the trajectory concurrently.
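The subsection property can be probed numerically. The following is a minimal Python sketch under stated assumptions, none of which come from the lecture: a harmonic potential with $m = k = 1$, so that $x(t) = \sin t$ is a true trajectory; a comparison path $x(t) = t^2$ that is not a solution; and a variation $\eta(t) = \sin^2\bigl(\pi (t-a)/(b-a)\bigr)$ confined to the subsection from $a$ to $b$. The function names are hypothetical.

```python
import math

def trapezoid(f, a, b, n=4000):
    """Composite trapezoid rule for the integral of f over [a, b]."""
    h = (b - a) / n
    total = 0.5 * (f(a) + f(b))
    for i in range(1, n):
        total += f(a + i * h)
    return total * h

def delta_S_on_subsection(dx, dV_along_path, a, b):
    """First-order change of the action on [a, b], as in equation (1), with m = 1.

    dx(t):            velocity of the path
    dV_along_path(t): V'(x(t)) evaluated along the path
    The variation eta(t) = sin^2(pi (t - a)/(b - a)) is zero at a and at b.
    """
    w = b - a
    eta = lambda t: math.sin(math.pi * (t - a) / w) ** 2
    deta = lambda t: (math.pi / w) * math.sin(2.0 * math.pi * (t - a) / w)
    return trapezoid(lambda t: dx(t) * deta(t) - eta(t) * dV_along_path(t), a, b)

# Subsections of decreasing length, all starting at t = 0.3
subsections = [(0.3, 1.3), (0.3, 0.4), (0.3, 0.31)]

# True trajectory x = sin(t): velocity cos(t), V'(x) = x = sin(t)
true_path = [delta_S_on_subsection(math.cos, math.sin, a, b)
             for a, b in subsections]

# Comparison path x = t^2: velocity 2t, V'(x) = x = t^2
other_path = [delta_S_on_subsection(lambda t: 2.0 * t, lambda t: t * t, a, b)
              for a, b in subsections]

for (a, b), dS_true, dS_other in zip(subsections, true_path, other_path):
    print(f"[{a}, {b}]  true path: {dS_true:.2e}   other path: {dS_other:.2e}")
```

For the true trajectory the first-order change should come out at zero, up to numerical error, on every subsection, however short. For the comparison path it should not.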