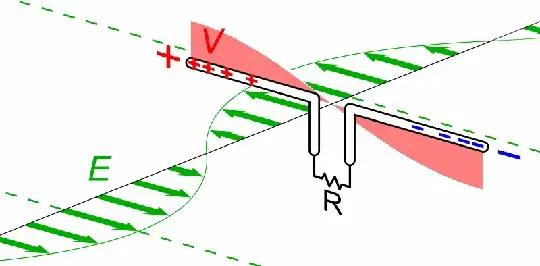

I can understand the qualitative argument for why a receiving antenna becomes resonant: an external $E$-field causes the charges in a conductor to move and bunch up, creating a voltage. If the driving $E$-field's frequency is too high, the charges do not have enough time to separate fully before the field reverses. If the frequency is too low, the charges finish separating long before the field reverses.

However, I do not see how the sweet spot at an antenna length of $L = \frac{\lambda}{2}$ arises mathematically.
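To put a number on the length in question, here is a minimal sketch that evaluates $L = \frac{\lambda}{2}$ for a given driving frequency. It assumes the free-space relation $\lambda = c/f$ and ignores any end or thickness corrections; the function name `half_wave_length` is only illustrative.

```python
# Speed of light in vacuum (exact SI value), in m/s.
C = 299_792_458.0


def half_wave_length(frequency_hz: float) -> float:
    """Return L = lambda / 2 in metres, assuming lambda = c / f in free space."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    wavelength = C / frequency_hz
    return wavelength / 2.0


if __name__ == "__main__":
    # Example: a 100 MHz driving field.
    print(f"L = {half_wave_length(100e6):.4f} m")
```

For a 100 MHz field this gives a length of roughly 1.5 m.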

In principle, only three equations should be needed to explain the system in one dimension and arrive at the supposed optimal length:

- Ohm's law: $$j = \sigma E \;\;\;,$$ which gives the current that the external sinusoidal field drives in the conductor.

- The continuity equation: $$\partial_t \rho + \partial_x j = 0 \;\;\;,$$ which will then cause the charges to bunch up at the ends of the antenna (with the boundary condition $j=0$ at both ends).

- Coulomb's law: $$E = \frac{1}{4 \pi \varepsilon_0} \int_L \frac{\rho(x') (x-x')}{|x-x'|^3}\text{d}x' \;\;\;,$$ by which the bunched-up charges create a field opposing their accumulation at the ends.
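As far as I can tell, the three equations above combine into a single equation for $\rho(x,t)$. This is only a sketch under my own assumptions: the total field in Ohm's law is taken to be the sum of the external driving field and the Coulomb field of the accumulated charge, the antenna occupies $0 \le x \le L$, and the symbol $E_\text{ext}$ is my notation for the driving field. Substituting Ohm's law into the continuity equation gives

$$\partial_t \rho(x,t) + \sigma \, \partial_x \left[ E_\text{ext}(x,t) + \frac{1}{4 \pi \varepsilon_0} \int_0^L \frac{\rho(x',t) \, (x-x')}{|x-x'|^3}\,\text{d}x' \right] = 0 \;\;\;,$$

with the boundary condition $j = \sigma E = 0$ at $x = 0$ and $x = L$, so that the bracketed total field has to vanish at both ends.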

How does one show that this system is optimal when the antenna length is half the wavelength of the driving $E$-field, $L = \frac{\lambda}{2}$? And what does "optimal" mean in this case? (Maximum charge accumulation at the ends?)

I have searched electrodynamics and RF textbooks, but have not found a rigorous mathematical derivation.