At university, we have been taught to use the following formula to calculate uncertainties, when the uncertainties are independent:

$(\Delta Q)^2 = \left(\frac{\partial Q}{\partial a}\right)^2(\Delta a)^2+\left(\frac{\partial Q}{\partial b}\right)^2(\Delta b)^2+...$

where $a, b$, etc. are the variables in the formula for $Q$.

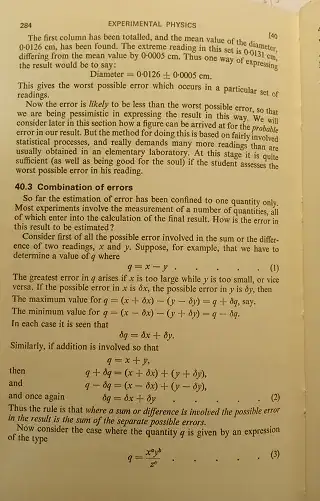

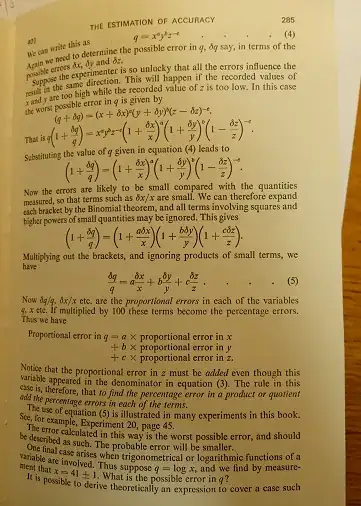

However, I have found an alternative formula in some physics laboratory manuals. Granted, it gives the fractional error in $Q$ rather than the error itself. However, when I use it to calculate the uncertainty for a particular formula, the result differs from the one given by the propagation-of-errors formula above. Here is the alternative formula:

If $Q=\frac{a^{\alpha}b^{\beta}...}{p^{x}q^{y}...}$ then

$\frac{\Delta Q}{Q}=\alpha \frac{\Delta a}{a}+\beta \frac{\Delta b}{b}+...+x\frac{\Delta p}{p}+y\frac{\Delta q}{q}+...$.

These formulae clearly don't always give the same uncertainty. Being a beginner in physics and uncertainties, the only explanation I can think of is that the latter formula gives the maximum possible uncertainty, whereas the first is more refined and closer to the actual uncertainty. Is that right?
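To make the discrepancy concrete, here is a minimal Python sketch comparing the two formulae. It assumes a made-up quantity $Q = a^2 b / p$ with invented values and uncertainties, purely for illustration. For a pure power law like this, $\frac{\partial Q}{\partial a} = \alpha \frac{Q}{a}$, so the first formula reduces to adding the terms $\alpha \frac{\Delta a}{a}$ in quadrature, while the second adds them directly. The function names are my own, not from any manual.

```python
import math

def fractional_error_quadrature(terms):
    """First formula for a power law: terms are added in quadrature.

    terms is a list of (exponent, value, uncertainty) tuples.
    """
    return math.sqrt(sum((n * dx / x) ** 2 for n, x, dx in terms))

def fractional_error_linear(terms):
    """Second formula: terms are added directly."""
    return sum(abs(n) * dx / x for n, x, dx in terms)

# Hypothetical example: Q = a^2 * b / p (values invented for illustration)
terms = [
    (2, 10.0, 0.1),   # a = 10.0 +/- 0.1, exponent 2
    (1, 5.0, 0.1),    # b = 5.0 +/- 0.1, exponent 1
    (1, 2.0, 0.02),   # p = 2.0 +/- 0.02, exponent 1 (denominator)
]

quad = fractional_error_quadrature(terms)
lin = fractional_error_linear(terms)

print(f"quadrature (first formula):  {quad:.3f}")  # 0.030
print(f"linear sum (second formula): {lin:.3f}")   # 0.050
```

With these numbers the direct sum gives a fractional error of about 0.05, while the quadrature sum gives about 0.03. The direct sum can never come out smaller than the quadrature sum, which is what makes me suspect it is a worst-case estimate.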

What I find a bit strange, though, is that even our university physics lab manual uses the latter formula. However, whenever it appears in the manual, our lab teacher tells us it is wrong and that we should use the first formula instead to calculate the uncertainty in the result!

Edit: Here is an example of the latter formula as given in a physics laboratory manual (C. B. Daish and D. H. Fender, *Experimental Physics*).