With open boundary conditions, you don't. The notion that there is some $k$-space wavefunction $u(k)$ is based on Bloch's theorem, which only applies if the Hamiltonian is translation invariant. It is possible to 'cheat' and assume periodic boundary conditions, but ultimately the Hamiltonian is only approximately translation invariant, so $k$ eigenstates do not coincide with energy eigenstates: $k$ is not a good quantum number.

An arbitrary real space Hamiltonian for the 1D chain with $M$ sites, for example, is written with respect to the basis of Fock states

$$\mathcal{F} = \{ |n_1, n_2, ... n_M\rangle | n_j \in \{0,1,2, ...\}\} $$

where $n_j$ is the number of (spin 0) bosons on the site $j$.

Note that this basis is infinite. However, matters are simplified by the fact that the Hamiltonian commutes with the total number operator $\hat{N} = \sum_j a^\dagger_j a_j$, meaning that its eigenvalue $N$ is a good quantum number.

It's therefore sufficient to diagonalise within an $N$-eigenspace, i.e. over the subspace

$$\left\{ |\mathbf{n} \rangle \in \mathcal{F} \;\Bigg|\; \hat{N} |\mathbf{n} \rangle = N |\mathbf{n} \rangle, \text{ i.e. } \sum_{j=1}^M n_j = N \right\}$$

This is the canonical "real space" basis. For example, with $M=4$, $N=1$ you would have the 4D basis

$\{|0001\rangle, |0010\rangle, |0100\rangle, |1000\rangle \}$.

Higher-$N$ sectors are spanned by the symmetrised $N$th tensor power of this single-particle space (symmetrised because the particles are bosons), which has dimension $\binom{N+M-1}{N}$.
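A minimal Python sketch of how the fixed-$N$ basis can be enumerated. The function name `fock_basis` is hypothetical, not from the text; it relies on the fact that each multiset of $N$ site labels corresponds to exactly one bosonic Fock state.

```python
from itertools import combinations_with_replacement
from math import comb


def fock_basis(M, N):
    """Hypothetical helper: all occupation tuples (n_1, ..., n_M) with sum n_j = N (bosons)."""
    basis = []
    # Each multiset of N site labels (0-based) is one bosonic Fock state.
    for sites in combinations_with_replacement(range(M), N):
        occ = [0] * M
        for s in sites:
            occ[s] += 1
        basis.append(tuple(occ))
    return basis


# Single-particle sector for M = 4 (the 4D example above, listed site 1 first).
print(fock_basis(4, 1))  # [(1, 0, 0, 0), (0, 1, 0, 0), (0, 0, 1, 0), (0, 0, 0, 1)]

# The N-particle bosonic sector has dimension C(N + M - 1, N).
print(len(fock_basis(4, 2)) == comb(4 + 2 - 1, 2))  # True
```

The dimension grows combinatorially, which is why exploiting every good quantum number (first $N$, then $k$ where available) matters in practice.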

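Assume periodic boundary conditions (PBC) with the notational convention $a_{M+1} = a_1$, and restrict to the single-particle ($N=1$) sector. There, the lattice translation operator

$\hat{T}_1 = \sum_{j=1}^M a^\dagger_{j+1}a_j$

moves the particle from site $j$ to site $j+1$. It merely permutes the basis kets, so it is unitary, and it commutes with any Hamiltonian that is itself translation invariant, such as uniform nearest-neighbour hopping $-t\sum_j (a^\dagger_{j+1}a_j + \text{h.c.})$. Writing $\hat{T}_n$ to mean $(\hat{T}_1)^n$, where $n\in\mathbb{Z}$, unitarity means the eigenvalues of $\hat{T}_n$ have the form $\exp(ikn)$ for a constant real number $k$. Eigenkets of the unitary $\hat{T}_1$ with distinct eigenvalues are orthogonal, and these orthogonal eigenkets are what you're trying to find: since $\hat{T}_1$ commutes with the Hamiltonian, they render it block diagonal, and fully diagonal when each $k$ occurs only once (as it does in the single-particle sector).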
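A small numerical check of these statements in the single-particle sector, assuming NumPy. The helper names and the uniform-hopping Hamiltonian are illustrative assumptions (a translation-invariant example chosen for this sketch), and the construction assumes $M \ge 3$.

```python
import numpy as np


def translation_matrix(M):
    """Single-particle T_1: maps site j to site j+1, with site M wrapping to site 1 (PBC)."""
    T = np.zeros((M, M))
    for j in range(M):
        T[(j + 1) % M, j] = 1.0
    return T


def hopping_hamiltonian(M, t=1.0, periodic=True):
    """Hypothetical example: uniform hopping -t (a_{j+1}^dag a_j + h.c.), M >= 3."""
    H = np.zeros((M, M))
    n_bonds = M if periodic else M - 1  # PBC adds the bond between sites M and 1
    for j in range(n_bonds):
        H[(j + 1) % M, j] = -t
        H[j, (j + 1) % M] = -t
    return H


M = 6
T = translation_matrix(M)
H = hopping_hamiltonian(M)
print(np.allclose(T.T @ T, np.eye(M)))                       # True: T_1 is unitary
print(np.allclose(T @ H, H @ T))                             # True: [T_1, H] = 0 under PBC
print(np.allclose(np.linalg.matrix_power(T, M), np.eye(M)))  # True: T_M = 1
```

Because $\hat{T}_1$ is a real permutation matrix here, its inverse is simply its transpose.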

This is Bloch's theorem.

I want to stress that this $k$ quantum number is not itself defined as the eigenvalue of a Hermitian operator, but through the eigenvalue $e^{ik}$ of the unitary $\hat{T}_1$, so it is only defined modulo $2\pi$. A Hermitian definition is only possible in continuum (non-lattice) systems, where the translation can be made infinitesimal; its generator is then precisely the momentum.

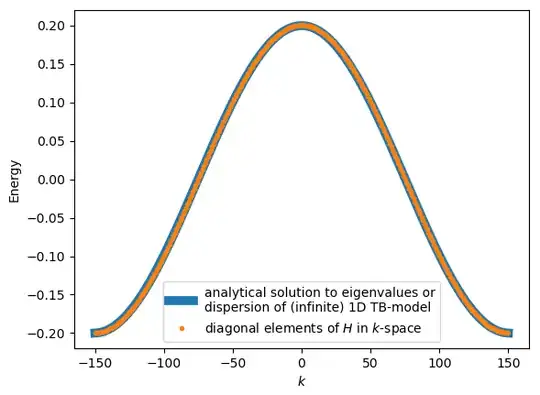

The only condition on $k$ comes from the aforementioned periodic boundary conditions: since $\hat{T}_M = \hat{1}$, we need $e^{ikM} = 1$, so the $M$ distinct values are $k = 2\pi m/M$, $m \in \mathbb{Z}_M$. Our job is to re-express the real-space single-particle basis $\{|100...0\rangle, ... ,|000...01\rangle \}$ in terms of a basis that is diagonal with respect to the elementary translation operator $\hat{T}_1$.
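As a quick sanity check of this quantisation condition, a NumPy sketch (assuming the single-particle $\hat{T}_1$ is the cyclic shift matrix) confirms that its eigenvalues are exactly the $M$th roots of unity $e^{2\pi i m/M}$:

```python
import numpy as np

M = 6
# Single-particle T_1 under PBC: T[(j + 1) % M, j] = 1 moves the particle from site j to j+1.
T = np.roll(np.eye(M), 1, axis=0)
eigvals = np.linalg.eigvals(T)

# Expected spectrum: exp(i k) with k = 2*pi*m/M, m = 0, ..., M-1.
roots = np.exp(2j * np.pi * np.arange(M) / M)
# Compare as complex numbers (not via angles) to avoid branch-cut issues near k = 0.
matched = all(np.min(np.abs(eigvals - r)) < 1e-10 for r in roots)
print(matched)  # True
```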

"Fourier transforming" the Hamiltonian then corresponds to re-expressing the Hamiltonian in the basis of $\hat{T}_1$ eigenstates. This is easy enough: write $|n_j\rangle$ to mean the Fock state with a particle on site $j$. We seek coefficients $A_{pj}$ such that

$$\hat{T}_1 \sum_{j=1}^M A_{pj}|n_j \rangle = \exp(2\pi i p /M) \sum_{j=1}^M A_{pj}|n_j \rangle$$

$$\Rightarrow \sum_{j=1}^M A_{pj}|n_{j+1} \rangle = \exp(2\pi i p /M) \sum_{j=1}^M A_{pj}|n_j \rangle$$
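The solution follows from shifting the summation index on the left-hand side, using the periodic convention $|n_{M+1}\rangle = |n_1\rangle$ and writing $A_{p0} \equiv A_{pM}$ for the coefficient that wraps around:

$$\sum_{j=1}^M A_{pj}|n_{j+1}\rangle = \sum_{j=1}^M A_{p,j-1}|n_j\rangle \quad\Rightarrow\quad A_{p,j-1} = e^{2\pi i p/M} A_{pj} \quad\Rightarrow\quad A_{pj} = e^{-2\pi i p j/M} A_{p0}$$

The wrap-around condition $A_{pM} = A_{p0}$ is automatically satisfied because $e^{-2\pi i p M/M} = 1$, and choosing $A_{p0} = 1$ fixes the overall constant.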

This is solved by $A_{pj} = \exp(-\frac{2\pi i p j }{M})$, which (with some massaging) looks like the Fourier transform used by v-joe. The new basis of "crystal momentum" eigenstates can then be written (via $k_p = 2\pi p/M$) as $|k_p\rangle = \frac{1}{\sqrt{M}}\sum_j A_{pj}|n_j\rangle = \frac{1}{\sqrt{M}}\sum_j \exp(-ik_p j)|n_j \rangle$, where the factor $1/\sqrt{M}$ normalises each state. Changing the basis of the Hamiltonian is then the same as for any other matrix, using the (unitary) change-of-basis matrix $A/\sqrt{M}$. (v-joe's answer uses this, and is probably more practically helpful.)
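A NumPy sketch of this change of basis, assuming a uniform-hopping Hamiltonian with PBC as the example (sites are labelled $0, \dots, M-1$ here rather than $1, \dots, M$, which only changes each $|k_p\rangle$ by a global phase). The columns of `V` are the normalised kets $|k_p\rangle$ with the sign convention used above:

```python
import numpy as np

M, t = 6, 1.0
j = np.arange(M)            # site labels (0-based in this sketch)
k = 2 * np.pi * j / M       # k_p = 2*pi*p/M for p = 0, ..., M-1

# Column p of V is |k_p> = (1/sqrt(M)) sum_j exp(-i k_p j) |n_j>.
V = np.exp(-1j * np.outer(j, k)) / np.sqrt(M)

T = np.roll(np.eye(M), 1, axis=0)   # single-particle T_1 with PBC
H = -t * (T + T.T)                  # hypothetical example: uniform hopping, PBC

H_k = V.conj().T @ H @ V            # Hamiltonian in the |k_p> basis

print(np.allclose(V.conj().T @ V, np.eye(M)))            # True: V is unitary
print(np.allclose(T @ V, V * np.exp(1j * k)))            # True: T_1 |k_p> = e^{i k_p} |k_p>
print(np.allclose(H_k, np.diag(-2 * t * np.cos(k))))     # True: diagonal, E(k) = -2t cos k
```

For this example the diagonal entries reproduce the familiar tight-binding band $E(k) = -2t\cos k$, since $\hat{H} = -t(\hat{T}_1 + \hat{T}_1^\dagger)$.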

None of this construction used any property of the Hamiltonian; the only assumption is that the sites are regularly spaced. In fact, $\hat{T}_1$ is still well defined for open boundary conditions (it just "teleports" the last site to the first). The catch is that $\hat{T}_1$ no longer commutes with the Hamiltonian (for a hopping chain, because the bond between sites $M$ and $1$ is missing), so the idea of an energy vs. $k$ "band structure" is no longer well defined.
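The same NumPy sketch with the boundary bond removed (again assuming uniform hopping as the example) shows both symptoms: $\hat{T}_1$ no longer commutes with $\hat{H}$, and the $|k_p\rangle$ basis no longer diagonalises it.

```python
import numpy as np

M, t = 6, 1.0
T = np.roll(np.eye(M), 1, axis=0)   # T_1 still defined: site M "teleports" to site 1
# Open chain: uniform hopping, but no bond between sites M and 1.
H_obc = -t * (np.eye(M, k=1) + np.eye(M, k=-1))

commutator = T @ H_obc - H_obc @ T
print(np.linalg.norm(commutator) > 1e-12)   # True: [T_1, H] != 0 for OBC

k = 2 * np.pi * np.arange(M) / M
V = np.exp(-1j * np.outer(np.arange(M), k)) / np.sqrt(M)
H_k = V.conj().T @ H_obc @ V
off_diag = H_k - np.diag(np.diag(H_k))
print(np.abs(off_diag).max() > 1e-3)        # True: the |k_p> no longer diagonalise H
```

The off-diagonal elements come entirely from the missing boundary bond, and each is of order $t/M$.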

So what's going on? Experiments clearly measure band structures on finite crystals. The standard argument is that the bulk of a material is locally translation invariant, and the effects of the boundaries only show up on $k$ scales of order $1/L$, where $L$ is the linear size of the crystal, far finer than what experimental probes of $k$ space usually resolve. So even if $\hat{T}_1$ is not an exact symmetry of the system, it's close enough for most practical purposes.
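One way to see this numerically, as a sketch assuming the uniform-hopping chain (the helper names are hypothetical): for each open-chain energy, find the nearest periodic-chain energy. The worst mismatch shrinks roughly like $1/M$, so the open-chain spectrum fills in the same band as the chain grows.

```python
import numpy as np


def chain_hamiltonian(M, t=1.0, periodic=True):
    """Hypothetical example: uniform nearest-neighbour hopping on M >= 3 sites."""
    H = -t * (np.eye(M, k=1) + np.eye(M, k=-1))
    if periodic:
        H[0, M - 1] = H[M - 1, 0] = -t
    return H


def max_spectral_mismatch(M):
    E_obc = np.linalg.eigvalsh(chain_hamiltonian(M, periodic=False))
    E_pbc = np.linalg.eigvalsh(chain_hamiltonian(M, periodic=True))
    # For each open-chain level, the distance to the nearest periodic-chain level.
    return np.max(np.min(np.abs(E_obc[:, None] - E_pbc[None, :]), axis=1))


for M in (20, 100, 400):
    print(M, max_spectral_mismatch(M))
```

This only compares energies; it says nothing about the eigenvectors, which is exactly where the exceptions below come in.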

However, there are important exceptions to this hand-waving argument. In so-called "topological" materials, there can also be non-trivial states localised near the edges of a material, which the $k$-space approximation misses. Such states can only be seen by directly diagonalising the real-space (open-boundary) Hamiltonian.
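A standard single-particle illustration, not taken from the text above, is the Su-Schrieffer-Heeger (SSH) chain: alternating hoppings $v$ (within a unit cell) and $w$ (between cells). With $v < w$ the periodic spectrum is gapped with $\min|E| = |w - v|$, but the open chain hosts two extra states pinned near $E = 0$ and localised at the ends. The NumPy sketch below assumes illustrative values $v = 0.5$, $w = 1$ and 20 unit cells.

```python
import numpy as np


def ssh_hamiltonian(n_cells, v, w, periodic=False):
    """SSH chain: hopping v within cell n (A_n-B_n), w between cells (B_n-A_{n+1})."""
    L = 2 * n_cells
    H = np.zeros((L, L))
    for n in range(n_cells):
        a, b = 2 * n, 2 * n + 1
        H[a, b] = H[b, a] = v
        if n < n_cells - 1 or periodic:
            a_next = (2 * n + 2) % L          # wraps to site 0 only when periodic
            H[b, a_next] = H[a_next, b] = w
    return H


n_cells, v, w = 20, 0.5, 1.0
E_pbc = np.linalg.eigvalsh(ssh_hamiltonian(n_cells, v, w, periodic=True))
E_obc, psi = np.linalg.eigh(ssh_hamiltonian(n_cells, v, w, periodic=False))

print(np.min(np.abs(E_pbc)))        # approximately |w - v| = 0.5: the bulk gap
order = np.argsort(np.abs(E_obc))
print(np.abs(E_obc[order[:2]]))     # two levels exponentially close to zero, inside the gap

# Weight of those two states on the first and last two unit cells (4 sites at each end).
edge_sites = np.r_[0:4, 2 * n_cells - 4:2 * n_cells]
weights = np.sum(np.abs(psi[edge_sites][:, order[:2]]) ** 2, axis=0)
print(weights)                      # close to 1: the in-gap states live at the edges
```

Nothing in the periodic ($k$-space) spectrum signals these in-gap states; they only appear once the open-boundary Hamiltonian is diagonalised in real space.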