I'm aware of special lenses known as telecentric lenses, but these are still, ultimately, lenses, and they lose focus beyond a certain range:

> It is a common misconception that Telecentric Lenses inherently have a larger depth of field than conventional lenses. While depth of field is still ultimately governed by the wavelength and f/# of the lens, it is true that Telecentric Lenses can have a larger usable depth of field than conventional lenses due to the symmetrical blurring on either side of best focus.

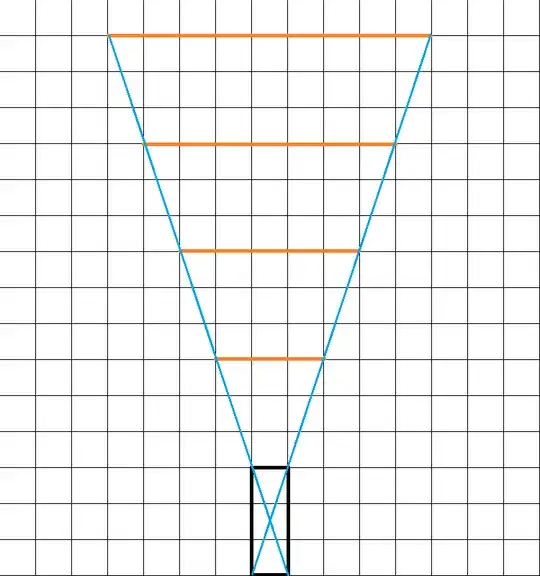

We already have sensors that can detect color, so what's to stop us from putting one at the end of a very long, thin tube coated in Vantablack, so that light can enter only from directly ahead of the tube? Each tube would generate one "pixel", and tens of thousands of them in a grid could seemingly create a truly orthographic image of whatever is directly in front of the sensors. In space this would be similar to looking at the universe through a pinhole, with perhaps a 5 meter x 5 meter square visible at a time using millions of the sensors, but it seems like such a tool would still be useful.
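To put rough numbers on the tube idea, here is a minimal geometric sketch. It assumes straight-line rays only, perfectly absorbing walls, and a sensor that fills the back of the tube; it ignores diffraction entirely. The function names and the example dimensions (a 1 mm wide, 1 m long tube) are made up for illustration.

```python
import math


def acceptance_half_angle(diameter_m: float, length_m: float) -> float:
    """Largest off-axis angle (radians) at which a straight ray can still
    pass from the tube opening to the sensor without touching the wall.

    Assumption: the extreme ray runs from one edge of the opening to the
    opposite edge of the sensor, so tan(angle) = diameter / length.
    """
    return math.atan(diameter_m / length_m)


def footprint_width(diameter_m: float, length_m: float, distance_m: float) -> float:
    """Width of the region, at a given distance in front of the opening,
    from which at least one straight ray can reach the sensor.

    The extreme rays diverge at slope diameter / length on each side,
    so the width grows linearly with distance.
    """
    return diameter_m + 2.0 * distance_m * diameter_m / length_m


if __name__ == "__main__":
    d, tube_len = 0.001, 1.0  # hypothetical tube: 1 mm wide, 1 m long
    print("half-angle (rad):", acceptance_half_angle(d, tube_len))
    for dist in (1.0, 1000.0):
        print(f"footprint at {dist} m:", footprint_width(d, tube_len, dist), "m")
```

Under these assumptions, "directly ahead" is really a narrow cone rather than a line, so the patch seen by a single tube widens with distance instead of staying the width of the tube.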

It seems like you would then be able to see a 5 meter x 5 meter patch of any object at any distance, in color, with good clarity, at true size. But that sounds too good to be true. What factors of physics would make such a device unrealistic?