Let's say you place an electric field meter some distance from a light bulb.

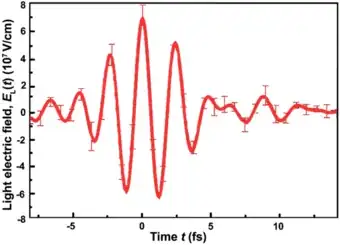

As a function of time, the output of the meter would be $\mathbf{E}(t)$. I would guess that the electric field is some very rapidly fluctuating, random-looking function.

My question is, what determines the timescale of these fluctuations in $\mathbf{E}(t)$?

In my understanding, the light from a light bulb is emitted by electrons in the atoms of the filament jumping up and down between energy levels. Each de-excitation emits a photon. I am imagining each of these photons as a traveling, localized wavepacket. The filament is made up of $\sim10^{23}$ atoms, and they all emit these wavepackets independently of one another. So I guess the total E-field is the sum of a prodigious number of these wavepackets added together with random phases.
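To make this picture concrete, here is a minimal toy sketch of it, not a model of a real filament. Assumptions (all hypothetical): each photon is a Gaussian wavepacket with envelope $e^{-s^2/2\tau^2}$ and carrier frequency $\omega_0$, emitted at a uniformly random time with a uniformly random phase; the field is treated as a complex analytic signal; time is measured in units of $\tau$. The function names `simulate_field` and `coherence` and every parameter value are made up for illustration. The script computes the normalized autocorrelation $|\langle E^*(t)E(t+s)\rangle| / \langle|E|^2\rangle$, the quantity optics texts call the first-order correlation function $g^{(1)}$. Because cross terms between packets with independent random phases average to zero, for this model it should follow $e^{-s^2/4\tau^2}$ whatever the emission rate.

```python
import cmath
import math
import random


def simulate_field(rate, tau=1.0, omega0=20.0, duration=1000.0, dt=0.1, seed=0):
    """Toy model: complex (analytic-signal) field built from Gaussian wavepackets.

    Each hypothetical packet has envelope exp(-s^2 / (2 tau^2)), carrier
    frequency omega0, a uniformly random emission time and a uniformly
    random phase. `rate` is the number of packets emitted per unit time.
    """
    rng = random.Random(seed)
    n_samples = int(duration / dt)
    field = [0j] * n_samples
    n_packets = int(rate * duration)   # packets emitted during the window
    half_width = int(5 * tau / dt)     # envelope is negligible beyond 5 tau
    for _ in range(n_packets):
        t_k = rng.uniform(0.0, duration)         # random emission time
        phi_k = rng.uniform(0.0, 2 * math.pi)    # random, independent phase
        centre = int(t_k / dt)
        start = max(0, centre - half_width)
        stop = min(n_samples, centre + half_width + 1)
        for i in range(start, stop):
            s = i * dt - t_k
            envelope = math.exp(-0.5 * (s / tau) ** 2)
            field[i] += envelope * cmath.exp(1j * (omega0 * s + phi_k))
    return field


def coherence(field, max_lag):
    """Normalised first-order correlation |<E*(t) E(t+lag)>| / <|E|^2>, lag in samples."""
    n = len(field)
    power = sum(abs(e) ** 2 for e in field) / n
    result = []
    for lag in range(max_lag + 1):
        acc = sum(field[i].conjugate() * field[i + lag] for i in range(n - lag))
        result.append(abs(acc / (n - lag)) / power)
    return result


if __name__ == "__main__":
    dt = 0.1  # time step in units of tau
    for rate in (0.5, 5.0):  # sparse vs. heavily overlapping packets
        g1 = coherence(simulate_field(rate, dt=dt), max_lag=40)
        for lag in (0, 10, 20, 40):
            s = lag * dt
            predicted = math.exp(-s ** 2 / 4)  # exp(-s^2 / (4 tau^2)) with tau = 1
            print(f"rate={rate}: s={s:.1f} tau  simulated={g1[lag]:.2f}  predicted={predicted:.2f}")
```

Comparing two emission rates is the point of the design: in this toy model the rate changes the total power but should not change how quickly $g^{(1)}$ decays, which is set by the single-packet duration $\tau$. The carrier frequency $\omega_0$ drops out of $|g^{(1)}|$ entirely.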

What determines the timescale of the individual wavepackets? The average lifetime of an excited state? What's the back-of-the-envelope way of estimating that? How many of these wavepackets does one atom emit per second? Finally, what determines the time scale of fluctuations of the sum of a ton of these wavepackets?

I'm looking for a solid physical picture of what's going on that would let me calculate some numbers (order-of-magnitude estimates). Also, what is the right terminology that people use when talking about this?