I know this question was posted 10 years ago. I checked your user profile, and by the looks of it you are still visiting physics.stackexchange, so I decided to go ahead and post this answer.

Indeed, it is possible to derive the Euler-Lagrange equation using differential operations only.

In October 2021 I posted an answer discussing Hamilton's stationary action. Among other things, it contains a derivation of the Euler-Lagrange equation using differential operations only.

The reason this is possible was recognized long before the calculus of variations was developed.

As we know, Johann Bernoulli issued the brachistochrone challenge in 1696. (In retrospect, the brachistochrone problem is recognized as exactly the type of problem the calculus of variations is suited to. The calculus of variations itself was developed decades later, by Euler and Lagrange in the mid-18th century.)

Among the few mathematicians of the time who were able to solve the brachistochrone problem was Jacob Bernoulli, Johann's older brother.

Jacob Bernoulli recognized a crucial feature of the brachistochrone problem, and he presented that feature in the form of a lemma:

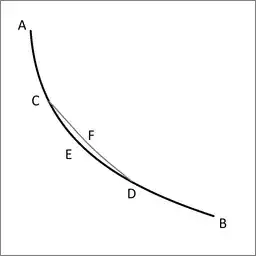

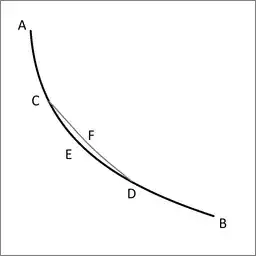

> Let ACEDB be the desired curve along which a heavy point falls from A to B in the shortest time, and let C and D be two points on it as close together as we like. Then the segment of arc CED is among all segments of arc with C and D as end points the segment that a heavy point falling from A traverses in the shortest time. Indeed, if another segment of arc CFD were traversed in a shorter time, then the point would move along AGFDB in a shorter time than along ACEDB, which is contrary to our supposition.
>
> (Acta Eruditorum, May 1697, pp. 211-217)

Rephrasing:

Take the solution to the brachistochrone problem, and take an arbitrary subsection of that curve. That subsection is itself a solution of the brachistochrone problem between its own end points, for a point that has already fallen from A. This holds at any scale, down to arbitrarily small ones.

Therefore the following strategy will work:

Divide the curve into concatenated subsections, and formulate an equation that holds for all the subsections simultaneously.
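Here is a minimal discrete sketch of this strategy in Python. It is a discrete analogue of Jacob's lemma that I wrote for illustration, not his argument. The curve is approximated by a polyline on a grid. The helper names `segment_time` and `fastest_path`, the value of `G`, the grid and the end points are all hypothetical choices.

The key assumption is that the sliding time on each straight segment depends only on that segment's end points. The speed at depth $d$ below A is $\sqrt{2 g d}$ by energy conservation, so the total time is a plain sum over concatenated segments.

```python
import math

G = 9.81  # assumed gravitational acceleration in m/s^2 (illustrative value)

def segment_time(p, q):
    """Time for a frictionless bead to slide along the straight segment p -> q.

    Points are (x, depth), with depth measured downward from the release point A,
    so the speed at depth d is sqrt(2 * G * d). Along a straight incline the
    acceleration is constant, hence time = length / average speed
    = 2 * length / (v_p + v_q).
    """
    (x0, d0), (x1, d1) = p, q
    v0, v1 = math.sqrt(2 * G * d0), math.sqrt(2 * G * d1)
    if v0 + v1 == 0.0:
        return math.inf  # horizontal segment at the release height: bead never moves
    return 2 * math.hypot(x1 - x0, d1 - d0) / (v0 + v1)

def fastest_path(xs, depths, start, end):
    """Minimum-time polyline on a layered grid, found column by column (dynamic programming).

    start/end are (column index, depth index) of the fixed end points.
    Returns (total time, list of depth indices, one per column from start to end).
    """
    c0, i0 = start
    c1, i1 = end
    best = {i0: (0.0, [i0])}  # depth index -> (time so far, path so far)
    for c in range(c0 + 1, c1 + 1):
        rows = [i1] if c == c1 else range(len(depths))
        new_best = {}
        for i in rows:
            q = (xs[c], depths[i])
            # Extend every best partial path from the previous column by one segment.
            candidates = (
                (t + segment_time((xs[c - 1], depths[j]), q), path)
                for j, (t, path) in best.items()
            )
            t, path = min(candidates, key=lambda tp: tp[0])
            new_best[i] = (t, path + [i])
        best = new_best
    return best[i1]

xs = [0.1 * k for k in range(11)]       # columns from x = 0 to x = 1 (m)
depths = [0.05 * k for k in range(21)]  # allowed depths from 0 to 1 (m)
A, B = (0, 0), (10, 10)                 # A at depth 0, B at depth 0.5 m

total, path = fastest_path(xs, depths, A, B)

# Discrete version of the lemma: re-optimize only between two intermediate
# points C and D of the optimal path and compare with the original sub-arc.
c, d = 3, 7
sub_total, sub_path = fastest_path(xs, depths, (c, path[c]), (d, path[d]))
piece = sum(segment_time((xs[k], depths[path[k]]), (xs[k + 1], depths[path[k + 1]]))
            for k in range(c, d))
print(f"optimal time A->B: {total:.4f} s")
print(f"sub-arc C->D on the global optimum: {piece:.4f} s, re-optimized: {sub_total:.4f} s")
```

The two sub-arc times agree. A faster sub-arc between C and D could be spliced into the global path and would beat it, which is Jacob's argument in discrete form. It works only because the segment times add up independently of each other.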

Generalizing:

In order for the derivative of $\int_{x_1}^{x_2} L(x, y, y')\,dx$ to be zero, divide the domain into concatenated subsections and impose the condition that, for every subsection between $x_1$ and $x_2$, the derivative of the corresponding integral is zero simultaneously. Then take the limit of infinitesimally short subsections.
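This recipe can be written out as a worked equation under a few assumptions. The integrand is a Lagrangian $L(x, y, y')$, and the end values $y(x_1)$ and $y(x_2)$ are held fixed. On each subsection, $y'$ is approximated by a forward difference; that discretization is my choice, for illustration only.

Let $h = (x_2 - x_1)/N$, $s_k = x_1 + k h$ and $y_k = y(s_k)$. The integral becomes a sum over the $N$ concatenated subsections:

$$S_N = \sum_{k=0}^{N-1} L\!\left(s_k,\ y_k,\ \frac{y_{k+1}-y_k}{h}\right) h .$$

An interior value $y_k$ ($0<k<N$) appears in only two terms: the subsection that starts at $s_k$ and the one that ends there. Setting the derivative with respect to $y_k$ to zero gives

$$\frac{\partial S_N}{\partial y_k} = h\,\frac{\partial L}{\partial y}\bigg|_k - \frac{\partial L}{\partial y'}\bigg|_k + \frac{\partial L}{\partial y'}\bigg|_{k-1} = 0 ,$$

where $\big|_k$ means evaluation at $\left(s_k,\ y_k,\ \frac{y_{k+1}-y_k}{h}\right)$. Dividing by $h$ and letting $h \to 0$, the difference of $\partial L/\partial y'$ between neighbouring subsections becomes a derivative:

$$\frac{\partial L}{\partial y} - \frac{d}{dx}\frac{\partial L}{\partial y'} = 0 .$$

No integration by parts is needed. Each condition involves only two adjacent subsections.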

When you see the Euler-Lagrange equation derived using integration by parts, your first reaction may well be: "Hang on, this derivation results in a differential equation; what happened to the integration?"

The reason the integration can be eliminated is that the calculus of variations is about infinitesimals to begin with.

The condition that the derivative must be zero applies to subsections down to infinitesimal length, and therefore to all concatenated subsections simultaneously.
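To see that the resulting condition is purely local, here is a small Python sketch. The example is my own choice, not taken from the earlier answer: the harmonic-oscillator Lagrangian $L = \tfrac12 \dot y^2 - \tfrac12 \omega^2 y^2$ with a uniform time step $h$. The values of `omega`, `T` and `steps`, and the helper name `march`, are hypothetical.

Requiring the discretized action $\sum_k L\big(y_k, (y_{k+1}-y_k)/h\big)\,h$ to be stationary with respect to each interior $y_k$ gives

$$-h\,\omega^2 y_k - \frac{y_{k+1}-y_k}{h} + \frac{y_k-y_{k-1}}{h} = 0 .$$

This involves only neighbouring subsections. The path can therefore be built one subsection at a time, and no integral is ever evaluated.

```python
import math

def march(omega, h, steps, y0, y1):
    """Build the stationary path subsection by subsection.

    Each step solves the local stationarity condition for y[k+1]:
        y[k+1] = (2 - (h * omega)**2) * y[k] - y[k-1]
    Returns the list [y_0, y_1, ..., y_steps].
    """
    ys = [y0, y1]
    for _ in range(steps - 1):
        ys.append((2 - (h * omega) ** 2) * ys[-1] - ys[-2])
    return ys

omega, T, steps = 2.0, 1.0, 1000
h = T / steps
# The two starting values are taken from the exact solution y(t) = sin(omega * t).
ys = march(omega, h, steps, 0.0, math.sin(omega * h))
print(f"y(T) from local steps: {ys[-1]:.6f}, exact sin(omega*T): {math.sin(omega * T):.6f}")
```

As $h$ shrinks, the values produced by these purely local steps approach the solution of the differential equation $\ddot y = -\omega^2 y$.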

Concatenable

Jacob's lemma and its generalized form apply when the problem can be subdivided into concatenable subsections.

In mathematics there are also classes of problems for which subdivision into concatenable subsections is not available. An example is the traveling salesman problem.