I would like to know how the far-field, traveling electromagnetic radio waves separate from the near-field, non-traveling electromagnetic fields at a radio transmitter antenna. How is a non-traveling electromagnetic field converted into a traveling electromagnetic field?

3 Answers

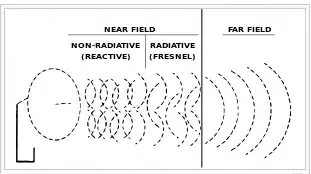

You are confused. The near-field and far-field components are both present at all distances from the antenna. The difference is that whilst the near-field components fall off in amplitude as $\sim r^{-2}$ or faster, the far-field components fall off as $r^{-1}$. In other words, they coexist, but one can define three regions: one where the near field dominates (at small $r$), one where the far field dominates (at large $r$), and a third where they are of comparable magnitude and must both be considered.
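
To make the coexistence concrete, here is the standard textbook result for an idealized short (Hertzian) dipole. That antenna model is an assumption of this illustration, not something specified in the question, and the symbols $I_0$ (current amplitude), $dl$ (dipole length), $\eta$ (impedance of free space) and $k = 2\pi/\lambda$ are introduced only for this sketch:

$$
E_\theta = \frac{j \eta k I_0\, dl \sin\theta}{4\pi r}\left[1 + \frac{1}{jkr} - \frac{1}{(kr)^2}\right] e^{-jkr}
$$

The three terms in the bracket give contributions that fall off as $r^{-1}$, $r^{-2}$ and $r^{-3}$ respectively. All three are present at every $r$; their magnitudes are equal at $kr = 1$, i.e. at $r = \lambda/2\pi$.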

There is no black-and-white dividing line between these regions; it depends on the purpose of your calculations or investigations. Typically one says that the far-field radiation dominates if $r \gg \lambda/2\pi$ (where $\lambda$ is the wavelength of the radiation) and if $r \gg l$, where $l$ is the size of the antenna. How large a factor $\gg$ has to be is up to you and the accuracy you need.
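
As a rough numerical sketch of the $r \gg \lambda/2\pi$ criterion, assume an idealized short dipole, for which the transverse electric field contains terms whose magnitudes relative to the radiation term are $1/(kr)$ and $1/(kr)^2$, with $k = 2\pi/\lambda$. The function name `near_to_far_ratios` and the 100 MHz frequency are illustrative choices, not part of the question:

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def near_to_far_ratios(r, wavelength):
    """Relative size of the near-field terms for an assumed short dipole.

    Returns (1/(kr), 1/(kr)^2): the magnitudes of the r^-2 and r^-3
    contributions to the transverse E field, each divided by the
    magnitude of the r^-1 (radiation) contribution.
    """
    kr = 2 * math.pi * r / wavelength
    return 1 / kr, 1 / kr**2


wavelength = C / 100e6                  # illustrative 100 MHz transmitter
crossover = wavelength / (2 * math.pi)  # distance at which kr = 1

for multiple in (0.1, 1, 10, 100):
    r = multiple * crossover
    induction, quasi_static = near_to_far_ratios(r, wavelength)
    print(f"r = {r:8.3f} m  r^-2 term: {induction:10.4f}  r^-3 term: {quasi_static:10.4f}")
```

At ten times $\lambda/2\pi$ the near-field terms are already down to 10% and 1% of the radiation term. Whether that counts as "$\gg$" depends on the accuracy you need.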


I read a bit of the Wikipedia article on near and far fields and antennas.

To start with, an electromagnetic wave always travels with velocity $c$, no matter how the interference patterns of continuous emission appear.

An analogy: when one is near a lamp, one can see the emitting wires or diodes, which means the light is not a plane wave but has a particular structure. At a distance, the intensity from the light source follows the $1/r^2$ law of a point source, and the light can be described by a spherical wave.

> I would like to know how far field traveling electromagnetic radio waves separate from the near field non-traveling electromagnetic fields at a radio transmitter antenna.

It is not a matter of separating electromagnetic waves, but of the diminishing importance of the interference patterns that control the near fields. These patterns arise from the small distance to the source and the shape of the source, with the wavelength as the gauge.

Calling it a "non-traveling" wave is a misnomer. It is a non-traveling diffraction pattern. Diffraction patterns depend on the continuous emission of the electromagnetic wave from the particular geometry of the antenna. At a distance, as the beam opens in angle and the energy density drops, these diffraction patterns disappear and a dipole pattern of energy density dominates, if the antenna is a dipole.


A perfect oscillator may create an electric field in a capacitor and a magnetic field in an inductor, and (being 'perfect') will not radiate. That's not useful for a radio, so we add a component, the antenna, that has both electric and magnetic fields but ALSO has a loss element: a resistive component in its impedance.

The energy loss in an antenna is intended to be radiation of an electromagnetic wave out into space. The OTHER parts of the energy provided to the antenna are NOT lost; they just cycle between magnetic-field (inductive) energy and electric-field (capacitive) energy, back and forth, just like in an LC tank circuit.
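
In circuit terms, this split can be sketched with the antenna's feed-point impedance. The symbols below ($Z_A$, $R_{\rm rad}$, $X_A$, $I_0$) are notation assumed for this illustration, and ohmic losses in the conductors are ignored:

$$
Z_A = R_{\rm rad} + jX_A, \qquad P_{\rm rad} = \tfrac{1}{2}\,|I_0|^2\,R_{\rm rad}
$$

Here $I_0$ is the peak feed current. The resistive part accounts for the time-averaged power carried away by the wave, while the reactive part $X_A$ describes energy that is only exchanged back and forth between the source and the fields near the antenna.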

To evaluate an antenna design, the question of how much power is emitted requires a calculation of the fields near the antenna, due to the supplied current at the transmitter frequency. That is important because nearby objects (the transmitter tower, the ground) and the shape of the antenna elements determine the beam direction. The radiated intensity in any given direction, though, depends only on the way those local fields couple to a traveling-wave solution of the wave equation. So, after solving for the local fields, one also finds a second part of the solution: the far-field traveling wave that delivers radio to your receiver.

The calculation has two parts. The physical situation is only ONE thing, but at a distance we can use approximations that wouldn't work up close to the antenna (where we care about different answers anyhow).
