It is known that optical fibres use light waves for fast communication and for many other things, such as measurement. Everything is fine as long as the wave fits into the fibre. But what happens when the fibre is made so that its diameter is less than the distance between the wave's crest and trough? Such a fibre acts like a rail, and the wave is like a train on it. But the wave actually comes out of the fibre and enters it again, right? If we refer to this phenomenon as tunneling (where the photon is continuously entering and exiting the fibre), then isn't there also a considerable probability that the wave leaves the fibre? And even if it doesn't, shouldn't continuous tunneling affect the probability amplitude (but it doesn't)?

2 Answers

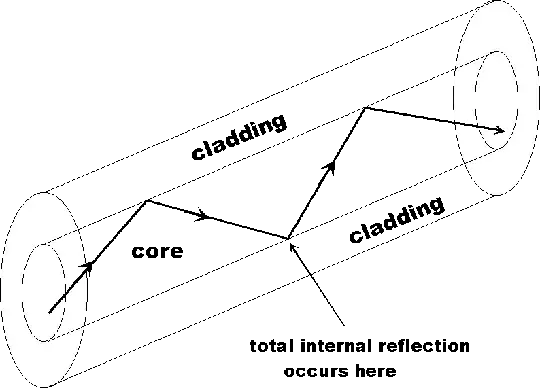

This is the basic mechanism for transmitting light through optical fibers: total internal reflection. A diameter smaller than the wavelength has no obvious effect on the transmission, apart from making the fiber almost impossible to construct. Such fibers would also be rather ineffective for carrying sufficient information. The cladding makes the fibers act as waveguides. The wave can leave the fiber: there are losses in optical fiber transmission, and minimizing them is an ongoing job for engineers in this field. Using wave functions to analyse tunneling effects is rather unnecessary, because in reality losses occur for a multitude of reasons, and tunneling accounts for less than 1-2% of them.
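As a rough illustration of the total internal reflection condition mentioned above, here is a minimal sketch using Snell's law. The refractive indices are assumed example values, not figures from this answer, and the function names are made up for illustration.

```python
import math

def critical_angle_deg(n_core, n_clad):
    """Critical angle in degrees, measured from the normal to the
    core/cladding interface. Follows from Snell's law:
    sin(theta_c) = n_clad / n_core."""
    if n_clad >= n_core:
        raise ValueError("total internal reflection needs n_core > n_clad")
    return math.degrees(math.asin(n_clad / n_core))

def is_totally_reflected(incidence_deg, n_core, n_clad):
    """True if a ray hitting the interface at this angle (from the normal)
    is totally reflected back into the core."""
    return incidence_deg > critical_angle_deg(n_core, n_clad)

# Assumed, illustrative indices for a silica core and cladding.
n_core, n_clad = 1.45, 1.44
print(critical_angle_deg(n_core, n_clad))        # a bit above 83 degrees
print(is_totally_reflected(85, n_core, n_clad))  # grazing ray: stays in the core
print(is_totally_reflected(80, n_core, n_clad))  # steeper ray: escapes
```

Because the two indices are so close, only rays travelling nearly parallel to the fiber axis are guided.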

MAIN REASONS FOR LOSSES (OPTICAL ATTENUATION):

1) Bending of fibers.

2) Improper joining of two optical fiber cores, leading to losses at the junction.

3) Irregularities on the inner surface. These can cause the reflected wave to hit the opposite surface at a 'bad' angle, one at which it can no longer undergo total internal reflection. The wave then escapes the core, causing information loss.
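The loss mechanisms listed above are usually added up in decibels. The sketch below shows that bookkeeping. All numerical values are hypothetical placeholders, and lumping the surface-irregularity loss into a single per-kilometre figure is a simplifying assumption.

```python
def total_loss_db(length_km, attenuation_db_per_km,
                  n_splices, splice_loss_db, bend_loss_db=0.0):
    """Sum the loss contributions in dB.

    attenuation_db_per_km: distributed loss (e.g. from irregularities), assumed
    splice_loss_db:        loss per imperfect joint, assumed
    bend_loss_db:          lumped loss from all bends, assumed
    """
    return (length_km * attenuation_db_per_km
            + n_splices * splice_loss_db
            + bend_loss_db)

def output_power_mw(input_mw, loss_db):
    """Convert a dB loss back to linear power: P_out = P_in * 10^(-loss/10)."""
    return input_mw * 10 ** (-loss_db / 10)

# Hypothetical link: 10 km of fiber, 4 splices, one lumped bend loss.
loss = total_loss_db(10, 0.2, 4, 0.1, bend_loss_db=0.5)
print(loss, output_power_mw(1.0, loss))
```

Working in dB turns the product of per-element transmission factors into a simple sum.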

For a more mathematical approach to calculating losses in optical fibers, I suggest you read THIS PDF.


Optical fibers (the inner core) are normally wider than a couple of wavelengths (8-9 $\mu m$ diameter for 1.5 $\mu m$ wavelength). In general, waveguides have a cut-off wavelength (for each mode) beyond which no propagating waves, only damped waves, exist along the waveguide. For the lowest mode, this occurs when half the wavelength of the internal "plane wave" becomes comparable to the diameter of the guide.

This is not completely true in dielectric waveguides like optical fibers. They use an outer (cladding) refractive index that is lower than the refractive index of the core. At the operating wavelengths, total reflection then ensures that only evanescent (non-propagating) waves penetrate the outer dielectric, which works like a perfect reflector. Increasing the wavelength in an optical fiber is equivalent to decreasing the incident angle of the internal "plane wave" on the outer cladding, so that eventually total reflection no longer occurs and no true cut-off wavelength exists. In this wavelength region, propagating waves still exist along the optical fiber, but their loss is very high because the wave energy leaves the fiber. Therefore, waves with half wavelengths comparable to the diameter of the fiber core are not useful.

Note: "Tunneling", i.e., loss of photons due to transmission through the cladding layer (in spite of being in the total reflection regime), plays only a minor role in the loss of propagating modes of the optical fiber.
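To put numbers on the core size quoted above, one can use the standard normalized frequency (V-number) of a step-index fiber, $V = 2\pi a\,\mathrm{NA}/\lambda$ with $\mathrm{NA} = \sqrt{n_1^2 - n_2^2}$. The fiber carries a single mode when $V < 2.405$. The sketch below uses assumed, illustrative indices and core radius, not values from this answer.

```python
import math

V_CUTOFF = 2.405  # first zero of the Bessel function J0: second mode appears above this

def numerical_aperture(n_core, n_clad):
    """NA of a step-index fiber."""
    return math.sqrt(n_core**2 - n_clad**2)

def v_number(core_radius_um, wavelength_um, n_core, n_clad):
    """Normalized frequency V = 2*pi*a*NA/lambda."""
    na = numerical_aperture(n_core, n_clad)
    return 2 * math.pi * core_radius_um * na / wavelength_um

def second_mode_cutoff_um(core_radius_um, n_core, n_clad):
    """Wavelength above which only the fundamental mode is guided."""
    na = numerical_aperture(n_core, n_clad)
    return 2 * math.pi * core_radius_um * na / V_CUTOFF

# Assumed values: 8.2 um core diameter, indices typical of silica fiber.
a, n1, n2 = 4.1, 1.4504, 1.4447
v = v_number(a, 1.55, n1, n2)
print(v, v < V_CUTOFF)                   # single-mode at 1.55 um under these assumptions
print(second_mode_cutoff_um(a, n1, n2))  # cutoff of the second mode, in um
```

Note that this cutoff applies to the second mode. In an ideal step-index fiber the fundamental mode has no cutoff, consistent with the statement above that no true cut-off wavelength exists. At long wavelengths it simply becomes weakly confined and lossy.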

Note: I found another post relevant to this topic: Why does a single mode fibre have a cutoff wavelength?
