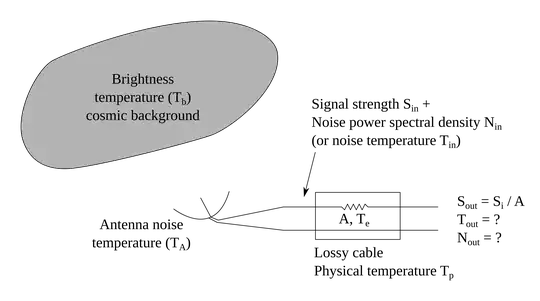

Assume a lossless antenna connected to a lossy cable at a physical temperature of 300 K. If this antenna is pointed into space, its noise temperature depends on the brightness temperature of the objects in its beam (in this case the cosmic background radiation, ignoring atmospheric radiation). In practice, the noise temperature of a Yagi antenna pointed into space is around 63–68 K. However, since the physical temperature of the connecting cable is much higher, is this low antenna temperature relevant for a receiver system?

From what I understand, the output noise of a passive device (here, the lossy cable) is calculated as follows. Let $T_{in}$ be the input noise temperature, $A$ the attenuation of the device as a linear power ratio ($A \ge 1$), and $T_e=(A-1)T_p$ the equivalent input noise temperature of the device at physical temperature $T_p$. Then

$$
\begin{align*}
T_{out} &= \frac{T_{in} + T_e}{A} \\
&= \frac{T_{in}}{A} + \frac{(A-1)T_p}{A} \\
&= \frac{T_{in} - T_p}{A} + T_p
\end{align*}
$$

Converting to noise power spectral densities ($N = kT$, with $k$ Boltzmann's constant):

$$
N_{out} = \frac{N_{in} - N_{p}}{A} + N_p
$$

This makes sense if $N_{in} \ge N_p$: the excess noise above the thermal noise floor is reduced by the attenuation of the passive device, and the output noise has to be at least the thermal noise at the physical temperature of the system.
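As a quick numerical check of the cascade formula above, here is a minimal Python sketch. It assumes a cable loss of 2 dB (a hypothetical value; the question does not state one) and takes 65 K from the quoted Yagi range. The helper names are illustrative only. The sketch just evaluates the algebra: it confirms that the original and rearranged forms agree and that the PSD form is the temperature form scaled by $k$. It does not by itself settle whether the formula is physically meaningful when $T_{in} < T_p$.

```python
BOLTZMANN = 1.380649e-23  # J/K (exact SI value)


def db_to_linear(loss_db: float) -> float:
    """Convert a loss in dB to a linear power attenuation A >= 1."""
    return 10 ** (loss_db / 10)


def output_temperature(t_in: float, t_p: float, atten: float) -> float:
    """T_out = (T_in + T_e) / A with T_e = (A - 1) T_p (matched lossy two-port)."""
    t_e = (atten - 1) * t_p  # input-referred equivalent noise temperature
    return (t_in + t_e) / atten


def output_temperature_rearranged(t_in: float, t_p: float, atten: float) -> float:
    """Rearranged form T_out = (T_in - T_p) / A + T_p."""
    return (t_in - t_p) / atten + t_p


def output_psd(n_in: float, n_p: float, atten: float) -> float:
    """Same relation written for noise PSDs in W/Hz (N = k T)."""
    return (n_in - n_p) / atten + n_p


if __name__ == "__main__":
    t_in = 65.0     # K, within the 63-68 K range quoted for the Yagi
    t_p = 300.0     # K, physical temperature of the cable
    loss_db = 2.0   # ASSUMED cable loss, not given in the question
    a = db_to_linear(loss_db)

    t_out = output_temperature(t_in, t_p, a)
    print(f"A = {a:.3f}, T_out = {t_out:.1f} K")

    n_out = output_psd(BOLTZMANN * t_in, BOLTZMANN * t_p, a)
    print(f"N_out = {n_out:.3e} W/Hz, k*T_out = {BOLTZMANN * t_out:.3e} W/Hz")
```

With these numbers $T_{out}$ lands between $T_{in}$ and $T_p$, which is what the rearranged form predicts for any $A > 1$.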

My main question is: does this equation still hold when $N_{in} < N_p$? This is the case for the antenna connected to a lossy cable, where the noise captured by the antenna is below the thermal noise floor at the physical temperature of the system.
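To put numbers on the condition $N_{in} < N_p$, the short Python sketch below converts both temperatures to thermal noise PSD ($kT$) in dBm/Hz. The 65 K antenna temperature is an assumed representative value from the 63–68 K range above, and `psd_dbm_per_hz` is a hypothetical helper name.

```python
import math

BOLTZMANN = 1.380649e-23  # J/K (exact SI value)


def psd_dbm_per_hz(temp_k: float) -> float:
    """Thermal noise PSD k*T expressed in dBm/Hz (1 mW reference)."""
    return 10 * math.log10(BOLTZMANN * temp_k / 1e-3)


t_antenna = 65.0  # K, assumed value within the quoted Yagi range
t_cable = 300.0   # K, physical temperature of the cable

n_in = psd_dbm_per_hz(t_antenna)
n_p = psd_dbm_per_hz(t_cable)
print(f"N_in = {n_in:.2f} dBm/Hz, N_p = {n_p:.2f} dBm/Hz")
# A negative difference means the antenna noise is below the cable's thermal floor
print(f"N_in - N_p = {n_in - n_p:.2f} dB")
```

The difference depends only on the temperature ratio, $10\log_{10}(T_{in}/T_p)$, so the antenna's noise PSD sits a few dB below the 300 K floor in this scenario.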

Assume all impedances are matched in the diagram.