$\newcommand{\mean}[1] {\left< #1 \right>}$

$\DeclareMathOperator{\D}{d\!}$

$\DeclareMathOperator{\pr}{p}$

Proof that $\beta = \frac{1}{k T}$ and that $S = k \ln \Omega$

This proof relies only on classical thermodynamics and the microcanonical ensemble.

It makes no assumptions about the analytic form of statistical entropy, nor does it involve the ideal gas law.

Pressure $P$ in the microcanonical ensemble

First recall that in a system described by the microcanonical ensemble, there are $\Omega$ possible microstates of the system.

The pressure of an individual microstate $i$ is given from mechanics as:

\begin{align}

P_i &= -\frac{\D E_i}{\D V}

\end{align}

Assuming only $P$-$V$ mechanical work, the energy of a microstate $E_i(N,V)$ depends on only two variables, $N$ and $V$.

Therefore, at constant composition $N$,

\begin{align}

P_i &= -\left( \frac{\partial E_i}{\partial V} \right)_N

\end{align}

The energy of an individual microstate is trivially independent of the number of microstates $\Omega$ in the ensemble, because $E_i$ is a function of $N$ and $V$ only and not of $\Omega$.

Therefore, the pressure of an individual microstate can also be expressed as

\begin{align}

P_i &= -\left( \frac{\partial E_i}{\partial V} \right)_{\Omega,N}

\end{align}

According to the Gibbs postulate of statistical mechanics, the macroscopic pressure of a system is given by the statistical average of the pressures of the individual microstates:

\begin{align}

P = \mean{P} &= \sum_i^\Omega \pr_i P_i

\end{align}

where $\pr_i$ is the equilibrium probability of microstate $i$.

For a microcanonical ensemble, all microstates have the same energy $E_i = E$, where $E$ is the energy of the system.

Therefore, from the fundamental assumption of statistical mechanics, all microcanonical microstates have the same probability at equilibrium

\begin{align}

\pr_i = \frac{1}{\Omega}

\end{align}

It follows that the pressure of a microcanonical system is given by

\begin{align}

P = \mean{P} &= -\sum_i^\Omega \frac{1}{\Omega} \left( \frac{\partial E_i}{\partial V} \right)_{\Omega,N} \\

&= -\left( \frac{\partial \left( \frac{\sum_i^\Omega E_i}{\Omega} \right) }{\partial V} \right)_{\Omega,N} \tag{1}\label{eq1}

\end{align}
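The step leading to $\eqref{eq1}$ uses only the linearity of the derivative and the fact that $\Omega$ is held fixed. A minimal numerical sketch of that step follows. The microstate energy functions `E_i`, the exponents, and the values `E0` and `V0` are hypothetical, chosen only so that all microstates share the same energy at `V0` while having different slopes in $V$; they are not part of the proof.

```python
# Hypothetical toy model: Omega = 4 microstates whose energies coincide at V0
# (as required in a microcanonical ensemble) but respond differently to volume.
exponents = [0.5, 2.0 / 3.0, 1.0, 4.0 / 3.0]  # assumed values, for illustration only
E0, V0 = 1.0, 2.0                              # assumed common energy and volume

def E_i(g, V):
    """Energy of a microstate with (assumed) volume exponent g."""
    return E0 * (V0 / V) ** g

def mean_energy(V):
    """Average of the microstate energies at fixed Omega."""
    return sum(E_i(g, V) for g in exponents) / len(exponents)

h = 1e-6  # step for central finite differences

# Pressure of each microstate, P_i = -(dE_i/dV), then the ensemble average.
P_i = [-(E_i(g, V0 + h) - E_i(g, V0 - h)) / (2 * h) for g in exponents]
mean_P = sum(P_i) / len(P_i)

# Pressure obtained by differentiating the averaged energy instead.
P_from_mean_E = -(mean_energy(V0 + h) - mean_energy(V0 - h)) / (2 * h)

print(mean_P, P_from_mean_E)
```

Under these assumptions the two printed numbers agree to within finite-difference error, which is all that the interchange of sum and derivative claims.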

The macroscopic energy $E$ of a microcanonical system is given by the average of the energies of the individual microstates in the ensemble:

\begin{align}

E = \mean{E} &= \frac{\sum_i^\Omega E_i}{\Omega}

\end{align}

Substituting this into $\eqref{eq1}$ above, we see that the pressure of a microcanonical system $P$ can also be expressed as

\begin{align}

P &= -\left( \frac{\partial E}{\partial V} \right)_{\Omega,N}

\tag{2}\label{eq2}

\end{align}

This expression for the pressure $P$ of a microcanonical system (derived wholly from statistical mechanics) can be compared to the classical expression

\begin{align}

P &= -\left( \frac{\partial E}{\partial V} \right)_{S,N}

\end{align}

which immediately suggests a functional relationship between entropy $S$ and $\Omega$.

Identification of $\frac{1}{\beta} \D \ln\Omega$ with $T \D S$

In the microcanonical ensemble, energy $E$ is a function of $\Omega$, $V$, and $N$.

We now take the total differential of the energy $E(\Omega, V, N)$ for a microcanonical system at constant composition $N$:

\begin{align}

\D E = \left(\frac{\partial E}{\partial \ln \Omega}\right)_{V, N} \D \ln\Omega + \left(\frac{\partial E}{\partial V}\right)_{\ln \Omega, N} \D V

\tag{3}\label{eq3}

\end{align}

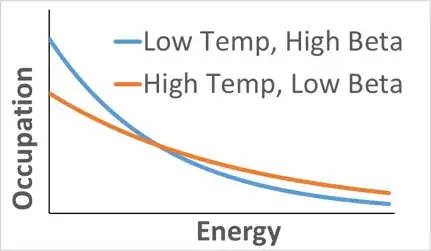

As stated in the OP, for the microcanonical ensemble, the condition for thermal equilibrium is sharing the same value of $\beta$:

\begin{align}

\beta &= \left( \frac{\partial \ln \Omega}{\partial E} \right)_{V,N}

\tag{4}\label{eq4}

\end{align}
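As an illustration of $\eqref{eq4}$ as the equilibrium condition, here is a rough sketch using two Einstein solids in thermal contact. The Einstein solid, the sizes `N_A` and `N_B`, and the total number of quanta `Q_TOTAL` are assumptions introduced only for this example; energy is counted in quanta, so $\beta$ comes out in units of inverse quanta.

```python
import math

def ln_omega(q, n):
    """ln of the multiplicity C(q + n - 1, q) of an Einstein solid
    with n oscillators sharing q energy quanta."""
    return math.lgamma(q + n) - math.lgamma(q + 1) - math.lgamma(n)

def beta(q, n):
    """Central-difference estimate of d(ln Omega)/dE, with E in quanta."""
    return (ln_omega(q + 1, n) - ln_omega(q - 1, n)) / 2.0

N_A, N_B, Q_TOTAL = 300, 200, 100  # assumed toy sizes

# Most probable split of the quanta: maximize Omega_A * Omega_B,
# i.e. the sum of the two logarithms.
q_a_star = max(
    range(1, Q_TOTAL),
    key=lambda q: ln_omega(q, N_A) + ln_omega(Q_TOTAL - q, N_B),
)

beta_a = beta(q_a_star, N_A)
beta_b = beta(Q_TOTAL - q_a_star, N_B)
print(q_a_star, beta_a, beta_b)
```

With these values the most probable split puts 60 quanta in solid A, and the two finite-difference estimates of $\beta$ come out nearly equal (both close to 1.78), even though the two solids differ in $E$, $\Omega$, and $N$.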

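After substituting $\eqref{eq2}$ and $\eqref{eq4}$ into $\eqref{eq3}$, and using the reciprocal relation $\left(\frac{\partial E}{\partial \ln \Omega}\right)_{V,N} = \frac{1}{\beta}$ that follows from $\eqref{eq4}$, we have: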

\begin{align}

\D E = \frac{1}{\beta} \D \ln\Omega - P \D V

\end{align}

Compare with the classical first law of thermodynamics for a system at constant composition $N$:

\begin{align}

\D E = T \D S - P \D V

\end{align}

Because both expressions give the same $\D E$ and share the term $-P \D V$, we see that

\begin{align}

T \D S &= \frac{1}{\beta} \D \ln\Omega \\

\D S &= \frac{1}{T \beta} \D \ln\Omega \\

\D S &= k \D \ln\Omega \tag{5}\label{eq5}

\end{align}

where

\begin{align}

k &= \frac{1}{T \beta}

\end{align}
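The relation $\D E = \frac{1}{\beta} \D \ln\Omega - P \D V$ used above can be sanity-checked numerically on a toy model. The sketch below assumes $\ln\Omega = N \ln V + \frac{3}{2} N \ln E$ (the monatomic ideal gas form with constants dropped). This functional form and all numerical values are assumptions for illustration only; the proof itself does not depend on them.

```python
import math

N = 10  # assumed particle number for the toy model

def ln_omega(E, V):
    """Assumed toy multiplicity: ln Omega = N ln V + (3/2) N ln E."""
    return N * math.log(V) + 1.5 * N * math.log(E)

def energy(lnW, V):
    """Inverse of ln_omega: E as a function of ln Omega and V."""
    return math.exp((lnW - N * math.log(V)) / (1.5 * N))

E0, V0 = 2.0, 3.0
lnW0 = ln_omega(E0, V0)
h = 1e-6  # step for central finite differences

# beta = (d ln Omega / dE) at fixed V and N
beta = (ln_omega(E0 + h, V0) - ln_omega(E0 - h, V0)) / (2 * h)
# P = -(dE/dV) at fixed ln Omega and N
P = -(energy(lnW0, V0 + h) - energy(lnW0, V0 - h)) / (2 * h)

# Compare the actual energy change with the first-order prediction.
d_lnW, dV = 1e-4, 2e-4
dE_exact = energy(lnW0 + d_lnW, V0 + dV) - E0
dE_linear = d_lnW / beta - P * dV
print(beta, P, dE_exact, dE_linear)
```

For this toy model $\beta = \frac{3N}{2E}$ and $P = \frac{2E}{3V}$, and the two energy changes agree up to second-order terms in the small steps.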

$k$ is a universal constant independent of state and composition

At this point it is not immediately obvious that $k$ is a constant. In $\eqref{eq5}$, $k$ could be a function of $\ln \Omega$ and/or a function of $N$ (since $N$ was held constant in the derivation of $\eqref{eq5}$). In this section we show that $k$ is a universal constant, independent of all state functions, including $N$.

Recall from the derivation of $\beta$ that when two or more systems are in thermal equilibrium, they necessarily share the same $\beta$ and the same $T$, yet they generally share no other state function (i.e., each system can have its own $E$, $\Omega$, $V$, $N$, composition, etc.). Thus $\beta(T)$ is an invertible function of $T$ and only $T$. More formally, we have

\begin{align}

\beta(T,X) = \beta(T)

\end{align}

for all state functions $X$.

It follows that $k = \frac{1}{T \beta(T)}$ can likewise depend on at most $T$ (it could of course also be a constant); in particular, $k$ is not a function of $N$. From $\eqref{eq5}$, both $\D S$ and $\D \ln\Omega$ are exact differentials, so $k$ must be either a function of $\Omega$ or a constant. But we have already established that $k$ cannot be a function of any state variable other than $T$, so $k$ must be a constant, independent of $\Omega$, $N$, and all other state functions.

Alternatively, since $\D S$ and $\D \ln\Omega$ are both extensive quantities, $k$ cannot depend on $\Omega$ and must be a constant (see Addendum below for detailed proof).

Therefore

\begin{align}

\beta &= \frac{1}{k T}

\end{align}

where $k$ is a universal constant that is independent of composition and state.

Integration and third law give $S = k \ln\Omega$

By integrating $\eqref{eq5}$ over $E$ and $V$, we have

\begin{align}

S &= k \ln\Omega + C(N)

\end{align}

where $C$ is a constant that is independent of $E$ and $V$, but may depend on $N$.

By invoking the third law (essentially $S=0$ when $T=0$ for all pure perfect crystalline systems) we conclude that $C$ must be independent of $N$, composition, and all other state functions. Since the constant $C$ is empirically vacuous, we can thus freely choose to set $C=0$ and arrive at the famous Boltzmann expression for the entropy of a microcanonical system:

\begin{align}

S &= k \ln\Omega

\end{align}
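As a small worked example of $S = k \ln\Omega$, the sketch below evaluates the entropy of two independent two-level systems and checks that it is additive. The two-level systems and their sizes are hypothetical; the value of $k$ is the SI Boltzmann constant, which is an empirical input rather than something the proof determines.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact in the SI)

def boltzmann_entropy(omega):
    """S = k ln(Omega) for a system with omega equally probable microstates."""
    return K_B * math.log(omega)

# Hypothetical systems: n two-level units with m of them excited,
# so Omega is the binomial coefficient C(n, m).
omega_a = math.comb(100, 30)
omega_b = math.comb(50, 10)

s_a = boltzmann_entropy(omega_a)
s_b = boltzmann_entropy(omega_b)
# Independent systems: microstate counts multiply, so entropies add.
s_ab = boltzmann_entropy(omega_a * omega_b)

print(s_a, s_b, s_ab)
```

The logarithm turns the product $\Omega_A \Omega_B$ into a sum, so `s_ab` equals `s_a + s_b` up to rounding, and a system with a single microstate has zero entropy.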

ADDENDUM:

Proof that $k=\frac{1}{T \beta}$ is a constant and cannot be a function of $\Omega$

This follows directly from the definition of an extensive function.

Let's start with this relation

\begin{align}

\D S &= \frac{1}{T \beta} \D \ln\Omega

\end{align}

and express it for clarity as

\begin{align}

\D S &= k \D Z

\end{align}

where $Z = \ln\Omega$ and $k = \frac{1}{T \beta}$ is either a constant or a function of $Z$ (and consequently of $\Omega$).

Because both $\D S$ and $\D Z$ in this equation are exact differentials, $S$ can be a function of $Z$ only.

We rewrite this equation to explicitly incorporate these features:

\begin{align}

\D S(Z) &= k(Z) \D Z \tag{6}\label{eq6}

\end{align}

Now from the definition of an extensive function we know that

\begin{align}

\D S(\lambda Z) &= \lambda \D S(Z)

\end{align}

where $\lambda$ is an arbitrary constant scalar factor.

Also,

\begin{align}

\D S(\lambda Z) &= k(\lambda Z) \D (\lambda Z) \\

\lambda \D S(Z) &= \lambda k(\lambda Z) \D Z \\

\D S(Z) &= k(\lambda Z) \D Z

\end{align}

By comparison with equation $\eqref{eq6}$ above, we see that this implies

\begin{align}

k(\lambda Z) &= k(Z)

\end{align}

Because $\lambda$ is arbitrary, $k$ takes the same value for every $Z$, and so we have shown that $k$ is a constant independent of $Z$ and $\Omega$.
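The scaling argument above can also be illustrated numerically. The sketch below integrates $\D S = k(Z) \D Z$ from $Z = 0$, taking $S(0) = 0$ as an assumption consistent with extensivity, for a constant $k$ and for a hypothetical $Z$-dependent $k$. Both choices of $k$ and the values of $Z$ and $\lambda$ are made up for illustration.

```python
import math

def entropy_from_k(k, Z, steps=10000):
    """Midpoint-rule integral of k(z) dz from 0 to Z, assuming S(0) = 0."""
    dz = Z / steps
    return sum(k((j + 0.5) * dz) for j in range(steps)) * dz

def k_constant(z):
    return 2.0            # hypothetical constant k

def k_varying(z):
    return 2.0 + 0.1 * z  # hypothetical Z-dependent k

Z, lam = 3.0, 2.0

# Constant k: S(lam * Z) equals lam * S(Z), so S is extensive in Z.
s_const_scaled = entropy_from_k(k_constant, lam * Z)
s_const_times_lam = lam * entropy_from_k(k_constant, Z)

# Z-dependent k: the two sides differ, so extensivity fails.
s_var_scaled = entropy_from_k(k_varying, lam * Z)
s_var_times_lam = lam * entropy_from_k(k_varying, Z)

print(s_const_scaled, s_const_times_lam)
print(s_var_scaled, s_var_times_lam)
```

Only the constant $k$ reproduces $S(\lambda Z) = \lambda S(Z)$; the $Z$-dependent choice picks up a term quadratic in $Z$ and breaks the scaling.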