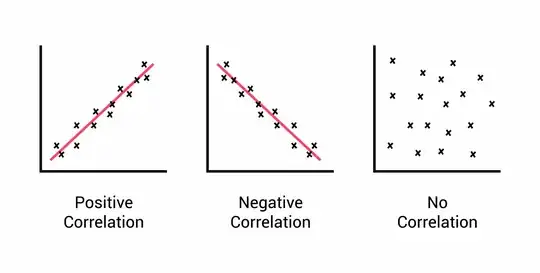

The correlation function measures, as you would expect, how correlated two random variables are. That is, how often two random variables have similar values.

We can construct such a function very simply. Say you are flipping coins, and you want to know if their results are correlated. To quantify things, call "heads" $+1$ and "tails" $-1$. To make things concrete, let's say the results from flipping each coin 5 times are:

$$
\begin{align}
\text{Coin } A:&\ +, -, -, +, - \\
\text{Coin } B:&\ -, -, -, -, + \\
\text{Coin } C:&\ +, -, +, +, - \\
\text{Coin } D:&\ -, +, -, -, +
\end{align}
$$

To measure how "correlated" a pair of results is, we want a function that is positive when the two results are similar and negative when they are dissimilar. The easiest such function is multiplication: the product is positive when the coins have the same result (++ or --) and negative when they differ (+- or -+). We can multiply the results trial by trial, then average the products to get an overall estimate of how similar the results are:

$$
\begin{align}
C_{AB} &= \frac{-1+1+1-1-1}{5} = -0.2 \\
C_{AC} &= \frac{+1+1-1+1+1}{5} = 0.6 \\
C_{AD} &= \frac{-1-1+1-1-1}{5} = -0.6
\end{align}
$$

So $A$ and $B$ seem pretty uncorrelated, $A$ and $C$ may be correlated, and $A$ and $D$ are anti-correlated.
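
The multiply-and-average recipe is easy to check in a few lines of code. Here is a minimal Python sketch using the coin sequences listed above; the names `coins` and `correlation` are only illustrative choices, not anything standard.

```python
# Coin-flip results from the example, with heads = +1 and tails = -1.
coins = {
    "A": [+1, -1, -1, +1, -1],
    "B": [-1, -1, -1, -1, +1],
    "C": [+1, -1, +1, +1, -1],
    "D": [-1, +1, -1, -1, +1],
}


def correlation(xs, ys):
    """Average of the trial-by-trial products of two equal-length sequences."""
    if len(xs) != len(ys):
        raise ValueError("sequences must have the same length")
    return sum(x * y for x, y in zip(xs, ys)) / len(xs)


c_ab = correlation(coins["A"], coins["B"])
c_ac = correlation(coins["A"], coins["C"])
c_ad = correlation(coins["A"], coins["D"])
print(c_ab, c_ac, c_ad)
```

With only five flips per coin these numbers are rough estimates; longer sequences would give a more reliable picture.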

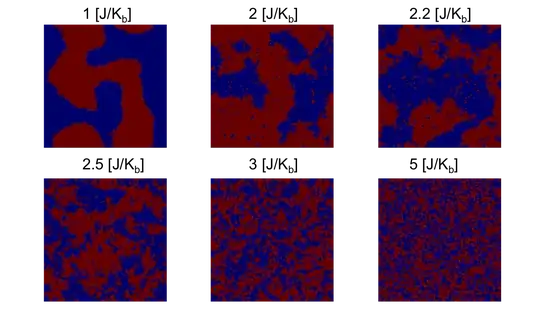

The principle is exactly the same in statistical mechanics. You have two random variables, the density at $x_1$ and the density at $x_2$. They are random because you don't know what the exact density field $n(x)$ is; you only have a probability distribution (e.g. Boltzmann) over many possible $n(x)$'s. To calculate the correlation of $n(x_1)$ and $n(x_2)$, multiply them for every realization of $n(x)$ and average the results, weighting each realization by its probability. We write this as:

$$
C_{nn}(x_1, x_2) = \langle n(x_1) n(x_2)\rangle
$$
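
To see what "multiply for every realization and average" looks like in practice, here is a sketch on a toy model small enough to enumerate exactly. Everything specific in it is an assumption made for illustration: two sites that are each empty ($0$) or occupied ($1$), a hypothetical energy $E = -J n_1 n_2 - \mu (n_1 + n_2)$, Boltzmann weights $e^{-\beta E}$, and the particular values of `beta`, `J` and `mu`.

```python
import itertools
import math


def ensemble_average(observable, states, weights):
    """Probability-weighted average of observable(state) over all states."""
    partition_function = sum(weights)
    total = sum(w * observable(s) for s, w in zip(states, weights))
    return total / partition_function


# Hypothetical toy model (illustrative values only).
beta, J, mu = 1.0, 1.0, 0.0


def energy(state):
    n1, n2 = state
    return -J * n1 * n2 - mu * (n1 + n2)


# Every realization of the "density field": (n1, n2) with each entry 0 or 1.
states = list(itertools.product([0, 1], repeat=2))
weights = [math.exp(-beta * energy(s)) for s in states]

# C_nn(x1, x2) = <n(x1) n(x2)>: multiply per realization, then average.
c_nn = ensemble_average(lambda s: s[0] * s[1], states, weights)
print(c_nn)
```

For a real system the states cannot be enumerated like this, and the average is typically estimated from sampled configurations instead.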

Now, you mentioned the covariance in your comment. It could be that for your problem $C_{nn}(x_1, x_2)$ does not give you the information you want. Perhaps $\langle n(x_1)\rangle$ and $\langle n(x_2)\rangle$ are different, but both large and positive. Then the average in $C_{nn}$ will be dominated by the product of the two average densities. In cases like this, you may be interested not in the correlation of $n(x_1)$ and $n(x_2)$ themselves, but in the correlation of their fluctuations about the mean: $n(x) - \langle n(x)\rangle$. This correlation function is the covariance!

$$
\begin{align}
C_{n-\bar{n},n-\bar{n}}(x_1, x_2) &= \Big\langle \big(n(x_1)-\langle n(x_1) \rangle \big) \big(n(x_2)-\langle n(x_2) \rangle \big) \Big\rangle \\
&= \Big\langle n(x_1)n(x_2) \Big\rangle - \Big\langle n(x_1)\langle n(x_2)\rangle \Big\rangle - \Big\langle \langle n(x_1) \rangle n(x_2) \Big\rangle + \langle n(x_1)\rangle \langle n(x_2) \rangle \\
&= \Big\langle n(x_1)n(x_2) \Big\rangle - \Big\langle n(x_1)\Big\rangle \Big\langle n(x_2) \Big\rangle \\
&= \operatorname{Cov}\big(n(x_1),n(x_2)\big)
\end{align}
$$
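
A quick numerical sketch can make the difference between $C_{nn}$ and the covariance tangible. The data below are entirely made up for illustration: two "densities" that both fluctuate around an assumed mean of 100, with a small shared fluctuation built in so that their true covariance is 0.5. The sketch also checks that the first and third lines of the derivation give the same number.

```python
import random

random.seed(0)  # fixed seed so the run is reproducible


def mean(values):
    return sum(values) / len(values)


# Hypothetical samples: large mean (100) plus small, partly shared noise.
num_samples = 10_000
n1, n2 = [], []
for _ in range(num_samples):
    e1 = random.gauss(0.0, 1.0)
    e2 = random.gauss(0.0, 1.0)
    n1.append(100.0 + e1)
    n2.append(100.0 + 0.5 * e1 + e2)

m1, m2 = mean(n1), mean(n2)

# Raw correlation <n1 n2>: dominated by the product of the means.
c_nn = mean([a * b for a, b in zip(n1, n2)])

# Covariance, computed two ways.
cov_direct = mean([(a - m1) * (b - m2) for a, b in zip(n1, n2)])
cov_shortcut = c_nn - m1 * m2

print(c_nn, cov_direct, cov_shortcut)
```

Under these assumptions `c_nn` should come out close to $100 \times 100 = 10^4$, which says almost nothing about the fluctuations, while both covariance estimates should agree with each other and land near 0.5.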