I would like to experiment with a platform that can run a virtual assistant (e.g. https://mycroft.ai/) and perform tasks such as speech-to-text, conversation, text-to-speech, image segmentation, and object detection (e.g. on an RTSP stream).

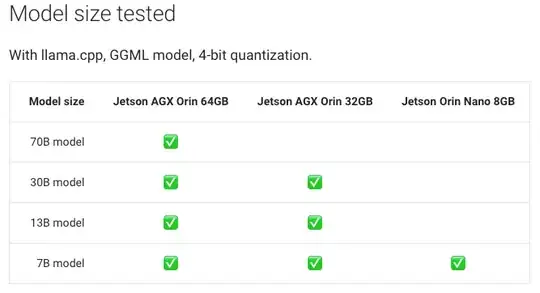

I'm also curious about running inference with large language models (LLMs), such as Facebook Research's LLaMA (https://github.com/facebookresearch/llama).

Would a platform like the NVIDIA Jetson Orin Nano (8 GB RAM) support LLM inference, or would it still be underpowered, e.g. memory-constrained? What specs should I aim for? I would mainly use PyTorch as the underlying framework.
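
For context on the memory question, here is a rough back-of-the-envelope sketch of how I would estimate the space needed just to hold the model weights. It assumes the nominal parameter counts (7B, 13B) and common bytes-per-parameter figures for each precision. It ignores activations, the KV cache, and framework overhead, so real usage would be higher. The function and dictionary names are my own, not part of any library.

```python
# Weight-only memory estimate: parameters x bytes per parameter.
# Assumption: ignores activations, KV cache, and runtime overhead.

BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}

# Nominal parameter counts (approximate, taken from the model names).
MODEL_PARAMS = {"LLaMA-7B": 7e9, "LLaMA-13B": 13e9}


def weight_memory_gib(n_params: float, dtype: str) -> float:
    """Return the memory needed to store the weights, in GiB."""
    return n_params * BYTES_PER_PARAM[dtype] / 2**30


if __name__ == "__main__":
    board_ram_gib = 8.0  # Jetson Orin Nano 8 GB variant
    for model, n_params in MODEL_PARAMS.items():
        for dtype in BYTES_PER_PARAM:
            gib = weight_memory_gib(n_params, dtype)
            verdict = "fits" if gib < board_ram_gib else "does not fit"
            print(f"{model} {dtype}: {gib:5.1f} GiB ({verdict} in {board_ram_gib:.0f} GiB)")
```

By this estimate, the 7B model needs roughly 13 GiB in fp16, which already exceeds 8 GB, so it looks like some form of quantization (8-bit or 4-bit) would be required. Also, as far as I understand, the Jetson's RAM is shared between CPU and GPU, so not all of the 8 GB would be available to the model.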