I'm building a circuit to monitor AC current for my house and I have run into a need (in software) to multiply the max ADC value for a given sampling by 0.707 to scale the reading to obtain the correct amperage.

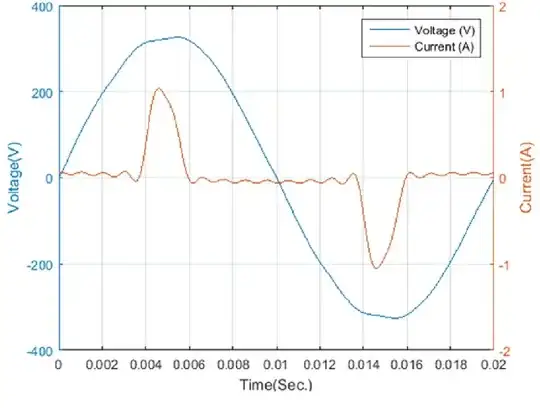

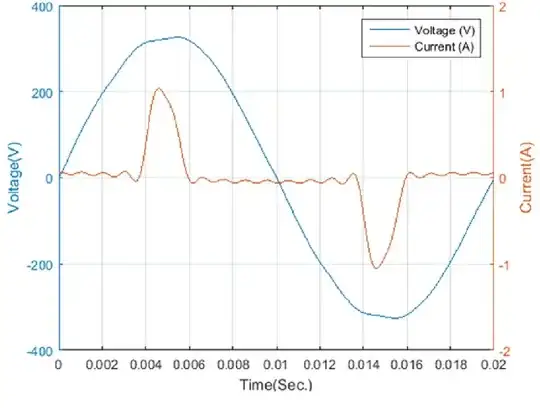

The AC current in your house will not look very sinusoidal so any simple "one-size-fits-all" multiplier that converts the peak value to the RMS is going to produce significantly inaccurate results. If you want accuracy, sample at a fairly decent rate (circa 1 kHz for 50 Hz AC mains), square each sample, sum each squared value over an appropriate period (say 1 second) then divide by the number of samples taken in that one second interval. Finally, take the square root to get the true RMS value.

This is what "RMS" means; it's the square root of the mean of the squared values.
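As a rough illustration of that procedure, here is a minimal Python sketch. It compares the true RMS against the peak-times-0.707 shortcut, assuming 1 kHz sampling of 50 Hz mains for one second. The function names, the 10 A amplitude, and the "pulsed" waveform (current flowing only near the voltage peaks) are made up for the example and are not real measurements.

```python
import math

def true_rms(samples):
    """Square each sample, take the mean, then take the square root."""
    if not samples:
        raise ValueError("need at least one sample")
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def peak_scaled(samples):
    """The shortcut: peak magnitude times cos(pi/4), roughly 0.707."""
    return max(abs(s) for s in samples) * math.cos(math.pi / 4)

SAMPLE_RATE_HZ = 1000   # assumed sampling rate
MAINS_HZ = 50           # assumed mains frequency
N = SAMPLE_RATE_HZ      # one second of samples

# Ideal 10 A peak sine wave (hypothetical load).
sine = [10.0 * math.sin(2 * math.pi * MAINS_HZ * n / SAMPLE_RATE_HZ)
        for n in range(N)]

# Hypothetical non-sinusoidal load: current only flows near the peaks.
pulsed = [s if abs(s) > 9.0 else 0.0 for s in sine]

for name, wave in (("sine", sine), ("pulsed", pulsed)):
    print(name, round(true_rms(wave), 3), round(peak_scaled(wave), 3))
```

For the pure sine the two methods agree, but for the pulsed waveform the peak-based shortcut overestimates the RMS because the peak is unchanged while most of the cycle carries no current.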

Why multiply by cos(pi/4)

Somebody's daft idea of converting values to RMS. It only works for sine waves, and AC current is nearly always strongly non-sinusoidal. This is an example from ResearchGate that shows the typical current taken by a TV: