I'm using the STM32 uC for quite a long time, from F1,F2,F3,F4 to F7. In one application I changed from the F4 (100 MHz) to the F7 (200 MHz), but this seems like it was a mistake.

The application run on the F4 with around 15kHz, on the F7 with around 12 kHz, although the F7 runs on double the clock speed. So it seems, that the two processors have different FPU architectures and as I read, the F4 has some parallelism for the FPU while the F7 can only do sequential operation.

So is it true, that for an application with heavy FPU load, an F4 outperforms an F7?

Edit:

So I made some measurements on real hardware to verify my toughts:

Hardware: STM32F722RC vs STM32F412CE

Programm: Just some FPU operations as used in my application

for(uint16_t i = 0; i < 2000; i++)

{

if(x > 6)

{

x = 0.1f;

} else if (x < -6)

{

x = -0.1f;

}

x = x + 0.05f;

x = x + sinf(x)*cosf(x);

}

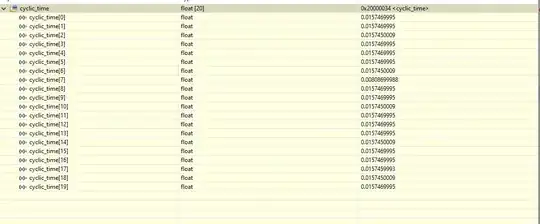

cyclic_time[ptr] = htim6.Instance->CNT;

cyclic_time[ptr] /= 1e6f;

ptr++;

if(ptr >= 20)

{

ptr = 0;

}

htim6.Instance->CNT=0;

--> So an average cycle time of around 7.365ms

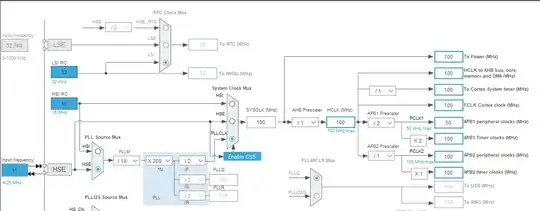

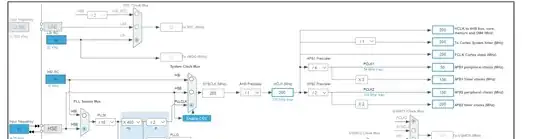

--> So an average cycle time of around 9.954ms @ 200Mhz best case (I verified, that in both cases the timer runs on the correct clock speed, 100Mhz and 99 Prescaler, such that the measurement is correct)

So that is exactly what I observed in my real application. Somehow the F4 outperformance the F7 when it comes to floating point operations.

Edit2:

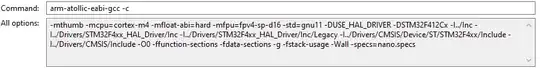

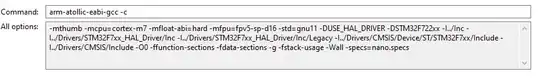

Edit3: To ensure, that the optimizer is not a problem, I tested the cycle time with the optimizer enabled on speed:

So this leads to the same problem.

Projects for F4 and F7: http://www.mediafire.com/file/lljhgsez4xk9vat/Test_Projects.rar/file

forloop would get compiled away and replaced with a constant assignment. Also, don't underestimate how complicatedsinf/cosfare: depending on your math lib, this might mostly be control flow or soft floating point operations! – Marcus Müller Jul 04 '20 at 10:44objdump -D -Son the object (.o) files produced by yourgcc -ccalls and compare them (e.g. withdiff) – Marcus Müller Jul 04 '20 at 11:27