Apologies in advance if I have chosen the wrong forum. This is mostly a software question; however, I thought it would be irrelevant to most StackOverflow users, who don't do electrical engineering, so I hoped I could get an answer here. If not, please suggest a more appropriate place this question can be moved to.

I'm using PulseView (sigrok) on Windows 10 installed via:

I want to analyse multichannel raw analog (binary) data in PulseView. The first problem I had was not knowing how PulseView interprets multi-channel data: interleaved, or one channel after another (that is, channel 1 in its entirety, followed by channel 2 in its entirety).
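The two candidate layouts differ only in how a (channel, sample) pair maps to a byte offset in the file. A minimal sketch of both mappings follows; `interleaved_offset` and `planar_offset` are hypothetical helper names, not part of PulseView or sigrok. It shows why a file whose byte values equal their own offsets can tell the layouts apart: with 10 channels of 25 samples each, the first sample of CH2 would come from byte 1 if the data is interleaved, but from byte 25 if each channel is stored in its entirety.

```python
def interleaved_offset(channel: int, sample: int, num_channels: int) -> int:
    """Byte offset of (channel, sample), both 0-based, when channels are
    interleaved: sample 0 of every channel, then sample 1 of every channel, ..."""
    return sample * num_channels + channel


def planar_offset(channel: int, sample: int, samples_per_channel: int) -> int:
    """Byte offset of (channel, sample), both 0-based, when each channel is
    stored in its entirety before the next channel starts."""
    return channel * samples_per_channel + sample


if __name__ == "__main__":
    # CH2 is channel index 1; its first sample is sample index 0.
    print(interleaved_offset(1, 0, num_channels=10))    # 1
    print(planar_offset(1, 0, samples_per_channel=25))  # 25
```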

Eventually, I made this simple test in Python, which writes the 256 byte values 0x00 to 0xFF, in order, to a file:

    import struct

    # Write bytes 0x00..0xFF in order, so each byte's value equals its file offset
    with open('binfile', 'wb') as f:
        for ix in range(256):
            f.write(struct.pack("=B", ix))

Then I imported the file via the Open icon in PulseView, using "Import Raw analog data without header..." (remember to set the file filter to "All files"; otherwise binfile will not be listed, as only files with the .raw or .bin extension are shown by default), with these settings:

- Data format: U8 (8-bit unsigned int)

- Number of analog channels: 10

- Sample rate (Hz): 0

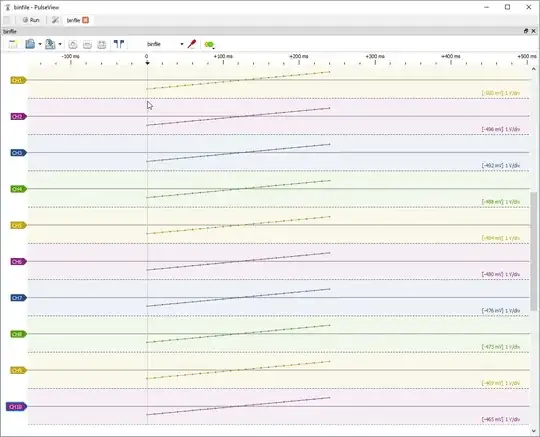

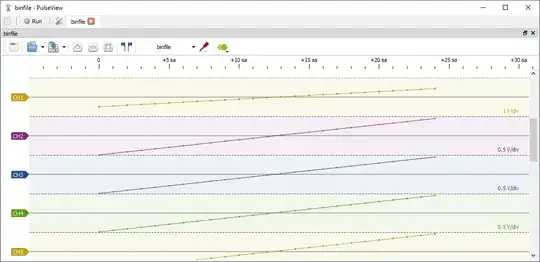
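With U8 data each sample is one byte, so one frame (one sample for each of the 10 channels) is 10 bytes. A quick sketch of that arithmetic, using a hypothetical `frames_in_file` helper and assuming the interleaved layout, shows that the 256-byte test file holds 25 complete frames plus 6 leftover bytes. How PulseView treats a trailing partial frame is not something this sketch establishes.

```python
def frames_in_file(file_size: int, bytes_per_sample: int, num_channels: int) -> tuple[int, int]:
    """Return (complete frames, leftover bytes) for interleaved raw data,
    where one frame holds one sample for every channel."""
    frame_size = bytes_per_sample * num_channels
    return divmod(file_size, frame_size)


if __name__ == "__main__":
    # 256-byte test file, U8 (1 byte per sample), 10 analog channels
    print(frames_in_file(256, bytes_per_sample=1, num_channels=10))  # (25, 6)
```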

The import succeeds and gives this plot (after manually setting "Vertical Resolution" to 1 V/div on every channel; this setting is reached by left-clicking the channel labels, CH1 etc., at the left of the tracks):

As can be seen, when the cursor is over the leftmost sample of the tracks, the values shown are -500 mV, -496 mV, -492 mV, -488 mV, -484 mV, -480 mV, -476 mV, which to me means that PulseView interpreted the data in binfile as interleaved, that is:

    byte1 | byte2 | byte3 | ... | byte10 | byte11 | byte12 | byte13 | ...
    ch1s1 | ch2s1 | ch3s1 | ... | ch10s1 | ch1s2  | ch2s2  | ch3s2  | ...

(where chXsY means channel X, sample Y)
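If the interleaved interpretation is right, channel k (counting from 0) consists of bytes k, k+10, k+20, ... of the file. The sketch below splits the data the same way, so the per-channel values can be compared with what PulseView shows on each track. `deinterleave` is a hypothetical helper, dropping a trailing partial frame is an assumption, and the demo uses `bytes(range(256))`, which is the same content the test script writes to `binfile`.

```python
def deinterleave(data: bytes, num_channels: int) -> list[list[int]]:
    """Split interleaved U8 samples into one list per channel.
    A trailing partial frame is dropped (an assumption, not PulseView's documented behaviour)."""
    usable = len(data) - len(data) % num_channels
    return [list(data[ch:usable:num_channels]) for ch in range(num_channels)]


if __name__ == "__main__":
    # Same content as binfile; use open("binfile", "rb").read() to read the real file instead.
    data = bytes(range(256))
    channels = deinterleave(data, num_channels=10)
    for ch, samples in enumerate(channels, start=1):
        print(f"CH{ch}: first samples {samples[:3]}, {len(samples)} samples")
```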

So that question is solved (hopefully, unless I have interpreted it wrongly).

What I still don't understand is:

- Why did PulseView decide to map byte value 0x00 to -500 mV, and byte value 0xFF to +500 mV? In other words, how does it choose this default range? Is this documented anywhere, and can I change the analog range (in volts) that the data is mapped onto?

- When I use Sample rate (Hz): 0, PulseView seemingly chooses a 10 ms sample period by default. Why this number, and is this documented anywhere? (I expect that if I enter a proper sample rate, I'll get the corresponding sample period instead.)
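Regarding the first question (the 0x00 to -500 mV mapping): the readings quoted above fit a plain linear map of the U8 codes 0..255 onto -0.5..+0.5 V, i.e. v = b/255 - 0.5. That formula is inferred only from the displayed numbers and the stated endpoints, not from sigrok documentation, so treat it as a hypothesis. The sketch also outlines one possible workaround for a custom range: scale the codes yourself and store the result as 32-bit little-endian floats. It assumes the import dialog's Data format list offers a matching float format and that float samples are plotted as volts without further scaling; both are unverified assumptions, and all helper names are hypothetical.

```python
import struct


def hypothesised_u8_to_volts(b: int) -> float:
    """Hypothesis only: map 0..255 linearly onto -0.5..+0.5 V.
    Inferred from the PulseView readings, not from sigrok documentation."""
    return b / 255 - 0.5


def u8_to_range(b: int, v_min: float, v_max: float) -> float:
    """Map a U8 code linearly onto a user-chosen range [v_min, v_max]."""
    return v_min + (v_max - v_min) * b / 255


def pack_f32_le(samples: list[float]) -> bytes:
    """Pack samples as consecutive 32-bit little-endian floats.
    Assumes the importer offers a matching float format (unverified)."""
    return b"".join(struct.pack("<f", v) for v in samples)


if __name__ == "__main__":
    # Reproduce the first readings in mV
    print([round(hypothesised_u8_to_volts(b) * 1000) for b in range(7)])
    # → [-500, -496, -492, -488, -484, -480, -476]

    # Example: rescale the same codes to 0..3.3 V, ready to be written to a file
    scaled = [u8_to_range(b, 0.0, 3.3) for b in range(256)]
    payload = pack_f32_le(scaled)
    print(len(payload))  # 1024 (256 samples x 4 bytes)
```

Because the conversion is element-wise, the interleaved channel order of the original bytes is preserved in the float output.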
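Regarding the second question (the 10 ms default period): sample period and sample rate are related by period = 1/rate, so the 10 ms period PulseView shows with a rate of 0 is what a 100 Hz rate would give. The sketch below, with hypothetical helper names, only illustrates that arithmetic for checking the time axis once a real rate is entered; it does not explain why PulseView picks 10 ms by default.

```python
def sample_period_s(sample_rate_hz: float) -> float:
    """Time between consecutive samples of one channel, in seconds."""
    if sample_rate_hz <= 0:
        raise ValueError("sample rate must be positive")
    return 1.0 / sample_rate_hz


def sample_time_s(index: int, sample_rate_hz: float) -> float:
    """Time of the sample at `index` (0-based), assuming the first sample is at t = 0."""
    return index * sample_period_s(sample_rate_hz)


if __name__ == "__main__":
    print(sample_period_s(100.0))      # 0.01 s: a 10 ms period corresponds to 100 Hz
    print(sample_time_s(24, 1_000.0))  # time of the 25th sample at 1 kHz (about 0.024 s)
```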