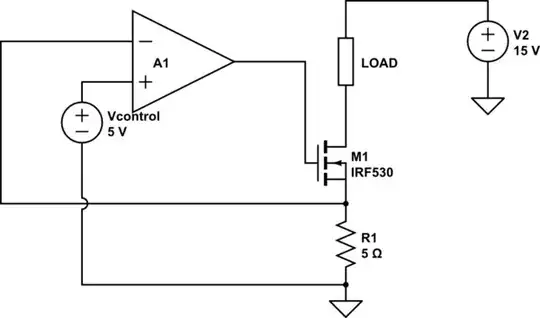

Sorry if my question is too naive; I'm new to this. I am trying to design a controlled current source for currents up to about 1 A (diagram below). My requirement is that the current depend only on the value of a single voltage source and a single resistance (which I can choose precisely).


The problem is that the op-amp's output voltage (which is also the MOSFET's gate voltage) rises all the way to the rail voltage, so the op-amp saturates at about 300 mA, well before reaching 1 A. Is there a way I can improve the above circuit so that the op-amp doesn't saturate and I can get the full 1 A?
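To make the headroom problem concrete, here is a rough sketch of the arithmetic, not a model of the actual circuit. It assumes the usual op-amp-plus-FET arrangement in which the gate must sit one gate-source voltage above the FET's source, and it treats that gate-source voltage as a constant. All component values (`R_SENSE`, `R_LOAD`, `V_GS`) are hypothetical placeholders chosen for illustration; only the 14 V output limit and the 1 A target come from the question. The sketch also ignores whether the supply feeding the load can deliver the current.

```python
# Rough headroom estimate for an op-amp + N-MOSFET current source.
# All values below are ASSUMED for illustration, except V_OUT_MAX and I_TARGET.

V_OUT_MAX = 14.0   # highest voltage the op-amp output reaches (from the question)
I_TARGET = 1.0     # desired load current in amperes (from the question)
R_SENSE = 5.0      # hypothetical sense resistor in ohms
R_LOAD = 25.0      # hypothetical load resistance in ohms
V_GS = 4.0         # hypothetical gate-source voltage at the target current


def required_gate_voltage(i_load, r_sense, v_gs, r_in_source_leg=0.0):
    """Gate voltage (relative to ground) needed to carry i_load.

    r_in_source_leg is any extra resistance between the FET source and the
    sense resistor, e.g. the load when it is placed on the source side.
    """
    return i_load * (r_sense + r_in_source_leg) + v_gs


def max_current(v_out_max, r_sense, v_gs, r_in_source_leg=0.0):
    """Largest current reachable before the op-amp output hits v_out_max."""
    return (v_out_max - v_gs) / (r_sense + r_in_source_leg)


# Load on the source side: the load's voltage drop eats into the gate headroom.
i_max_source_side = max_current(V_OUT_MAX, R_SENSE, V_GS, R_LOAD)

# Load on the drain side: only the sense resistor sits below the source.
v_gate_drain_side = required_gate_voltage(I_TARGET, R_SENSE, V_GS)

print(f"Max current with load in source leg: {i_max_source_side:.3f} A")
print(f"Gate voltage needed with load in drain leg: {v_gate_drain_side:.1f} V")
```

With the load in the source leg, every volt dropped across the load has to be added to the gate voltage, so the op-amp output runs out of range long before the target current. If the real gate voltage still hits the rail with the load on the drain side, the limit is probably something this sketch does not model, such as the supply or load on the drain side or a larger gate-source voltage than assumed.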

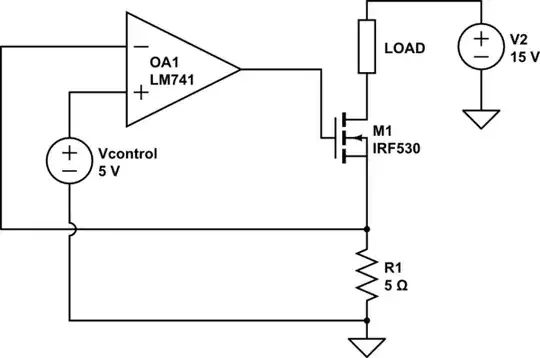

EDIT: I've changed the design following τεκ's answer and swapped the FET and the load. This should ensure that the FET's source voltage doesn't go above 5 V, but the gate voltage still goes to 14 V and saturates the op-amp. Thanks in advance.