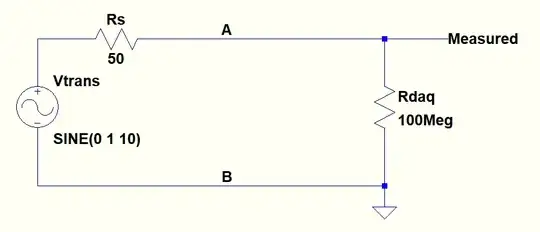

There is an industrial amplifier for force transducers. It amplifies the strain gauge output and delivers a voltage; let's call it a voltage source, Vtrans. Its output impedance is around 50 Ω.

I measure it with a data-acquisition board as follows:

The highest signal frequency of interest is 200 Hz, and the sampling rate is 500 Hz.

The problem is that with the wiring above there is 50 Hz noise in the sampled data. When I put a 1 µF capacitor across terminals A and B, the 50 Hz interference disappears. If the capacitor were simply acting as a low-pass filter strong enough to remove 50 Hz, it would also attenuate the signal components above 50 Hz, but it does not: it removes only the 50 Hz component and leaves the signal intact.
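One way to check the "it is not a low-pass" observation is to compute the first-order RC corner formed by the capacitor and the source impedance. The sketch below assumes the only series resistance is the ~50 Ω amplifier output (cable resistance and the DAQ input impedance are ignored), and `rc_lowpass` is a hypothetical helper, not part of any library. Under that assumption the corner sits near 3.2 kHz, so the capacitor alone should change a genuine 50 Hz or 200 Hz differential component by well under 1%.

```python
import math


def rc_lowpass(r_ohm: float, c_farad: float, f_hz: float) -> tuple[float, float]:
    """Return (cutoff_hz, |H(f)|) for a first-order RC low-pass filter."""
    fc = 1.0 / (2.0 * math.pi * r_ohm * c_farad)   # -3 dB corner frequency
    gain = 1.0 / math.sqrt(1.0 + (f_hz / fc) ** 2)  # magnitude of H(j*2*pi*f)
    return fc, gain


# Assumption: the ~50 Ohm amplifier output is the only series resistance.
R_OUT = 50.0   # ohms
C_AB = 1e-6    # farads, the capacitor across A and B

for f in (50.0, 200.0, 24000.0):
    fc, g = rc_lowpass(R_OUT, C_AB, f)
    print(f"f = {f:7.0f} Hz   fc = {fc:7.1f} Hz   |H| = {g:.5f}")
```

In this simple model the capacitor only becomes a meaningful attenuator at frequencies well above the ~3.2 kHz corner, which is why the computed gain at 24 kHz is included for comparison.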

Without the 1 µF capacitor, this 50 Hz component shows up in the FFT and inflates the standard deviation. More interestingly, it is still present even when I power the amplifier from a battery instead of a power supply, so I don't know where it comes from.

What kind of interference is this (common mode?), and how can I model it in a simulation so that the 50 Hz is removed while any other Vtrans signal passes almost perfectly when a 1 µF capacitor is added across A and B? In other words, how can I modify the circuit above to mimic the real scenario?

EDIT:

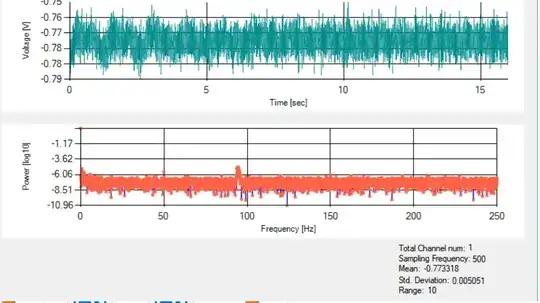

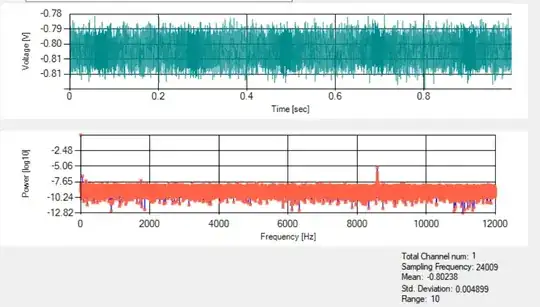

The plots below show the output in the time domain and its FFT without any capacitor, for 500 Hz and 24 kHz sampling rates:

Sampled at 500 Hz:

Sampled at 24 kHz:

And below, sampled at 666 Hz (note that the FFT peak has shifted compared with 500 Hz sampling):
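A peak that moves when the sampling rate changes is worth checking against aliasing. A real tone below fs/2 appears at the same frequency whatever the sampling rate, whereas a component above fs/2 folds to an apparent frequency that depends on fs. The sketch below applies that folding rule; `alias_frequency` is a hypothetical helper, and the 550 Hz tone is purely illustrative (chosen only because it would land at 50 Hz when sampled at 500 Hz), not a measured value.

```python
def alias_frequency(f_hz: float, fs_hz: float) -> float:
    """Apparent frequency (0..fs/2) of a real tone at f_hz sampled at fs_hz."""
    k = round(f_hz / fs_hz)        # nearest integer multiple of the sampling rate
    return abs(f_hz - k * fs_hz)   # distance to it = frequency folded into 0..fs/2


RATES = (500.0, 666.0, 24000.0)

# A genuine 50 Hz tone is below fs/2 at every rate, so it never moves.
for fs in RATES:
    print(f"50 Hz tone,  fs = {fs:7.0f} Hz -> appears at {alias_frequency(50.0, fs):.0f} Hz")

# A hypothetical 550 Hz component shows up at 50 Hz only when fs = 500 Hz.
for fs in RATES:
    print(f"550 Hz tone, fs = {fs:7.0f} Hz -> appears at {alias_frequency(550.0, fs):.0f} Hz")
```

If a peak shifts between the 500 Hz and 666 Hz recordings, this rule suggests its true frequency lies above the Nyquist limit of at least one of those rates, rather than at 50 Hz.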