Nothing is perfect, so we define an "ideal" to compare against. For example, an ideal resistor obeys R = V/I exactly.

In manufacturing, "quality control" is essentially figuring out how to get closer to the ideal. It is an iterative feedback process: Create -> Measure -> Modify -> Create. This is called "learning".

The measurement process is simple: take your device, measure its properties, and compare them to what you want (the ideal).
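As a rough illustration of the "measure and compare to the ideal" step, here is a minimal sketch. The nominal value (1000 ohm), the ±1% tolerance, and the readings are all hypothetical numbers chosen for the example, not taken from any real part or spec.

```python
# Minimal sketch: compare measured values against an ideal (nominal) value.
# NOMINAL_OHMS, TOLERANCE and the readings are hypothetical, not from a real spec.
NOMINAL_OHMS = 1000.0   # the "ideal" resistance
TOLERANCE = 0.01        # allowed relative deviation (±1 %)


def deviation(measured: float, nominal: float) -> float:
    """Relative deviation of a measurement from the nominal value."""
    return (measured - nominal) / nominal


def within_spec(measured: float, nominal: float = NOMINAL_OHMS,
                tol: float = TOLERANCE) -> bool:
    """True if the measurement lies within ±tol of the nominal value."""
    return abs(deviation(measured, nominal)) <= tol


# Hypothetical bench readings in ohms (illustrative, not measured data).
readings = [998.7, 1004.2, 1012.5, 995.1]
for r in readings:
    print(f"{r:7.1f} ohm  deviation {deviation(r, NOMINAL_OHMS):+.2%}  "
          f"in spec: {within_spec(r)}")
```

Here the 1012.5 ohm reading (+1.25%) is the one that falls outside the assumed ±1% window.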

Of course, what really is the ideal? Different people define it differently. The ideal for consumer parts is different from the ideal for military parts, and so on.

Suppose your ideal includes a lifespan of, say, 1000 years, and you want the device to vary by only 1% over that time. Well, you can measure that. A variation of 1% over 1000 years is 0.01% over 10 years (assuming the drift is linear in time, which we can approximately verify by measurement).
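The arithmetic behind that scaling is just a proportion. The sketch below assumes, as the text does, that drift grows linearly with time; the 1-year test result in the second half is a hypothetical number for illustration.

```python
def scale_linear_drift(drift: float, from_years: float, to_years: float) -> float:
    """Rescale a drift from one time span to another, assuming drift grows
    linearly with time (the same assumption made in the text)."""
    return drift * to_years / from_years


# Spec from the text: at most 1 % change over a 1000-year life.
allowed_10y = scale_linear_drift(0.01, 1000, 10)
print(f"allowed drift after 10 years: {allowed_10y:.4%}")  # 0.0100%

# Going the other way: a hypothetical 0.0005 % drift seen in a 1-year test
# extrapolates to 0.5 % over 1000 years, inside the 1 % budget.
projected = scale_linear_drift(0.000005, 1, 1000)
print(f"projected 1000-year drift: {projected:.2%}, in budget: {projected <= 0.01}")
```

If the drift turns out not to be linear (for example, fast early drift that then levels off), this simple proportion no longer holds and the measurements have to be modeled differently.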

Suppose we want at most 1% variation over a temperature range of 100 °C. The same thing applies: we set up a mock use case and do measurements.
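One way to express "1% over 100 °C" is as a temperature coefficient: 1% / 100 °C = 0.01%/°C = 100 ppm/°C. The sketch below assumes a constant (linear) temperature coefficient, which real parts only approximate, and the two readings are hypothetical.

```python
def required_tempco_ppm(max_change: float, delta_t_c: float) -> float:
    """Largest temperature coefficient (ppm/°C) that keeps a linear change
    within max_change (a fraction) over a span of delta_t_c degrees."""
    return max_change / delta_t_c * 1e6


def measured_tempco_ppm(r_cold: float, r_hot: float,
                        t_cold: float, t_hot: float) -> float:
    """Average temperature coefficient (ppm/°C) from two measurements."""
    return (r_hot - r_cold) / r_cold / (t_hot - t_cold) * 1e6


limit = required_tempco_ppm(0.01, 100.0)
print(f"tempco limit: {limit:.0f} ppm/°C")  # 100 ppm/°C

# Hypothetical readings of a 1 kohm part at 0 °C and 100 °C.
tc = measured_tempco_ppm(1000.0, 1005.0, 0.0, 100.0)
print(f"measured: {tc:.0f} ppm/°C, meets spec: {abs(tc) <= limit}")
```

Two readings only give an average slope; measuring at several temperatures across the range would show whether the linear assumption is reasonable.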

The measurements are what tell us how our devices behave. After all, all devices are fundamentally the same, just with radically different properties. An inductor is a resistor is a capacitor; they just behave differently. A capacitor is a very bad resistor, and we could just throw all such devices away. But capacitors have very good uses. Any resistor that behaves like a capacitor is a capacitor. That seems nonsensical, but it is how we come up with stuff. Everything is useful; it is just a matter of finding the application for it.
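To make "a resistor that behaves like a capacitor" concrete, here is a sketch using the simplest parasitic model: an ideal resistor with a small capacitance in parallel. The 1 Mohm and 1 pF values are hypothetical, and real parts also have series inductance, which this model ignores.

```python
import cmath
import math


def impedance_r_parallel_c(r_ohms: float, c_farads: float, f_hz: float) -> complex:
    """Impedance of an ideal resistor with a parasitic capacitance in parallel:
    Z = R / (1 + j*2*pi*f*R*C)."""
    omega = 2 * math.pi * f_hz
    return r_ohms / (1 + 1j * omega * r_ohms * c_farads)


# Hypothetical part: 1 Mohm resistor with 1 pF of parasitic capacitance.
R, C = 1e6, 1e-12
corner_hz = 1 / (2 * math.pi * R * C)  # about 159 kHz

for f in (1e3, corner_hz, 1e8):
    z = impedance_r_parallel_c(R, C, f)
    print(f"{f:14.0f} Hz  |Z| = {abs(z):12.1f} ohm  "
          f"phase = {math.degrees(cmath.phase(z)):6.1f} deg")
```

Well below the corner frequency the part looks resistive (|Z| close to R, phase near 0°); well above it the phase approaches -90° and |Z| falls as 1/f, which is how a capacitor behaves.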

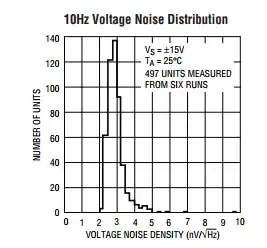

So, with statistics and measurement, we can build a profile of how a device behaves in the "real world". We then modify our manufacturing process to reach the quality we need at a price our target audience can afford.

Now, as far as the statistics are concerned, yes, there is a chance a single device may deviate quite substantially. But if quality control is tight, one can get to the point where, say, only 1 in 10 billion devices deviates significantly.
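If we assume the deviations follow a normal (bell-curve) distribution centered on the nominal value, "1 in 10 billion" can be translated into how many standard deviations away the limit has to sit. This is a sketch under that normality assumption; real production data often has heavier tails.

```python
import math
from statistics import NormalDist


def outlier_rate(k: float) -> float:
    """Fraction of a normal population farther than k standard deviations
    from the mean, counting both tails: P(|Z| > k) = erfc(k / sqrt(2))."""
    return math.erfc(k / math.sqrt(2))


def sigma_level_for_rate(rate: float) -> float:
    """k such that only `rate` of a normal population lies beyond ±k sigma."""
    return -NormalDist().inv_cdf(rate / 2)


for k in range(1, 8):
    print(f"beyond ±{k} sigma: about 1 in {1 / outlier_rate(k):,.0f}")

k = sigma_level_for_rate(1e-10)  # "1 in 10 billion"
print(f"1 in 10 billion needs the limit at about ±{k:.2f} sigma")
```

The loop shows how quickly the tails shrink: each extra sigma of margin cuts the outlier rate by a large factor, which is why tightening the process spread pays off so strongly.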

Statistics only gives you an estimate. You get tolerance intervals, for example: 99.999% of all the resistors made by this process will be within 1%. A few will be a little outside 1%, and even fewer will be further out. Exactly how many depends on the distribution, which is usually bell-shaped (roughly normal).
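Under the same normal-distribution assumption, the "99.999% within 1%" requirement fixes how large the process standard deviation may be. The sketch also fits a normal curve to a small, hypothetical sample of relative deviations; with only eight points, the predicted tail fraction is only as trustworthy as the normality assumption.

```python
from statistics import NormalDist


def max_sigma_for_coverage(tolerance: float, coverage: float) -> float:
    """Largest standard deviation for which `coverage` of a normal population
    centered on nominal stays within ±tolerance."""
    z = NormalDist().inv_cdf(0.5 + coverage / 2)  # two-sided z-score
    return tolerance / z


def fraction_within(tolerance: float, dist: NormalDist) -> float:
    """Predicted fraction of parts whose relative deviation is within ±tolerance."""
    return dist.cdf(tolerance) - dist.cdf(-tolerance)


# Requirement from the text: 99.999 % of resistors within ±1 %.
sigma_max = max_sigma_for_coverage(0.01, 0.99999)
print(f"process sigma must be at most {sigma_max:.3%}")

# Hypothetical relative deviations measured on a small sample (not real data).
sample = [0.0012, -0.0008, 0.0021, -0.0015, 0.0003, 0.0009, -0.0019, 0.0006]
fitted = NormalDist.from_samples(sample)
print(f"estimated mean {fitted.mean:+.3%}, sigma {fitted.stdev:.3%}, "
      f"predicted within ±1 %: {fraction_within(0.01, fitted):.7%}")
```

The required spread works out to roughly 0.23%, i.e. the ±1% limit has to sit at about 4.4 sigma.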

That could seem like a big deal, considering a CPU contains billions of transistors!
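To see why it might seem like a big deal, multiply a tiny per-part rate by a huge part count. This sketch assumes a hypothetical chip with 10 billion devices, each independently having a 1-in-100,000 chance of being outside its tolerance (the 99.999% figure used earlier in this answer); real transistor variation is not specified this way, so the numbers are purely illustrative.

```python
import math


def expected_out_of_tolerance(n_parts: int, p_out: float) -> float:
    """Expected number of parts outside tolerance among n_parts."""
    return n_parts * p_out


def prob_at_least_one(n_parts: int, p_out: float) -> float:
    """P(at least one part out of tolerance), assuming independent parts:
    1 - (1 - p)**n, computed with log1p/expm1 to stay accurate for tiny p."""
    return -math.expm1(n_parts * math.log1p(-p_out))


# Hypothetical chip: 10 billion devices, each with a 1e-5 chance of
# being outside its tolerance.
n, p = 10_000_000_000, 1e-5
print(f"expected out of tolerance: {expected_out_of_tolerance(n, p):,.0f}")
print(f"P(at least one): {prob_at_least_one(n, p):.6f}")
```

Under these assumptions, about 100,000 devices per chip would be "out of tolerance", and essentially every chip would have some. Out of tolerance is not the same as broken, though, which is why circuit robustness matters.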

BUT don't forget that the user of the components also has to make the circuit robust. Very few circuits are so critical that a deviation as small as 0.001% will cause a failure. If they were, most things would fail.

Most circuits are rather robust, even with significant deviations. Maybe your CPU runs a little hotter or slower, or is 0.000001% more likely to crash than mine, but you don't notice it, and neither do I.

The people who design components are usually smart enough not to create devices or circuits with a high likelihood of failure. Why? Because they are responsible for the problems. Intel would rather improve its quality control than have to replace millions of CPUs.

This is also why most datasheets give you some idea of a component's quality and also expect you to stay within reason. Resistors, capacitors, and inductors are expected to work under reasonable usage. If you use a part in an application that is likely to make it fail, then it is probably your fault when it does.

Life is not so cut and dried, and neither is electronics or anything else. Variations happen and are all over the place. The average person generally doesn't notice, simply because the variations are "within" spec. They only notice when something goes wrong, of course, but those are the extreme cases.