USB seems to have only 3.3V as a high level on the data pins, whereas the voltage supplied by USB is 5V. What is the reason behind that?

The short answer is: mostly economics. USB was envisioned as a universal connection between personal computers and their many peripherals, replacing multi-pin connectors such as RS-232 and parallel ports. This was, and still is, a market of billions of units. To be adopted and succeed, the new (in 1995) standard had to be cheap, and re-using the silicon technology and power distribution standards that already existed at the time was the way to achieve that.

As the examples in many comments and answers show, technically there is not much of a relationship between the power supply voltage and the amplitude of the digital signals. USB has two functions: to provide power to a peripheral and to transfer information. These functions have somewhat different aims, so it should be no surprise that their voltage levels are different.

In 1995 the most mature power supply technology was built around +5V as the mainstream voltage level, and all primary power was based on +5V. +5V is still the primary supply in personal computing today. So the choice of +5V for power delivery to USB peripherals was dictated by the economy of scale in the PC market.

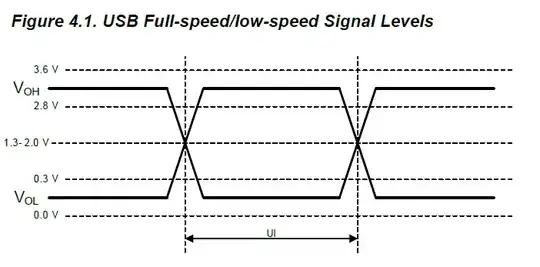

The same goes for the choice of +3.3V signaling amplitude: at that time, 3.3V CMOS technology was taking over from the older 5V TTL (and other) drivers in the consumer segment, and it also provided a path for integrating the USB PHY with the data-processing IC. The most common I/O voltage level on older MCUs is 3.3V.

We also need to keep in mind that whoever holds the leading position in developing a technology gets to dictate the choice of component base at the time the standard is conceived. This was the era of the first Intel Pentium processors.