I am reading about sliding window protocols, and in almost every book it's written:

Frames have sequence number 0 to maximum \$2^n - 1\$.

Why is the maximum \$2^n-1\$?

I interpreted your question as being about a well-known pitfall in protocols such as TCP: failing to provide twice as many sequence numbers as you would appear to need. The rule is that the number of sequence numbers in flight (the maximum window size) may be at most half the number of sequence numbers available given the bits you set aside for them. An example: if you have 11 bits set aside for sequence numbers in your header, you can generate 2048 different integers, so you may only ever send at most 1024 bytes in a single window's worth of data.

As you generate packets in TCP, for example, the sequence number field holds the number of the first byte the packet carries. Once you reach the last number, you cycle back to 0. These are byte numbers, not packet numbers. So why this rule? Because otherwise a receiver could misinterpret a sequence number and apply it to the wrong byte in the stream.

An example: you pick a scheme with 4 sequence numbers, i.e. 2 bits. You number bytes as you send them: 0, 1, 2, 3, 0, 1, 2, 3. The receiver is expecting 0, 1, 2, 3, and as they arrive it makes them available to the waiting application and sends back an acknowledgment. But consider what happens when your four bytes are sent and arrive successfully but the acknowledgments are lost: the receiver has advanced its window and now expects the second round of bytes numbered 0, 1, 2, 3, having received the prior series. The sender, however, eventually resends the original 0, 1, 2, 3, since they were never acknowledged. It is resending its first 0, but the receiver will interpret it as the second 0 and make it available to the waiting application. Disaster. The only way to avoid this is to always have twice as many sequence numbers as you can have bytes in flight (sent but awaiting acknowledgment).
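The lost-acknowledgment scenario above can be sketched in a few lines of Python. This is only an illustration, not how any real TCP stack works: the function name `retransmission_is_ambiguous` is made up, and it assumes a receiver that accepts any sequence number falling inside its current window.

```python
def retransmission_is_ambiguous(seq_bits: int, window: int) -> bool:
    """Return True if a retransmitted old byte would be taken for new data.

    Hypothetical model: the sender transmits `window` bytes starting at
    sequence number 0, the receiver gets all of them and advances its
    window, but every acknowledgment is lost, so the sender times out and
    resends the first byte of the original window.
    """
    modulus = 1 << seq_bits  # number of distinct sequence numbers, 2^n

    # Sequence numbers the receiver is now willing to accept as NEW data.
    expected_next = {(window + i) % modulus for i in range(window)}

    # The sender resends the first byte of the old window (sequence number 0).
    retransmitted = 0
    return retransmitted in expected_next


print(retransmission_is_ambiguous(seq_bits=2, window=4))
print(retransmission_is_ambiguous(seq_bits=2, window=2))
```

With 2 bits and a window of 4, the old 0 lands inside the receiver's new window, so the first call reports an ambiguity. With a window of 2 (half the sequence space), the receiver is expecting 2 and 3, so the stale 0 can be recognized and discarded.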

I think you mean from \$0\$ to a maximum of \$2^n - 1\$.

That's because to represent from \$0\$ to a maximum of \$2^n\$ you'd need a whole extra bit in binary.

For instance, if \$n=8\$, then 0 to 256 (\$2^8\$) is 257 values (zero counts!), which requires 9 bits, but 0 to 255 (\$2^8 - 1\$) is the entire range of 8 bits, or 256 values.
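If you want to check the bit counts yourself, here is a quick Python sketch. The helper name `bits_needed` is just something I made up for illustration; it relies on the built-in `int.bit_length()`.

```python
def bits_needed(value: int) -> int:
    """Number of binary digits needed to write a non-negative integer."""
    # int.bit_length() returns 0 for the value 0, but storing a 0 still
    # takes one bit, so clamp the result to at least 1.
    return max(1, value.bit_length())


n = 8
largest = (1 << n) - 1  # 2^n - 1

print(largest, bits_needed(largest))  # the largest n-bit value
print(1 << n, bits_needed(1 << n))    # 2^n itself needs one more bit
```

So 255 fits in 8 bits, while 256 spills over into a ninth.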

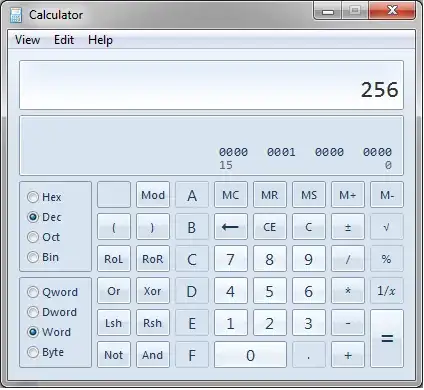

If you have Windows 7, you can play with the "Programmer's" mode on the calculator. It helps visualize some of these concepts. I use it all the time, especially when writing assembly.

Communication protocols usually use rigid message formats which are defined down to the level of octets or individual bits. The "headers" of the protocol messages use simple, fixed-width data types such as 8, 16 or 32 bit numbers.

When such a data type represents a message sequence number, it makes sense to treat that data type as holding a pure binary representation.

The maximum value of an N-bit binary number is \$2^{N} - 1\$.
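As a small sketch of what that means for a counter stored in a fixed-width header field (Python, with a made-up helper name `next_seq`; real protocol code would typically just rely on unsigned integer overflow in C):

```python
def next_seq(seq: int, n_bits: int) -> int:
    """Advance an N-bit sequence number, wrapping to 0 after 2^N - 1."""
    mask = (1 << n_bits) - 1  # 2^N - 1: the largest value, all bits set
    return (seq + 1) & mask   # drop any carry out of the top bit


seq = 253
for _ in range(4):
    seq = next_seq(seq, n_bits=8)
    print(seq)
```

The mask is exactly the maximum value \$2^{N} - 1\$, so incrementing past it rolls the field over to 0.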

The particular width N is chosen based on various criteria, such as how deep the sliding window of the protocol is, the likelihood of problems caused by replayed sequence numbers, and whether there is a need to minimize the size of the protocol header (because the systems which use the protocol are very small, the network has very low bandwidth, or whatever). Another consideration is whether the sequence number merely enumerates packets or also gives a position in a byte stream (as TCP sequence numbers do).

In some situations, using a sequence number which is only 8 bits wide, or even narrower, could be acceptable.

A 3-bit sequence number is not possible if there can be more than eight unacknowledged packets in the sliding window. The first 8 packets can be transmitted with sequence numbers 0 to 7. Then the next sequence number wraps to 0. If packet 0 has not yet been acknowledged as having been received, then sequence number 0 cannot be reused, because packet 0 could have been dropped by the network, and the newly transmitted packet 0 (which is actually packet #8) would be mistaken by the remote end for the first packet, corrupting the transmitted stream.
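To make the collision concrete, here is a rough Python sketch. The function `has_ambiguous_labels` is hypothetical; it simply labels each outstanding packet with its packet number modulo \$2^N\$ and checks whether two unacknowledged packets end up with the same label.

```python
def has_ambiguous_labels(outstanding, n_bits: int) -> bool:
    """True if two unacknowledged packets share a sequence number."""
    modulus = 1 << n_bits  # 2^N distinct sequence numbers
    labels = [packet % modulus for packet in outstanding]
    return len(set(labels)) < len(labels)


# Packets #0..#7 outstanding: labels 0..7, all distinct.
print(has_ambiguous_labels(range(8), n_bits=3))

# Packets #0..#8 outstanding: packet #8 reuses label 0 while #0 is unacked.
print(has_ambiguous_labels(range(9), n_bits=3))

# Packet #0 acknowledged, #1..#8 outstanding: label 0 is free again.
print(has_ambiguous_labels(range(1, 9), n_bits=3))
```

This only models labels colliding inside the sender's window; it says nothing about stale copies still wandering around in the network.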

Note that the range 0 to 7 isn't necessarily enough. Suppose that the other end is not acknowledging the first packet, so it has to be retransmitted several times. Suppose it then acknowledges the packet, and so the protocol advances to packet 8, which reuses sequence number 0. But suppose that there are still copies of the original packet 0 in transit. These packets are ambiguous against the new 0. For this reason, protocols have to take the network into account. Does the network have routers which store and forward many packets? If so, you need a sequence number range well in excess of your sliding window size, so that when sequence numbers are recycled, there is no chance of such ambiguity, or at least the chance is vanishingly small.

A discussion about designing protocols and protocol packet formats is "too broad"; moreover, this site might not be the best fit, unless it is about a specific protocol used in embedded systems.

"\$2^n-1\$" should probably be written "\$2^n - 1\$", where the sequence numbers occupy \$n\$ bits. If the sequence number occupies 3 bits, the possible values are 0 to 7, because \$2^3\$ is 8.