Moments after copying a large file on my primary SSD, the IO PSI value goes up to more than 90% and the system becomes very unresponsive. This takes around a minute to go back to normal.

I also noticed freezing problems when doing things like docker pull, but was able to reproduce what I believe to be the same problem, using rsync now.

The problem does not seem to happen when the test file is located in the second SSD.

Reproducible example:

Inspired by this post I am logging load and IO PSI to a CSV file every second.

In the first terminal session I run

rm monitor.csv; t=0; while true; do t=$(($t+1)); echo $s,$(cat /proc/loadavg | cut -d ' ' -f1),$(grep some /proc/pressure/cpu | cut -d' ' -f2 | cut -d= -f2),$(grep some /proc/pressure/io | cut -d' ' -f2 | cut -d= -f2),$(grep full /proc/pressure/io | cut -d' ' -f2 | cut -d= -f2) >> monitor.csv; sleep 1; done

I copy a 3,3 GB testfile on the primary SSD:

rm test.zip ; echo "start rsync" >> monitor.csv ; rsync original.zip test.zip ; echo "end rsync" >> monitor.csv

Now the monitor.csv looks like this:

1,0.64,0.18,0.00,0.00

2,0.67,0.14,0.00,0.00

3,0.67,0.14,0.00,0.00

4,0.67,0.12,0.00,0.00

start rsync

5,0.67,0.12,0.00,0.00

6,0.67,0.09,0.00,0.00

7,0.70,0.09,0.00,0.00

8,0.70,0.08,0.00,0.00

end rsync

9,0.70,0.08,0.00,0.00

10,0.70,0.42,6.15,5.79

11,0.70,0.42,6.15,5.79

12,0.80,0.35,16.99,15.97

13,0.80,0.35,16.99,15.97

14,0.80,0.28,30.94,29.38

15,0.80,0.28,30.94,29.38

16,1.14,0.23,43.09,41.27

17,1.14,0.23,51.59,49.73

18,1.14,0.19,51.59,49.73

19,1.14,0.19,51.59,49.73

20,1.14,0.33,58.00,55.40

21,2.57,0.27,62.89,60.22

22,2.57,0.27,62.89,60.22

23,2.57,0.22,67.80,65.25

24,2.57,0.22,67.80,65.25

25,2.57,0.18,72.37,69.73

26,3.57,0.18,72.37,69.73

27,3.57,0.15,75.38,72.68

28,3.57,0.15,75.38,72.68

29,3.57,0.12,79.48,76.90

30,3.57,0.12,79.48,76.90

31,4.56,0.10,83.01,80.36

32,4.56,0.10,83.01,80.36

33,4.56,0.08,84.46,81.38

34,4.56,0.08,84.46,81.38

35,4.56,0.06,86.37,83.12

36,5.24,0.06,86.37,83.12

37,5.24,0.05,86.66,83.10

38,5.24,0.05,86.66,83.10

39,5.24,0.04,87.09,82.90

40,5.24,0.04,87.09,82.90

41,5.94,0.03,84.71,80.02

42,5.94,0.03,84.71,80.02

43,5.94,0.02,84.77,79.65

44,5.94,0.02,84.77,79.65

45,5.94,0.02,86.26,80.08

46,6.91,0.02,86.26,80.08

47,6.91,0.01,86.93,80.42

48,6.91,0.01,86.93,80.42

49,6.91,0.01,86.04,79.80

50,6.91,0.01,86.04,79.80

51,7.95,0.01,85.67,79.29

52,7.95,0.01,85.67,79.29

53,7.95,0.01,86.45,79.78

54,7.95,0.01,86.45,79.78

55,7.95,0.00,86.73,79.82

56,8.12,0.00,86.73,79.82

57,8.12,0.18,80.08,73.69

58,8.12,0.18,80.08,73.69

59,8.12,0.33,65.57,60.34

60,7.55,0.33,65.57,60.34

61,7.55,0.27,54.05,49.77

62,7.55,0.27,54.05,49.77

63,7.55,0.22,44.26,40.76

64,7.55,0.18,36.24,33.37

65,7.02,0.18,36.24,33.37

66,7.02,0.18,36.24,33.37

67,7.02,0.14,29.67,27.32

68,7.02,0.12,24.30,22.37

69,7.02,0.12,24.30,22.37

70,6.46,0.10,19.89,18.32

71,6.46,0.10,19.89,18.32

72,6.46,0.08,16.29,15.00

73,6.46,0.08,16.29,15.00

74,6.46,0.06,13.34,12.28

75,5.94,0.06,13.34,12.28

76,5.94,0.05,10.92,10.06

77,5.94,0.05,10.92,10.06

78,5.94,0.04,8.94,8.23

79,5.94,0.04,8.94,8.23

80,5.55,0.03,7.32,6.74

81,5.55,0.03,7.32,6.74

82,5.55,0.02,5.99,5.52

83,5.55,0.02,5.99,5.52

84,5.55,0.02,4.91,4.52

85,5.10,0.02,4.91,4.52

86,5.10,0.01,4.02,3.70

87,5.10,0.01,4.02,3.70

88,5.10,0.01,3.29,3.03

89,5.10,0.01,3.29,3.03

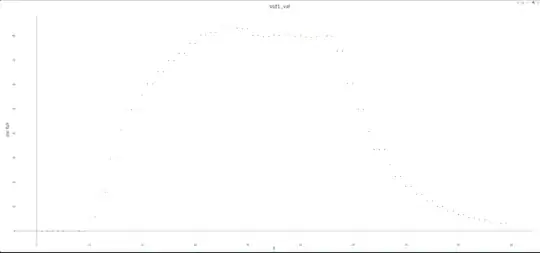

Chart:

- copying the file takes only ~ 5 seconds

- After that the PSI goes very high, and normalizes after a minute

- The system GUI becomes very unresponsive during that time

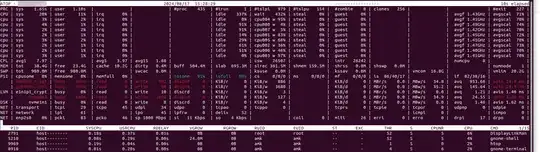

Also a warning is blinking in atop after the copy process:

The same operation with the file located on the second SSD takes a bit longer, but the system does not freeze and the IO PSI value does not spike so badly:

1,2.42,0.00,0.01,0.01

2,2.42,0.00,0.01,0.01

3,2.42,0.00,0.01,0.00

4,2.23,0.00,0.01,0.00

start rsync

5,2.23,0.00,0.00,0.00

6,2.23,0.00,0.00,0.00

7,2.23,0.00,1.27,1.09

8,2.23,0.00,1.27,1.09

9,2.13,0.00,2.85,2.70

10,2.13,0.00,2.85,2.70

11,2.13,0.18,3.42,3.30

12,2.13,0.18,3.42,3.30

13,2.13,0.14,4.25,4.15

14,2.04,0.14,4.25,4.15

15,2.04,0.12,5.11,5.03

16,2.04,0.12,5.11,5.03

17,2.04,3.72,9.44,7.02

end rsync

18,2.04,3.72,9.44,7.02

19,1.88,3.04,8.63,6.65

20,1.88,3.04,8.63,6.65

21,1.88,2.49,7.07,5.44

22,1.88,2.49,7.07,5.44

23,1.73,2.04,5.79,4.46

24,1.73,2.04,5.79,4.46

25,1.73,1.67,4.74,3.65

26,1.73,1.67,4.74,3.65

27,1.73,1.36,3.88,2.99

28,1.59,1.36,3.88,2.99

29,1.59,1.12,3.17,2.44

30,1.59,1.12,3.17,2.44

System Details

Lenovo E14

Ubuntu 22.04

Gnome & Wayland

CPU AMD Ryzen 7 4700U with Radeon Graphics

2 NVME SSDs built in, both with encrypted file system

- primary SSD: (mvme0)

- Model Number: KBG40ZNT512G TOSHIBA MEMORY

- Firmware Version: 0109AELA (seems to be the latest available)

- secondary SSD: (nvme1)

- Model Number: CT500P3SSD8

- primary SSD: (mvme0)

Schedulers are the same on both devices:

$ cat /sys/block/nvme0n1/queue/scheduler

[none] mq-deadline

$ cat /sys/block/nvme1n1/queue/scheduler

[none] mq-deadline

Theories / Questions:

- is the primary SSD just broken?

- does it have to do with a limited amount of PCI-E channels in the system?

Tried

- I tried Kernel 6.8.0-40-generic (standard on my system), as well as 6.5.0-45 - but behave the same in this regards.

EDIT1 (as per @ubfan1's comment)

- Trim seems to run more on the second device (without the problem). On the primary device it does not run so often - is this good or bad?

$ journalctl |grep "fstrim\["|grep nvme0

Jul 01 08:32:31 example fstrim[27758]: /boot/efi: 504.9 MiB (529436672 bytes) trimmed on /dev/nvme0n1p1

Jul 01 08:32:31 example fstrim[27758]: /boot: 1.2 GiB (1269686272 bytes) trimmed on /dev/nvme0n1p2

Jul 08 09:53:27 example fstrim[50828]: /boot/efi: 504.9 MiB (529436672 bytes) trimmed on /dev/nvme0n1p1

Jul 08 09:53:27 example fstrim[50828]: /boot: 1.2 GiB (1269567488 bytes) trimmed on /dev/nvme0n1p2

Jul 15 09:43:17 example fstrim[46889]: /boot/efi: 504.9 MiB (529436672 bytes) trimmed on /dev/nvme0n1p1

Jul 15 09:43:17 example fstrim[46889]: /boot: 1.2 GiB (1269567488 bytes) trimmed on /dev/nvme0n1p2

Jul 22 12:33:55 example fstrim[14093]: /boot/efi: 504.9 MiB (529436672 bytes) trimmed on /dev/nvme0n1p1

Jul 22 12:33:55 example fstrim[14093]: /boot: 1.2 GiB (1267404800 bytes) trimmed on /dev/nvme0n1p2

Jul 29 10:15:54 example fstrim[63916]: /boot/efi: 504.9 MiB (529436672 bytes) trimmed on /dev/nvme0n1p1

Jul 29 10:15:54 example fstrim[63916]: /boot: 1.2 GiB (1267404800 bytes) trimmed on /dev/nvme0n1p2

Aug 05 09:37:39 example fstrim[46612]: /boot/efi: 504.9 MiB (529436672 bytes) trimmed on /dev/nvme0n1p1

Aug 05 09:37:39 example fstrim[46612]: /boot: 1.2 GiB (1269469184 bytes) trimmed on /dev/nvme0n1p2

Both device are built-in, not connected via USB

Smartctl

smartctl 7.2 2020-12-30 r5155 [x86_64-linux-6.8.0-40-generic] (local build)

Copyright (C) 2002-20, Bruce Allen, Christian Franke, www.smartmontools.org

=== START OF INFORMATION SECTION ===

Model Number: KBG40ZNT512G TOSHIBA MEMORY

Serial Number: 30TPxxxxxxx

Firmware Version: 0109AELA

PCI Vendor/Subsystem ID: 0x1e0f

IEEE OUI Identifier: 0x8ce38e

Total NVM Capacity: 512.110.190.592 [512 GB]

Unallocated NVM Capacity: 0

Controller ID: 0

NVMe Version: 1.3

Number of Namespaces: 1

Namespace 1 Size/Capacity: 512.110.190.592 [512 GB]

Namespace 1 Formatted LBA Size: 512

Namespace 1 IEEE EUI-64: 8ce38e 0400911b0e

Local Time is: Tue Aug 20 08:58:37 2024 CEST

Firmware Updates (0x14): 2 Slots, no Reset required

Optional Admin Commands (0x001f): Security Format Frmw_DL NS_Mngmt Self_Test

Optional NVM Commands (0x005f): Comp Wr_Unc DS_Mngmt Wr_Zero Sav/Sel_Feat Timestmp

Log Page Attributes (0x0e): Cmd_Eff_Lg Ext_Get_Lg Telmtry_Lg

Maximum Data Transfer Size: 512 Pages

Warning Comp. Temp. Threshold: 82 Celsius

Critical Comp. Temp. Threshold: 86 Celsius

Supported Power States

St Op Max Active Idle RL RT WL WT Ent_Lat Ex_Lat

0 + 3.50W - - 0 0 0 0 1 1

1 + 2.60W - - 1 1 1 1 1 1

2 + 1.80W - - 2 2 2 2 1 1

3 - 0.0500W - - 4 4 4 4 800 1200

4 - 0.0050W - - 4 4 4 4 3000 32000

Supported LBA Sizes (NSID 0x1)

Id Fmt Data Metadt Rel_Perf

0 + 512 0 3

1 - 4096 0 1

=== START OF SMART DATA SECTION ===

SMART overall-health self-assessment test result: PASSED

SMART/Health Information (NVMe Log 0x02)

Critical Warning: 0x00

Temperature: 29 Celsius

Available Spare: 100%

Available Spare Threshold: 10%

Percentage Used: 12%

Data Units Read: 41.196.433 [21,0 TB]

Data Units Written: 54.357.811 [27,8 TB]

Host Read Commands: 792.033.014

Host Write Commands: 1.093.950.301

Controller Busy Time: 10.506

Power Cycles: 3.308

Power On Hours: 6.391

Unsafe Shutdowns: 4

Media and Data Integrity Errors: 0

Error Information Log Entries: 44

Warning Comp. Temperature Time: 0

Critical Comp. Temperature Time: 0

Temperature Sensor 1: 29 Celsius

Error Information (NVMe Log 0x01, 16 of 256 entries)

No Errors Logged