I understand both terms, linear regression and maximum likelihood, but when it comes to the math, I am totally lost. I am reading the article *The Principle of Maximum Likelihood* (by Suriyadeepan Ramamoorthy). It is really well written, but, as I said, I don't get the math.

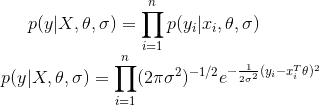

Assuming $y$ is normally distributed, the joint probability of the observations $y$ given the parameters $\theta$ and $\sigma$ (the likelihood) is:

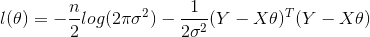
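Written out explicitly, the likelihood is a product of Gaussian densities, one per observation. This uses my own notation, which may not match the article's images: $N$ independent observations with $y_i = \theta^\top x_i + \epsilon_i$ and $\epsilon_i \sim \mathcal{N}(0, \sigma^2)$.

$$
L(\theta, \sigma) = p(y \mid X, \theta, \sigma) = \prod_{i=1}^{N} \frac{1}{\sqrt{2\pi\sigma^2}} \exp\!\left(-\frac{(y_i - \theta^\top x_i)^2}{2\sigma^2}\right)
$$

Taking the logarithm turns this product into a sum, which is the log-likelihood $l(\theta)$.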

Maximizing this is equivalent to maximizing the log-likelihood:

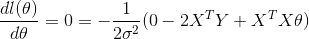
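The log step can be checked with a small Python sketch. It assumes a one-feature model $y_i = \theta_0 + \theta_1 x_i + \epsilon_i$ with a known $\sigma$ and made-up toy data; none of these values come from the article. It shows that the log of the product of Gaussian densities equals $-\frac{N}{2}\log(2\pi\sigma^2) - \frac{1}{2\sigma^2}\sum_i (y_i - \theta_0 - \theta_1 x_i)^2$, so a larger log-likelihood always means a smaller sum of squared errors.

```python
import math

# Hypothetical toy data (not from the article): y is roughly 1 + 2x plus noise.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.1, 2.9, 5.2, 6.8, 9.1]
sigma = 0.5  # noise standard deviation, assumed known


def gaussian_pdf(y, mean, sigma):
    """Density of N(mean, sigma^2) evaluated at y."""
    return math.exp(-((y - mean) ** 2) / (2 * sigma**2)) / math.sqrt(2 * math.pi * sigma**2)


def likelihood(theta):
    """L(theta): product of the per-observation Gaussian densities."""
    t0, t1 = theta
    prod = 1.0
    for x, y in zip(xs, ys):
        prod *= gaussian_pdf(y, t0 + t1 * x, sigma)
    return prod


def sse(theta):
    """Sum of squared residuals for the line y = t0 + t1 * x."""
    t0, t1 = theta
    return sum((y - (t0 + t1 * x)) ** 2 for x, y in zip(xs, ys))


def log_likelihood(theta):
    """Closed form: l(theta) = -N/2 * log(2*pi*sigma^2) - SSE(theta) / (2*sigma^2)."""
    n = len(xs)
    return -n / 2 * math.log(2 * math.pi * sigma**2) - sse(theta) / (2 * sigma**2)


theta_a = (1.0, 2.0)  # close to the data
theta_b = (0.0, 2.5)  # a worse fit

# The log of the product matches the closed form (up to floating-point error).
print(math.isclose(math.log(likelihood(theta_a)), log_likelihood(theta_a)))  # True
# Smaller SSE goes together with larger log-likelihood when sigma is fixed.
print(sse(theta_a) < sse(theta_b), log_likelihood(theta_a) > log_likelihood(theta_b))  # True True
```

Because $\log$ is strictly increasing, the $\theta$ that maximizes $L$ also maximizes $l$, and with $\sigma$ fixed that is the $\theta$ minimizing the squared error, i.e. ordinary least squares.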

The maximum can then be found by setting the derivative of $l(\theta)$ to zero:
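A worked version of this step, in matrix form. This is my assumption and the article's symbols may differ: $X$ is the design matrix with one row $x_i^\top$ per observation, so that $l(\theta) = -\frac{N}{2}\log(2\pi\sigma^2) - \frac{1}{2\sigma^2}(y - X\theta)^\top(y - X\theta)$.

$$
\nabla_\theta\, l(\theta) = \frac{1}{\sigma^2} X^\top (y - X\theta) = 0
\quad\Longrightarrow\quad
X^\top X\,\theta = X^\top y
\quad\Longrightarrow\quad
\hat{\theta} = (X^\top X)^{-1} X^\top y
$$

The last step assumes $X^\top X$ is invertible. The factor $1/\sigma^2$ drops out, which is why the maximum-likelihood estimate of $\theta$ coincides with the ordinary least-squares solution.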

I follow everything up to this point, but I don't understand how this function is equivalent to the previous one: