I've been reading DeepMind's Atari paper and I'm trying to understand how to implement experience replay.

Do we update the parameters $\theta$ of the function $Q$ once using all the samples of the minibatch, or separately for each sample in the minibatch?
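Written out with the paper's per-sample squared loss, the two alternatives look like this. The learning rate $\alpha$ and minibatch size $m$ are notation introduced here, and $y_j$ is the target from the algorithm ($r_j$ for a terminal $\phi_{j+1}$, otherwise $r_j + \gamma \max_{a'} Q(\phi_{j+1}, a'; \theta)$), held fixed when differentiating:

$$
\text{per sample:}\quad \theta \leftarrow \theta - \alpha \nabla_\theta \big(y_j - Q(\phi_j, a_j; \theta)\big)^2 \quad \text{for } j = 1, \dots, m
$$

$$
\text{per minibatch:}\quad \theta \leftarrow \theta - \alpha \nabla_\theta \sum_{j=1}^{m} \big(y_j - Q(\phi_j, a_j; \theta)\big)^2
$$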

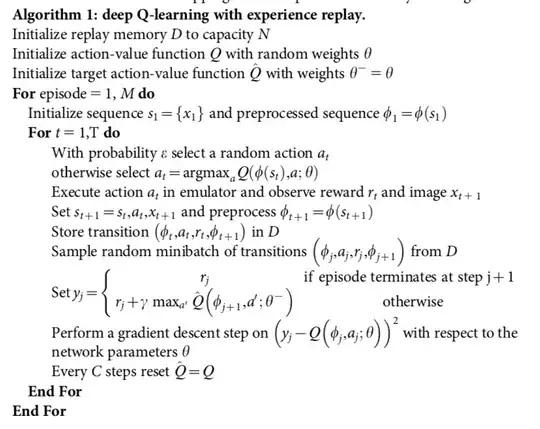

According to the algorithm from the paper shown above, gradient descent is performed on the loss term of the $j$-th sample. However, I have seen other papers (citing this one) that say we should first compute the sum of the loss terms over all samples in the minibatch and then perform gradient descent on that sum.
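To make the difference between the two readings concrete, here is a minimal sketch in Python/NumPy. It assumes a hypothetical linear Q-function $Q(s, a; \theta) = \theta_a \cdot s$ and treats each target $y_j$ as a constant. The helper names (`q_value`, `sample_grad`, `minibatch_update`, `per_sample_updates`) are made up for illustration; this is not DeepMind's implementation.

```python
import numpy as np


def q_value(theta, s, a):
    # Hypothetical linear Q-function: Q(s, a; theta) = theta[a] . s
    return theta[a] @ s


def sample_grad(theta, s, a, y):
    # Gradient of the squared loss (y - Q(s, a; theta))^2 w.r.t. theta,
    # treating the target y as a constant.
    g = np.zeros_like(theta)
    g[a] = -2.0 * (y - q_value(theta, s, a)) * s
    return g


def minibatch_update(theta, batch, alpha):
    # ONE step on the summed loss: every per-sample gradient is
    # evaluated at the same theta, then the gradients are added.
    total = sum(sample_grad(theta, s, a, y) for s, a, y in batch)
    return theta - alpha * total


def per_sample_updates(theta, batch, alpha):
    # One step PER sample: each gradient is evaluated at the theta
    # produced by the previous sample's update.
    for s, a, y in batch:
        theta = theta - alpha * sample_grad(theta, s, a, y)
    return theta


# Tiny example: one action, one feature, two identical samples (s=1, a=0, y=1).
theta0 = np.zeros((1, 1))
batch = [(np.array([1.0]), 0, 1.0), (np.array([1.0]), 0, 1.0)]
alpha = 0.1

theta_batch = minibatch_update(theta0, batch, alpha)
theta_seq = per_sample_updates(theta0, batch, alpha)
print(round(float(theta_batch[0, 0]), 4))  # 0.4
print(round(float(theta_seq[0, 0]), 4))    # 0.36
```

With a single-sample minibatch the two functions coincide. They diverge as soon as two samples affect the same parameters, because the per-sample version evaluates later gradients at already-updated weights. Using the mean instead of the sum in `minibatch_update` would only rescale the step by $1/m$.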