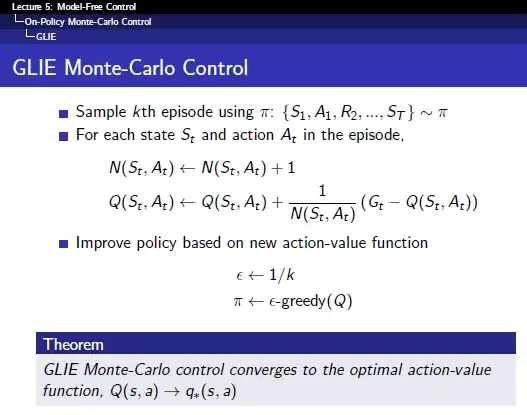

In this case, $\pi$ is always an $\epsilon$-greedy policy. In every iteration, this $\pi$ is used to generate a trajectory ($\epsilon$-greedily), from which the new $Q(s, a)$ values are calculated. The last line of the pseudocode tells you that $\pi$ is replaced by a new $\epsilon$-greedy policy, derived from the updated $Q$, for the next iteration. Since the policy that is improved and the policy that is sampled from are the same, the learning method is considered an on-policy method.

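If the last line of the pseudocode were $\mu \leftarrow \epsilon\text{-greedy}(Q)$ instead, it would be an off-policy method, because the policy being improved ($\mu$) would then differ from the policy $\pi$ used to generate the trajectories.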
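To make the on-policy loop concrete, here is a minimal Python sketch. It is not the exact algorithm from the pseudocode above, just an illustration under stated assumptions: the toy chain environment (`step`, `N_STATES`, `ACTIONS`), the every-visit Monte Carlo update, the constant `epsilon`, and all function names are hypothetical choices made for this example.

```python
import random
from collections import defaultdict

# Hypothetical toy chain MDP (an assumption, not from the question):
# states 0..3, state 3 is terminal.
N_STATES = 4
ACTIONS = (0, 1)  # 0 = left, 1 = right


def step(state, action):
    """Deterministic move; reward 1.0 only on reaching the terminal state."""
    nxt = max(0, state - 1) if action == 0 else state + 1
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done


def epsilon_greedy(Q, epsilon, rng):
    """Build a policy (state -> action) from a snapshot of the greedy actions under Q."""
    greedy = {s: max(ACTIONS, key=lambda a: Q[(s, a)]) for s in range(N_STATES - 1)}

    def policy(state):
        if rng.random() < epsilon:
            return rng.choice(ACTIONS)  # explore
        return greedy[state]            # exploit

    return policy


def on_policy_mc_control(episodes=2000, epsilon=0.2, gamma=0.9, max_steps=100, seed=0):
    rng = random.Random(seed)
    Q = defaultdict(float)  # action-value estimates
    N = defaultdict(int)    # visit counts for incremental means
    pi = epsilon_greedy(Q, epsilon, rng)

    for _ in range(episodes):
        # Sample a trajectory by following pi itself.
        state, trajectory = 0, []
        for _t in range(max_steps):
            action = pi(state)
            nxt, reward, done = step(state, action)
            trajectory.append((state, action, reward))
            state = nxt
            if done:
                break

        # Every-visit Monte Carlo update of Q from the sampled returns.
        G = 0.0
        for s, a, r in reversed(trajectory):
            G = gamma * G + r
            N[(s, a)] += 1
            Q[(s, a)] += (G - Q[(s, a)]) / N[(s, a)]

        # Improve the same policy that generated the data: this is the on-policy step.
        pi = epsilon_greedy(Q, epsilon, rng)

    return Q, pi
```

Calling `Q, pi = on_policy_mc_control()` returns the learned action values and the final $\epsilon$-greedy policy. The last statement inside the episode loop plays the role of $\pi \leftarrow \epsilon\text{-greedy}(Q)$. Assigning the result to a separate variable `mu` while continuing to sample trajectories from an unchanged `pi` would correspond to the off-policy case.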