Summary

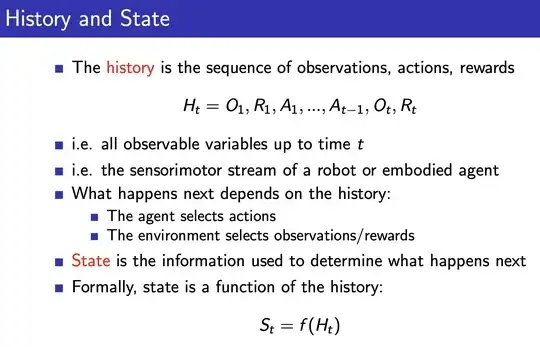

In David Silver's RL lecture slides, he defines the State $S_t$ formally as a function of the history:

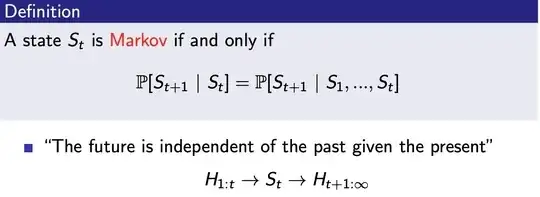

David then goes on to define a Markov state as any state $S_t$ such that the next state is conditionally independent of all earlier states given $S_t$. He adds a second detail: this also implies the Markov chain $H_{1:t} \to S_t \to H_{t:\infty}$.

Confusion

I am immediately thrown off by this definition. First of all, the state is defined as $f(H_t)$, where $f$ can be any function. So is the constant function $f(H_t) = 1$ a state? If so, the state $S_t = 1$, $t \in \mathbb{R}_+$, must also be a Markov state, because it satisfies the definition of a Markov state:

$$\mathbb{P}[S_{t+1} \mid S_t] = \mathbb{P}[S_{t+1} \mid S_1, \dots, S_t].$$
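
Spelling the check out for the constant state (a short worked version, assuming $S_t = 1$ with probability one for every $t$, so every conditioning event below has probability one):

$$\mathbb{P}[S_{t+1} = 1 \mid S_t = 1] = 1 = \mathbb{P}[S_{t+1} = 1 \mid S_1 = 1, \dots, S_t = 1].$$

Both sides are the same point mass at $1$, so the equality holds trivially.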

If we worry about $S_t$ not being a random variable (though it is), the same thing applies if we instead define $f$ so that $f(H_t) \sim \mathcal{N}(0, 1)$, $t \in \mathbb{R}_+$.

Yet $S_t = f(H_t) = 1$ cannot possibly imply the Markov chain $H_{1:t} \to S_t \to H_{t:\infty}$: the history $H$ is quite informative, and a constant function that throws away all of that information cannot be a sufficient statistic of the history.
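
To make this objection concrete, here is a minimal sketch in Python. Everything in it is my own illustrative assumption rather than something from the slides: a hypothetical two-valued observation process with a "sticky" transition matrix `P`, its stationary distribution `pi`, and the helper name `constant_state`. It compares the prediction of the next observation given only $S_t$ with the prediction given $S_t$ and the history.

```python
import numpy as np

# Hypothetical "sticky" observation process on {0, 1}; numbers are illustrative only.
# P[i, j] = P[O_{t+1} = j | O_t = i]
P = np.array([[0.9, 0.1],
              [0.1, 0.9]])
pi = np.array([0.5, 0.5])  # stationary distribution of O_t for this P


def constant_state(history):
    """The constant 'state' f(H_t) = 1 from the question (ignores its input)."""
    return 1


# Every history maps to the same state, so S_t carries no information about H_t.
states_seen = {constant_state(h) for h in [(0,), (1,), (0, 1), (1, 1, 0)]}

# Conditioning on S_t = 1 alone is conditioning on a sure event,
# so the prediction is just the marginal probability of O_{t+1} = 1.
p_given_state = float(pi @ P[:, 1])

# Conditioning on the history as well: for this process only the last
# observation matters, and it changes the prediction.
p_given_state_and_history = {o: float(P[o, 1]) for o in (0, 1)}

print("states seen:", states_seen)
print("P[O_{t+1}=1 | S_t=1]      =", p_given_state)
print("P[O_{t+1}=1 | S_t=1, O_t] =", p_given_state_and_history)
```

In this toy process the two predictions differ, so the future is not conditionally independent of the history given the constant state, even though the state sequence on its own passes the check above.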

I hope somebody can rigorously explain to me what I am missing from this definition. Another thing I notice is that David did not define $H_t$ as a random variable, though the fact that $f(H_t)$ is a random variable would imply otherwise.