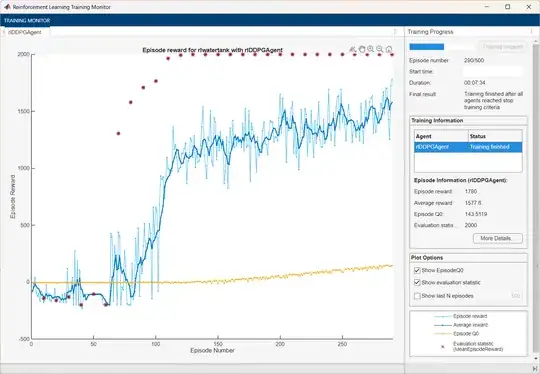

I am new to reinforcement learning. I tried to retrain the agent in this DDPG control example using the exact same configuration (except that the maximum number of training episodes was set to 500), and this is the result I got:

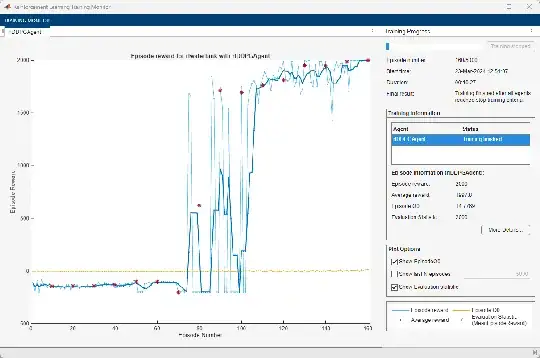

It is quite different from the provided screen capture (below), even though the random seed was fixed for reproducibility.

Even though my training was successful (the evaluation statistic reached 2000), the results of the last training episodes still did not match those of the evaluation episodes. How should I interpret this result? Is this normal?