Intro

I am attempting to approximate the time it takes for a Transformer to generate tokens on a given GPU.

Based on experiments I have run, the approach below significantly underestimates the actual runtime. The predicted runtime does not scale anything like the measured runtime, particularly as n_ctx grows while more tokens are generated and fed back into the Transformer. Correct me if I am wrong, but this rules out my arbitrary choice of the A100's FP32 FLOPS figure instead of its FP16 figure as the explanation, since that choice only changes the estimate by a constant factor.
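To make the constant-factor argument concrete, here is a minimal Python sketch. The peak figures are NVIDIA's published dense A100 numbers (19.5 TFLOPS FP32, 312 TFLOPS FP16 Tensor Core); the FLOP counts are arbitrary placeholders, not measurements. Whatever the FLOP count, switching precision divides the estimate by the same factor of 16:

```python
A100_FP32_PEAK = 19.5e12         # FLOP/s, FP32 (non-tensor-core), NVIDIA datasheet
A100_FP16_TENSOR_PEAK = 312e12   # FLOP/s, FP16 Tensor Core, dense, NVIDIA datasheet


def estimated_seconds(total_flops: float, peak_flops_per_s: float) -> float:
    """Compute-bound estimate: time = FLOPs / (FLOP/s)."""
    return total_flops / peak_flops_per_s


# Placeholder FLOP counts standing in for longer and longer generations.
ratios = []
for total_flops in (1e12, 1e14, 1e16):
    fp32 = estimated_seconds(total_flops, A100_FP32_PEAK)
    fp16 = estimated_seconds(total_flops, A100_FP16_TENSOR_PEAK)
    ratios.append(fp32 / fp16)
    print(f"{total_flops:.0e} FLOPs: FP32 {fp32:.3g} s, FP16 {fp16:.3g} s, ratio {fp32 / fp16:.1f}")
```

The ratio is the same for every FLOP count, so the choice of precision cannot change how the estimate scales with the number of generated tokens.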

Here is the approach:

FLOPs_per_forward_pass = 2N + 2 * n_layer * n_ctx * d_attn, where N is the number of non-embedding parameters (Neural Scaling Laws)
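As a sanity check on the magnitudes involved, the formula above can be evaluated directly. This is a minimal Python sketch; the model dimensions are hypothetical (roughly GPT-2-small scale) and chosen only for illustration:

```python
def flops_per_forward_pass(n_params: float, n_layer: int, n_ctx: int, d_attn: int) -> float:
    """FLOPs = 2N + 2 * n_layer * n_ctx * d_attn (the formula from the question)."""
    return 2 * n_params + 2 * n_layer * n_ctx * d_attn


# Hypothetical model dimensions, for illustration only.
N = 85e6        # non-embedding parameters
N_LAYER = 12
D_ATTN = 768
N_CTX = 1024

flops = flops_per_forward_pass(N, N_LAYER, N_CTX, D_ATTN)
context_term = 2 * N_LAYER * N_CTX * D_ATTN  # the only n_ctx-dependent part
print(f"total: {flops:,.0f} FLOPs, context-dependent share: {context_term / flops:.1%}")
```

Only the second term depends on n_ctx; the 2N term is the same at every context length.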

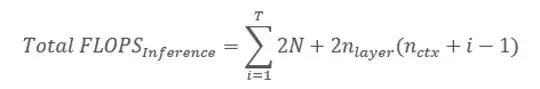

Therefore, an inference call that generates T tokens requires this many FLOPs in total:

FLOPS_per_second_A100 = 19.5 TFLOPS (19.5 × 10^12 FLOP/s) at FP32 (NVIDIA spec sheet)

time = Total_FLOPS_Inference / FLOPS_per_second_A100
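The total-FLOPs expression lives in the image and is not reproduced in text here, so the following is only one possible reading of it, written as a minimal Python sketch. It assumes each generated token costs one forward pass whose n_ctx equals the sequence length at that step (prompt plus tokens generated so far), sums those costs over T tokens, and divides by the FP32 peak quoted above. The helper names, model dimensions, and prompt length are hypothetical:

```python
A100_FP32_PEAK = 19.5e12  # FLOP/s, FP32, NVIDIA A100 spec sheet


def flops_per_forward_pass(n_params: float, n_layer: int, n_ctx: int, d_attn: int) -> float:
    """FLOPs = 2N + 2 * n_layer * n_ctx * d_attn (the formula from the question)."""
    return 2 * n_params + 2 * n_layer * n_ctx * d_attn


def total_inference_flops(n_params: float, n_layer: int, d_attn: int,
                          prompt_len: int, new_tokens: int) -> float:
    # Assumption: one forward pass per generated token, with n_ctx equal to
    # the prompt length plus the number of tokens generated before this step.
    return sum(
        flops_per_forward_pass(n_params, n_layer, prompt_len + t, d_attn)
        for t in range(new_tokens)
    )


def estimated_seconds(total_flops: float, peak_flops_per_s: float = A100_FP32_PEAK) -> float:
    """Pure compute-bound estimate: time = FLOPs / peak FLOP/s."""
    return total_flops / peak_flops_per_s


# Hypothetical model (85M non-embedding params, 12 layers, d_attn = 768), 1-token prompt.
for T in (1, 10, 100):
    total = total_inference_flops(85e6, 12, 768, prompt_len=1, new_tokens=T)
    print(f"T={T:>3}: {total:,.0f} FLOPs -> {estimated_seconds(total):.3e} s")
```

Because this is a pure compute-bound estimate at peak throughput, it yields a lower bound on runtime under these assumptions rather than a prediction.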

Question

Why does this abstraction not work at all when T is greater than 1?

Feel free to school me.