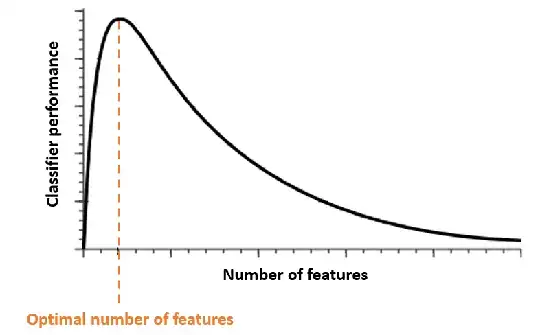

We know that the performance of a machine learning model becomes worse if we feed it too few features or too many features (high-dimensional data). The degradation caused by too many features is known as the curse of dimensionality.

Based on most Google search results, the relationship between performance and the number of features/dimensions looks like the following, where the idea is to find the right number of dimensions to achieve the best performance:

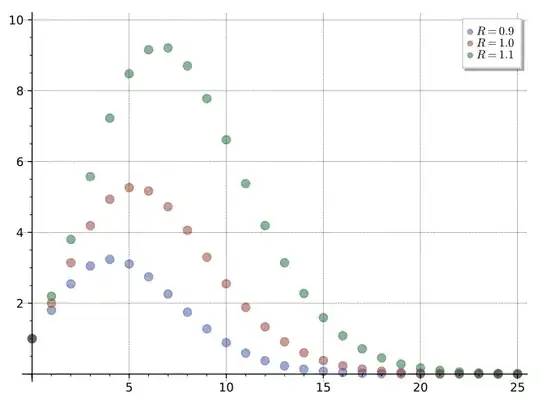

On the other hand, this graph looks similar to the plot of the volume of an n-dimensional ball, where the x-axis represents the dimension n of the ball and the y-axis represents its volume (for n = 2, this is the area of a circle).

Indeed, we can calculate the volume associated with an n-dimensional vector embedding (taking its norm as the radius r) using the hypervolume formula:

$V_n(r)=\frac{\pi^{\frac{n}{2}}r^{n}}{\Gamma(1+\frac{n}{2})}$
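To see the curve this formula produces, here is a minimal sketch that evaluates $V_n(r)$ for the unit ball over a range of dimensions. The function name `ball_volume` is just an illustrative choice; it works in log space with Python's `math.lgamma` so that large n does not overflow `math.gamma`.

```python
import math

def ball_volume(n: int, r: float = 1.0) -> float:
    """Volume of an n-dimensional ball of radius r:
    V_n(r) = pi^(n/2) * r^n / Gamma(1 + n/2).
    Evaluated in log space via math.lgamma to avoid overflow for large n."""
    if n < 0 or r < 0:
        raise ValueError("n and r must be non-negative")
    if n == 0:
        return 1.0  # a 0-dimensional ball is a single point; volume 1 by convention
    if r == 0:
        return 0.0
    log_v = (n / 2) * math.log(math.pi) + n * math.log(r) - math.lgamma(1 + n / 2)
    return math.exp(log_v)

# Unit-ball volumes for n = 1..20
volumes = {n: ball_volume(n) for n in range(1, 21)}
for n, v in volumes.items():
    print(f"n={n:2d}  V_n(1)={v:.5f}")

# Dimension at which the unit-ball volume is largest
peak = max(volumes, key=volumes.get)
print("unit-ball volume peaks at n =", peak)  # prints 5
```

Because $V_n(r) = r^n V_n(1)$, the dimension at which the curve peaks depends on the radius: for r = 1 it is n = 5, and for larger r the peak moves to larger n. For any fixed r, the volume still tends to 0 as n grows.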

So, is there a metric $M$ (analogous to cosine similarity, Euclidean distance, etc.) that uses the Gamma function?

Maybe it is the ratio of the hypervolumes whose radii are the norms of two vectors: $M(a,b)=\frac{V_n(\lVert a\rVert)}{V_n(\lVert b\rVert)}$
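A quick check of the proposed ratio, as a sketch assuming both vectors have the same dimension n and nonzero norms (the helper names below are hypothetical). Since $V_n(r)=\frac{\pi^{n/2}}{\Gamma(1+n/2)}\,r^n$, the $\pi^{n/2}$ and $\Gamma(1+n/2)$ factors cancel in the ratio, leaving $M(a,b)=\left(\frac{\lVert a\rVert}{\lVert b\rVert}\right)^n$. The code computes $M$ both from the full formula (in log space) and from that closed form.

```python
import math

def log_ball_volume(n: int, r: float) -> float:
    # log V_n(r) = (n/2) log(pi) + n log(r) - log Gamma(1 + n/2)
    return (n / 2) * math.log(math.pi) + n * math.log(r) - math.lgamma(1 + n / 2)

def norm(v) -> float:
    # Euclidean (L2) norm
    return math.sqrt(sum(x * x for x in v))

def volume_ratio(a, b) -> float:
    """Hypothetical M(a, b) = V_n(||a||) / V_n(||b||) for nonzero vectors of equal dimension n."""
    if len(a) != len(b):
        raise ValueError("a and b must have the same dimension")
    n = len(a)
    return math.exp(log_ball_volume(n, norm(a)) - log_ball_volume(n, norm(b)))

def volume_ratio_closed_form(a, b) -> float:
    # The pi^(n/2) and Gamma(1 + n/2) factors cancel, leaving (||a|| / ||b||)^n
    return (norm(a) / norm(b)) ** len(a)

a = [3.0, 4.0, 0.0]  # ||a|| = 5
b = [1.0, 2.0, 2.0]  # ||b|| = 3
print(volume_ratio(a, b))              # approx. 125/27 = 4.6296...
print(volume_ratio_closed_form(a, b))  # same value
```

In other words, within a fixed dimension this ratio depends only on the ratio of the norms raised to the power n; the Gamma function itself drops out.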

So, what is the relationship between the curse of dimensionality and the volume of an n-dimensional ball, and what does it have to do with n-dimensional vector embeddings?