Do computational graphs predate the era of machine learning? If so, who first devised the idea of a computational graph?

2 Answers

The idea of representing computations as graphs goes back well before the modern era of ML, which is usually dated to the reinvention and success of backpropagation in the mid-1980s. For example, representing a mathematical expression as a tree, which is a special kind of DAG, is a classical idea that dates to the early days of compiler design in the 1950s and 1960s. Donald Knuth and other researchers developed these ideas in the context of parsing and optimizing expressions. See, for instance, Knuth's 1965 paper "On the Translation of Languages from Left to Right", which discusses techniques for parsing expressions using tree structures and is one of the earlier documented uses of what we now call expression trees in compiler design.

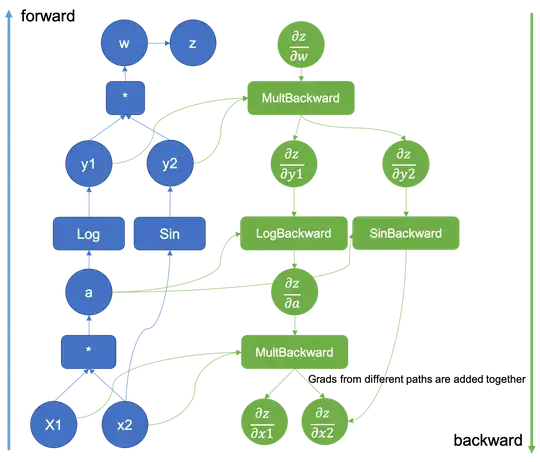
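To make the tree-versus-DAG point concrete, here is a minimal sketch of an expression graph in Python. The names `Num`, `BinOp`, and `evaluate` are hypothetical and chosen for illustration only; this is not how any particular compiler represents expressions.

```python
import operator
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class Num:
    """Leaf node holding a constant."""
    value: float


@dataclass(frozen=True)
class BinOp:
    """Interior node applying a binary operator to two subexpressions."""
    op: str
    left: "Expr"
    right: "Expr"


Expr = Union[Num, BinOp]

# Map operator symbols to the functions that implement them.
OPS = {
    "+": operator.add,
    "-": operator.sub,
    "*": operator.mul,
    "/": operator.truediv,
}


def evaluate(node: Expr) -> float:
    """Evaluate the expression bottom-up by recursing into the children."""
    if isinstance(node, Num):
        return node.value
    return OPS[node.op](evaluate(node.left), evaluate(node.right))


# (2 + 3) * (2 + 3): reusing the same node object for both operands
# turns the strict tree into a DAG with a shared subexpression.
shared = BinOp("+", Num(2.0), Num(3.0))
expr = BinOp("*", shared, shared)
result = evaluate(expr)
```

This naive evaluator recomputes the shared node once per use. A compiler performing common-subexpression elimination would typically cache it instead.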

When it comes to applying the chain rule for differentiation, one of the earliest explicit uses of what we now call a computational graph was in Seppo Linnainmaa's 1970 master's thesis on reverse-mode automatic differentiation.

Explicit, efficient error backpropagation in arbitrary, discrete, possibly sparsely connected, neural-network-like networks was first described in Linnainmaa's 1970 master's thesis, albeit without reference to NNs. There he introduced the reverse mode of automatic differentiation (AD) to efficiently compute the derivative of a differentiable composite function that can be represented as a graph, by recursively applying the chain rule to the building blocks of the function. Linnainmaa published it first, following Gerardi Ostrowski, who had used it in the context of certain process models in chemical engineering some five years earlier but did not publish it.
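As a rough illustration of what "recursively applying the chain rule to the building blocks" of a graph looks like, here is a minimal modern sketch of reverse-mode AD in Python. It is not Linnainmaa's original formulation, and the names `Var`, `sin`, and `backward` are hypothetical choices for this example.

```python
import math


class Var:
    """A node in the computational graph."""

    def __init__(self, value, parents=()):
        self.value = value
        # Each entry is (parent node, local derivative of this node w.r.t. parent).
        self.parents = parents
        self.grad = 0.0

    def __add__(self, other):
        return Var(self.value + other.value, ((self, 1.0), (other, 1.0)))

    def __mul__(self, other):
        return Var(
            self.value * other.value,
            ((self, other.value), (other, self.value)),
        )


def sin(x):
    """Sine as a graph operation; its local derivative is cos(x)."""
    return Var(math.sin(x.value), ((x, math.cos(x.value)),))


def backward(output):
    """Accumulate d(output)/d(node) into node.grad for every node in the graph."""
    order, seen = [], set()

    def visit(node):
        # Depth-first traversal that lists each node after all of its inputs.
        if id(node) in seen:
            return
        seen.add(id(node))
        for parent, _ in node.parents:
            visit(parent)
        order.append(node)

    visit(output)
    output.grad = 1.0
    # Walk from the output back to the inputs, applying the chain rule per edge.
    for node in reversed(order):
        for parent, local in node.parents:
            parent.grad += node.grad * local


x = Var(2.0)
y = Var(3.0)
z = x * y + sin(x)  # z = x*y + sin(x)
backward(z)
# Analytically: dz/dx = y + cos(x) and dz/dy = x.
```

A single backward sweep yields the derivative with respect to every input, which is why the reverse mode is efficient for functions with many inputs and one output, such as a neural network loss.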

Of course, the era of ML itself needs to be defined. It could be said to begin in the 1950s, but that is debatable, and its roots might be traced back much earlier. The modern success of ML could be said to begin in the mid-1980s, with the success and popularization of backpropagation.

Although the earliest machine learning model was introduced in the 1950s, when Arthur Samuel invented a program that calculated the winning chance in checkers for each side, the history of machine learning is rooted in decades of human desire and effort to study human cognitive processes. In 1949, Canadian psychologist Donald Hebb published the book The Organization of Behavior, in which he introduced a theoretical neural structure formed by certain interactions among nerve cells.

Neural networks research had been abandoned by AI and computer science around the same time. This line, too, was continued outside the AI/CS field, as "connectionism", by researchers from other disciplines including John Hopfield, David Rumelhart, and Geoffrey Hinton. Their main success came in the mid-1980s with the reinvention of backpropagation.


According to Goodfellow et al., Deep Learning §6.6 "Historical Notes" p. 221:

Efficient applications of the chain rule based on dynamic programming began to appear in the 1960s and 1970s, mostly for control applications (Kelley, 1960; Bryson and Denham, 1961; Dreyfus, 1962; Bryson and Ho, 1969; Dreyfus, 1973) but also for sensitivity analysis (Linnainmaa, 1976). Werbos (1981) proposed applying these techniques to training artificial neural networks. The idea was finally developed in practice after being independently rediscovered in different ways (LeCun, 1985; Parker, 1985; Rumelhart et al., 1986a). The book Parallel Distributed Processing presented the results of some of the first successful experiments with back-propagation in a chapter (Rumelhart et al., 1986b) that contributed greatly to the popularization of back-propagation and initiated a very active period of research in multilayer neural networks.

• Kelley, H. J. (1960). Gradient theory of optimal flight paths. ARS Journal, 30(10), 947–954.

• Bryson, Jr., A. E. and Denham, W. F. (1961). A steepest-ascent method for solving optimum programming problems. Technical Report BR-1303, Raytheon Company, Missile and Space Division.

• Dreyfus, S. E. (1962). The numerical solution of variational problems. Journal of Mathematical Analysis and Applications, 5(1), 30–45.

• Bryson, A. and Ho, Y. (1969). Applied optimal control: optimization, estimation, and control. Blaisdell Pub. Co.

• Dreyfus, S. E. (1973). The computational solution of optimal control problems with time lag. IEEE Transactions on Automatic Control, 18(4), 383–385.

• Linnainmaa, S. (1976). Taylor expansion of the accumulated rounding error. BIT Numerical Mathematics, 16(2), 146–160.

• Werbos, P. J. (1981). Applications of advances in nonlinear sensitivity analysis. In Proceedings of the 10th IFIP Conference, 31.8 - 4.9, NYC, pages 762–770.

• LeCun, Y. (1985). Une procédure d’apprentissage pour Réseau à seuil assymétrique. In Cognitiva 85: A la Frontière de l’Intelligence Artificielle, des Sciences de la Connaissance et des Neurosciences, pages 599–604, Paris 1985. CESTA, Paris.

• Parker, D. B. (1985). Learning-logic. Technical Report TR-47, Center for Comp. Research in Economics and Management Sci., MIT.

• Rumelhart, D., Hinton, G., and Williams, R. (1986a). Learning representations by back-propagating errors. Nature, 323, 533–536.

• Rumelhart, D. E., Hinton, G. E., and Williams, R. J. (1986b). Learning internal representations by error propagation. In D. E. Rumelhart and J. L. McClelland, editors, Parallel Distributed Processing, volume 1, chapter 8, pages 318–362. MIT Press, Cambridge.
