If I train an autoencoder until it overfits the training set, isn't that a good thing? Since it learns the entire training set (which is considered non-anomalous), shouldn't any new data it fails to reconstruct be anomalous, because it is out of distribution?


1 Answer

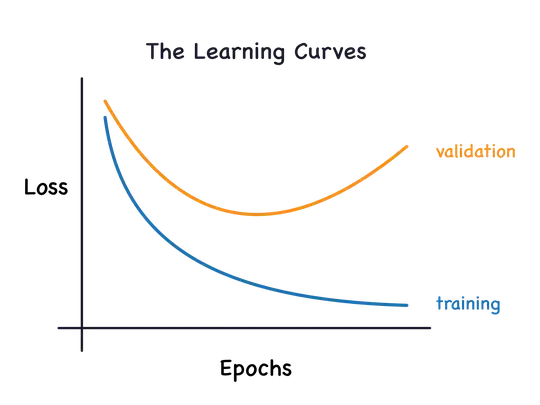

When using an autoencoder for anomaly detection, overfitting is not nearly as bad as it would be for other applications, but it's still not ideal. Consider this example:

In one of my classes we teach students how to use an autoencoder to identify anomalous network traffic. To do so, we train an ensemble of autoencoders, one for each protocol. Training requires that we have examples of each protocol to be identified.

How realistic is it that our training data contains every possible example of a particular class? Unless the network protocol is very simple, the chance is effectively zero. Since we do not have complete coverage of the problem space, the more we overfit to the training data for a particular protocol, the more likely it is that non-anomalous examples will be flagged with a high reconstruction loss simply because they are not close enough to the training examples the autoencoder has memorized. The more overfit the model is, the worse this false-positive problem gets.
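To make this concrete, here is a minimal sketch on synthetic data (not the code from my class). It relies on two stand-ins that are assumptions of mine: a nearest-neighbour lookup plays the role of an extremely overfit autoencoder that has memorized the training set, and a 2-component PCA plays the role of a well-generalizing linear autoencoder. The "normal" data, the mixing matrix `W`, and the max-of-training-error threshold are all hypothetical choices for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
W = rng.normal(size=(2, 10))  # hypothetical mixing matrix: 2 latent factors -> 10 features

def sample_normal(n):
    # Synthetic "non-anomalous" samples: low-dimensional structure plus small noise.
    z = rng.normal(size=(n, 2))
    return z @ W + 0.05 * rng.normal(size=(n, 10))

train = sample_normal(50)      # incomplete coverage of the normal class
held_out = sample_normal(200)  # new, but still non-anomalous

# Stand-in for a generalizing autoencoder: project onto the top 2 principal components.
mean = train.mean(axis=0)
_, _, vt = np.linalg.svd(train - mean, full_matrices=False)
components = vt[:2]

def pca_error(x):
    centered = x - mean
    recon = centered @ components.T @ components
    return ((centered - recon) ** 2).mean(axis=1)

# Stand-in for an extremely overfit autoencoder: "reconstruct" each input
# as the closest memorized training sample.
def memorizer_error(x):
    d2 = ((x[:, None, :] - train[None, :, :]) ** 2).mean(axis=2)
    return d2.min(axis=1)

def false_positive_rate(error_fn):
    # Flag anything that reconstructs worse than the worst training sample.
    threshold = error_fn(train).max()
    return float((error_fn(held_out) > threshold).mean())

fpr_pca = false_positive_rate(pca_error)
fpr_memorizer = false_positive_rate(memorizer_error)
print(f"false positives, generalizing model: {fpr_pca:.2%}")
print(f"false positives, memorizing model:   {fpr_memorizer:.2%}")
```

The memorizing model reconstructs its training data perfectly, so any threshold calibrated on training error is far too tight, and perfectly normal held-out samples end up flagged as anomalies. A real overfit autoencoder is usually less extreme than this lookup table, but it fails in the same direction.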
